For the academically inclined version of this piece, here's the paper. You can also listen to this interview with the author.

“Intelligent” Firewall Management: A Crisis

“So, the machine has high accuracy and explains its decisions, but we still don’t have engagement with our users?” I asked, seeking clarification on a rather perplexing situation. Aware of my prior work in Explainable AI (XAI) around rationale generation, a prominent tech company had just hired me to solve a unique problem. They had invested significant resources to build an AI-powered cybersecurity system that aims to help analysts manage firewall configurations, especially the “bloat” that happens when people forget to close open ports. Over time, these open ports accumulate and create security vulnerabilities. Not only did this system have commendable accuracy, it also tried to explain its decisions via technical (or algorithmic) transparency. But there was little to no traction amongst its users. The question was: why?

“Yeah, that’s the confusing part, isn’t it? I think we just need better models…we need to build better rationales [natural language explanations] … guess that’s why we brought you in!” the team’s director chuckled as we continued the meeting.

Even though I was brought in to solve the problem, there was an underlying assumption that the solution to this AI problem was to “build better AI”. This dominant techno-centric assumption stems from a mythology around Explainable AI. For our purposes, we will call this the “algorithm-centered Explainable AI” myth. It goes something like this: If you can just open the black box, everything else will be fine.

In this article, I will challenge this myth and offer an alternative version of XAI, one that is sociotechnically informed and human-centered. I will use my prior work as well as my experience with the aforementioned cybersecurity project to share the journey to the Human-Centered XAI perspective. This human-centered stance emerges from two key observations.

First, explainability is a human factor; it is a shared meaning-making process that occurs between explainer(s) and explainee(s). For our purposes, we will adopt the overarching definition that an explanation is an answer to a why-question. Implicit in Explainable AI is the question: “explainable to whom?”

Understanding the “who”, that is, the human in the loop, is crucial because it governs the explanation requirements for a given problem. It also scopes how the data is collected, what data can be collected, and the most effective way of describing the why behind an action. For instance, with self-driving cars, the engineer may have different explainability requirements than the rider in that car.

Second, AI systems do not exist in a vacuum; they are situated and operate in a rich tapestry of socio-organizational relationships. Given the direct impact AI systems have on human lives, before we even start deploying anything, don’t you think we need to know more about the stakeholders whose lives will be impacted by our systems?

The cost of failure is high—if we fail to understand who the humans are, it’s inconceivable how we will effectively design meaningful systems.

In fact, as we move from an algorithm-centered perspective to a human-centered one, as we move from AI to XAI and re-center our focus on the human—through Human-centered XAI (HCXAI)—the need to refine our understanding of the “who” increases.

Considering the socially-situated nature of AI systems, our current AI problems are no longer purely technical ones; they are inherently sociotechnical. How can we expect sociotechnical problems to be solved by purely technical means? An algorithm-centered approach can only get us a part of the way. The human-centered approach needs to be sociotechnically informed to address the current challenges. But how might we do that?

My own journey towards a sociotechnically-informed Human-centered XAI has been anything but self-evident. It has been years of work, each “turn” building on the previous one through self-reflection, critique, and refinement. Like Thoreau, I believe the journey is, at times, more than the destination. In that spirit, I would like to give you a sense of that trajectory, which can give you a feel for the evolution of the insights. My hope is that, by vicariously living through my journey, you might be able to develop some intuitions and apply them to your own journey. I will break things down by “turns”—the turn to the machine, turn to the human, and turn to the sociotechnical. I will situate the first two turns using our prior work and return to the cybersecurity story when discussing the turn to the sociotechnical.

Turn to the machine:

In the first phase, we wanted to tackle a technical problem—how can we make an AI agent think out loud in plain English? The goal was to make rationales that anyone, regardless of their AI knowledge, could understand.

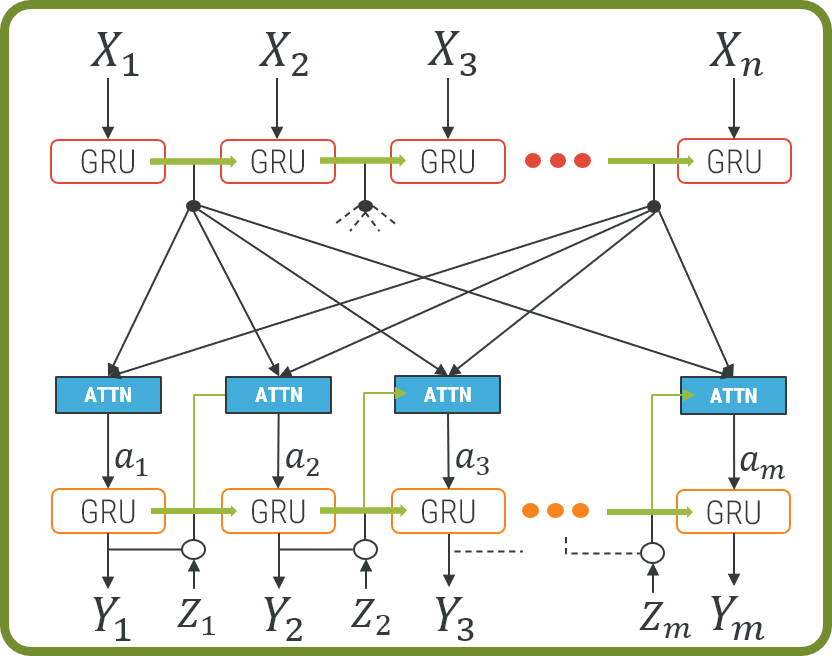

To achieve our goal, we pioneered the notion of Rationale Generation, a process of producing a natural language explanation for agent behavior as if a human had performed the behavior. Conceived as a form of post-hoc interpretability, its technical contribution comes from treating explanation generation in natural language as a translation task using neural machine translation (NMT). We used a deep neural network trained on human explanations to generate rationales that justify the decisions of an AI agent. Below is the neural architecture as a teaser (Fig 2, adapted from [2]).
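To make the translation framing a little more concrete, here is a minimal Python sketch of how agent behavior and human explanations could be turned into a parallel corpus, the kind of paired data an NMT model learns from. Everything here is a hypothetical illustration: the grid-world `Step` record, the `direction:thing` token format, the function names, and the toy example are assumptions, not the state encoding used in the paper, and the neural encoder-decoder itself is omitted.

```python
from dataclasses import dataclass


@dataclass
class Step:
    """One agent step in a hypothetical grid world: what the agent sees and what it does."""
    observations: dict[str, str]  # direction -> object, e.g. {"up": "car"}
    action: str                   # e.g. "wait"


def to_source_sentence(step: Step) -> list[str]:
    """Serialize state and action into tokens: the 'source language' of the translation."""
    tokens = [f"{direction}:{thing}" for direction, thing in sorted(step.observations.items())]
    tokens.append(f"action:{step.action}")
    return tokens


def to_target_sentence(rationale: str) -> list[str]:
    """Lowercase, whitespace-tokenized human rationale: the 'target language'."""
    return rationale.lower().split()


def build_parallel_corpus(examples: list[tuple[Step, str]]) -> list[tuple[list[str], list[str]]]:
    """Pair each serialized agent step with the rationale a human gave for the same behavior."""
    return [(to_source_sentence(step), to_target_sentence(text)) for step, text in examples]


# Invented toy example: an NMT model would be trained on many such pairs
# and, at run time, "translate" a new agent step into a rationale.
corpus = build_parallel_corpus([
    (Step({"up": "car", "left": "empty"}, "wait"), "I waited because a car was coming"),
])
source, target = corpus[0]
print(source)  # ['left:empty', 'up:car', 'action:wait']
print(target)  # ['i', 'waited', 'because', 'a', 'car', 'was', 'coming']
```

The point of the sketch is the shape of the data: once behavior is written down as a "sentence" in its own vocabulary, producing a rationale becomes a sequence-to-sequence problem like translating between two languages.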

Curious to know more? Check out the paper "Rationalization: A Neural Machine Translation Approach to Generating Natural Language Explanations".

We can think of this phase as an existence proof: can we do this? Short answer: yes. How do we know? We mainly used procedural evaluation (BLEU score) to establish the accuracy of the generated rationales. Connecting to our cybersecurity example, the company hired me when it was at this stage: it had an existence proof of its explainability technique using a similar approach.
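BLEU, borrowed from machine translation, scores a generated sentence by its n-gram overlap with human-written reference sentences. As a rough illustration of what that procedural evaluation measures, here is a from-scratch, unsmoothed sentence-level BLEU in Python (clipped n-gram precision, geometric mean, brevity penalty). It is a sketch for intuition only and not necessarily the exact BLEU variant or tooling used in the study; the example sentences are invented, and real evaluations would typically rely on an established implementation such as sacreBLEU.

```python
import math
from collections import Counter


def ngrams(tokens: list[str], n: int) -> Counter:
    """Count every contiguous n-gram in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def modified_precision(candidate: list[str], references: list[list[str]], n: int) -> float:
    """Clipped n-gram precision: a candidate n-gram is credited at most as many
    times as it occurs in any single reference."""
    cand_counts = ngrams(candidate, n)
    total = sum(cand_counts.values())
    if total == 0:
        return 0.0
    max_ref_counts: Counter = Counter()
    for ref in references:
        for gram, count in ngrams(ref, n).items():
            max_ref_counts[gram] = max(max_ref_counts[gram], count)
    clipped = sum(min(count, max_ref_counts[gram]) for gram, count in cand_counts.items())
    return clipped / total


def sentence_bleu(candidate: list[str], references: list[list[str]], max_n: int = 4) -> float:
    """Unsmoothed sentence-level BLEU with uniform weights over 1..max_n-grams."""
    precisions = [modified_precision(candidate, references, n) for n in range(1, max_n + 1)]
    if min(precisions) == 0.0:
        return 0.0  # the geometric mean collapses to zero without smoothing
    c = len(candidate)
    # Effective reference length: the reference closest in length to the candidate (ties -> shorter).
    r = min((abs(len(ref) - c), len(ref)) for ref in references)[1]
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    log_mean = sum(math.log(p) for p in precisions) / max_n
    return brevity_penalty * math.exp(log_mean)


candidate = "i waited because a car was coming".split()
reference = "i waited for the car to pass".split()
print(round(sentence_bleu(candidate, [reference], max_n=2), 3))  # 0.267
```

Here 3 of 7 unigrams and 1 of 6 bigrams match and the lengths are equal, so the score is the square root of (3/7)(1/6), about 0.267. A high score says a rationale resembles human-written ones; it says nothing about whether people actually find it plausible, which is the question the next phase takes up.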

Turn to the human:

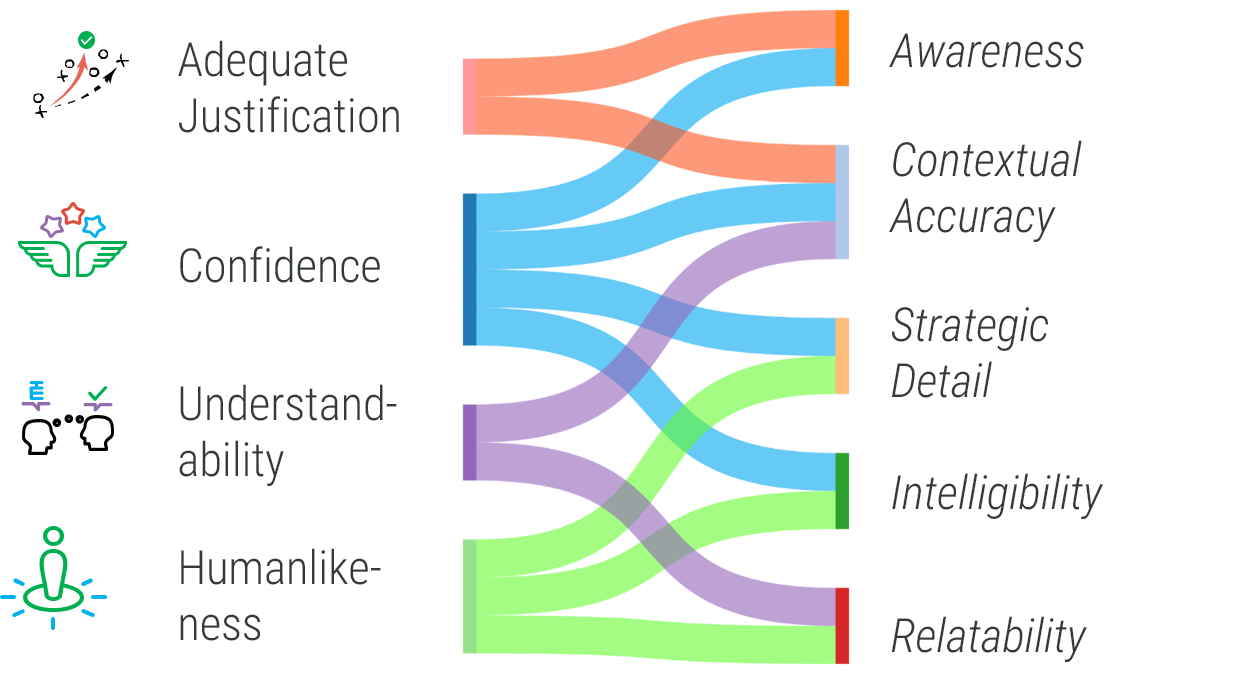

In the second phase, the training wheels come off, advancing the XAI discourse towards a human-centered one. Now that we knew we could generate accurate rationales, the question became: do humans find them plausible? How do we evaluate for human-centered plausibility? To address these questions, we conducted two mixed-methods user studies to evaluate the viability of the rationales. We were also able to tweak the neural architecture to generate two styles of rationales with different characteristics. Both studies showed that the generated rationales were viable; more importantly, they allowed us to disentangle and understand the relationships between the human factors governing user perceptions. Here is a picture (Fig 3, taken from Ehsan & Riedl's 2019 paper [3]) of the relationships between the dimensions and components. I don’t expect you to fully unpack this from the image, but you can appreciate the complexity of the relationship.

In this phase, we learned something crucial for Human-centered XAI— how the two processes (technological development in XAI and understanding of human-factors) co-evolve. As our technological perspectives improved, so did our understanding of “who” the human is.

Moreover, as the metaphorical picture of the user became clearer, other people and objects in the background also came into view. This new perspective demands the ability to incorporate all parties into the picture. It also informs what the next generation of technological development needs in order to further refine our understanding of the “who”. This work serves as the bridge to the turn to the sociotechnical.

Curious to know more? Check out the paper: Automated Rationale Generation: A Technique for Explainable AI and its Effects on Human Perceptions.

Bridge: Before we turn to the sociotechnical, some important reflections. Both phases taught us a lot; most importantly, they highlighted epistemic blind spots that would not have been obvious had we not gone through them (hence the importance of sharing the journey). For instance, our current paradigm of investigation primarily accommodates a 1-to-1 Human-AI interaction, which is inadequate for multi-stakeholder real-world problems such as the cybersecurity example, where there are developers, solution architects, product managers, and others. Given that AI systems are socially situated, how might we incorporate different types of stakeholders, that is, different “who”s? The “turn to the human” revealed that a 1-to-1 Human-AI paradigm was not enough to address the complex sociotechnical challenges of XAI. We needed a conceptual expansion.

Turn to the sociotechnical: Reflective Human-centered XAI

To address this, we need to build and expand on our current conception of XAI—here, I propose Reflective Human-centered XAI (HCXAI), a sociotechnically informed mindset that is grounded in critical AI studies and HCI. It begins with two key properties: (1) a domain that is critically reflective of (implicit) assumptions and practices of the field, and (2) one that is value-sensitive to both users and designers. Here, critical reflection [4] can highlight our intellectual blind spots, which can expand our design space. Value-sensitivity enables human-centered approaches to address our blind spots.

By bringing the unconscious aspects of experience to our conscious awareness, reflection makes them actionable. To help with critical reflection, we can situate XAI as a Critical Technical Practice (CTP). AI pioneer Phil Agre coined the term CTP to describe a design philosophy where we critically reflect on (challenge) the status quo (e.g., established practices) to highlight “marginal” insights. These marginal insights can potentially help overcome recurrent impasses and generate alternative technology. Moreover, the very design of new technology can be used as a method to critically understand technology’s relationship to society. As a result, Reflective HCXAI encourages us to question the status quo: for instance, by perpetuating the dominant algorithm-centered paradigm in XAI, what perspectives are we missing? How might we incorporate the marginalized perspectives to embody alternative technology? In this case, a dominant XAI approach can be thought of as “algorithm-centered,” in that it privileges technical transparency. Such a viewpoint can limit the conceptual landscape (the epistemic canvas) of Explainable AI by equating explainability with model transparency alone. A predominantly algorithm-centered approach to XAI could, in theory, be effective if explanations and AI systems existed in a vacuum, devoid of situated context. They do not: explanations and AI systems are always embedded in a situated context.

Curious to know more about CTP and Reflective HCXAI? Check out this paper: "Human-centered Explainable AI: Towards a Reflective Sociotechnical Approach"

Grounding Reflective HCXAI:

But how might we operationalize this perspective? Here, we can utilize rich strategies from HCI like participatory design and value-sensitive design (the full paper has more details with examples). By helping us act on our reflections, these strategies can break new ground in the expanded design space that emerges through critical reflection.

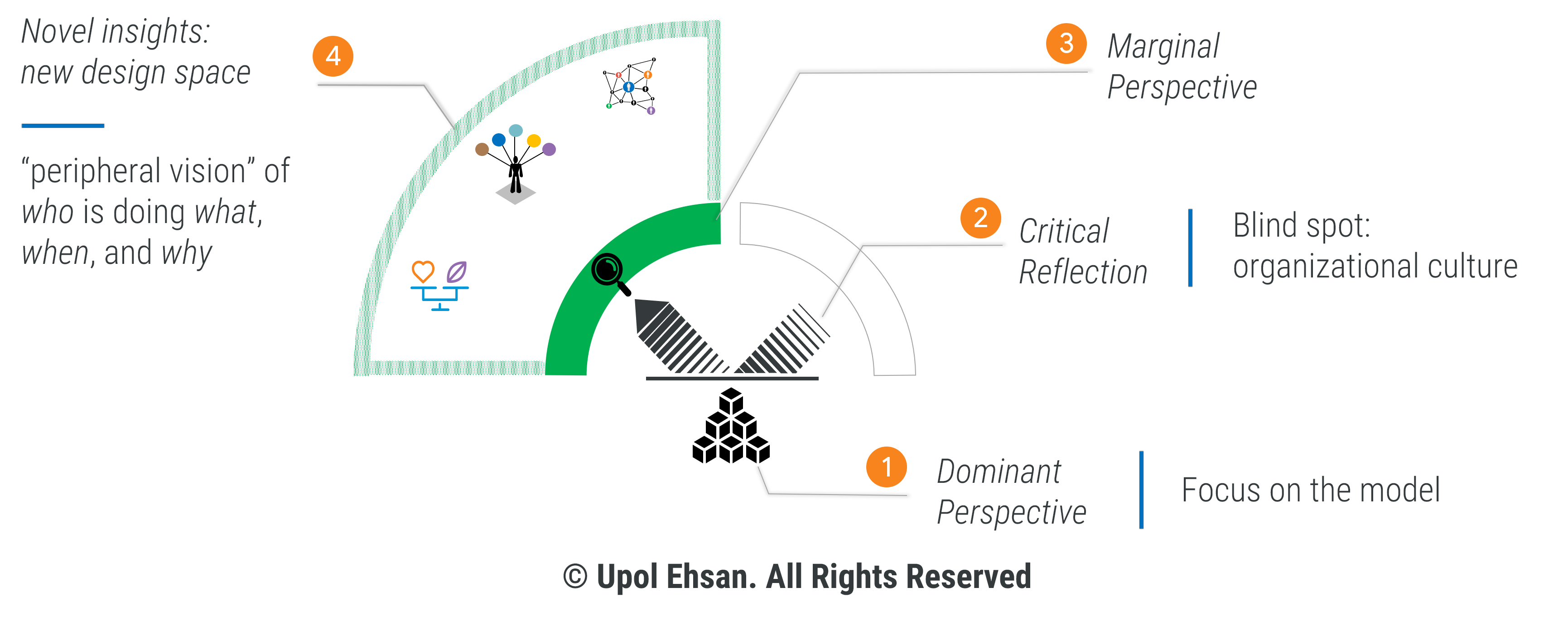

All of this might seem a bit abstract, so let’s ground things by returning to the cybersecurity story. With the help of the diagram in Figure 4, I will share how I used a Reflective HCXAI perspective to troubleshoot the issue and resolve it.

First, we start with the dominant paradigm or status quo: what’s the dominant way of doing things? [(1) in the diagram] In this case, all our attention was on the model or algorithm. It was as though, if we could just open the opaque box “better,” all our problems would be solved. However, remember that there was little to no engagement from the users. Now that we have identified the dominant perspective, what’s the first thing the framework asks us to do? Critically question the status quo and reflect on it: by continuing to do things the way they are currently done, what perspectives are we missing?

How did I do this critical reflection? [(2) in the diagram] I interviewed and surveyed stakeholders and conducted ethnographic fieldwork with them. This process of critical reflection brought key missing insights (intellectual blind spots) from the unconscious to conscious awareness, something I call a “marginal perspective” [(3) in the diagram]. The blind spot, in this case, contained facets of organizational culture that were (a) ignored in the design of the system and, more importantly, (b) dictating user behavior (lack of engagement). By questioning the status quo, I identified the marginal insights, or blind spots.

What does HCXAI ask us to do next? Address these blind spots in a way that is value-sensitive to both end users and designers (in this case, my research team). How did I do that? [(4) in the diagram] I used the strategies outlined in the paper: participatory design sessions and value elicitation exercises with my stakeholders. These strategies are contextually situated, and researchers should adapt them to their domain; this is not a one-size-fits-all prescriptive approach. Exploring the blind spot gave me a new design space to work in, one that went beyond the algorithmic bounds of the previous paradigm.

Exploring this expanded design space, I found something rather surprising: the problem was not with the algorithm’s “performance” per se, but with how accountability was distributed in this Human-AI collaboration. To the analysts, the machine had no skin in the game. The AI would not get fired if it was wrong; humans would suffer the consequences instead. In other words, they felt that accountability was displaced: the cost of failure fell entirely on the humans. Why trust something that has immunity and nothing to lose? To my stakeholders, the situation was unfair. Given there was no legitimate way to contest the machine, it was best to ignore (or not engage with) it altogether; hence, no actions or engagement. It was clear that algorithmic or technical transparency alone was not enough to drive informed actionability and engagement.

My stakeholders wanted something more than technical transparency. They wanted “peripheral vision” into what else was going on — a digital trajectory of past interactions of peers (who had skin in the game) with similar AI recommendations. They wanted to know who did what, when, and why, something I dub Social Transparency (ST). Long story short, once we implemented a version of ST, engagement shot through the roof. Previously, without ST, there was individual vulnerability; now, with ST, they had distributed accountability. This is something the algorithm-centered XAI view could not accommodate.
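The article does not describe how the project's version of ST was built, but the core idea (surface who did what, when, and why on similar recommendations) maps naturally onto a small record type. Below is a minimal Python sketch under stated assumptions: the `SocialTrace` record, its field names, the `peer_context` helper, and the toy history are all hypothetical, and exact matching on a recommendation label is a deliberately simple stand-in for whatever notion of "similar" a real system would use.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class SocialTrace:
    """One peer's past interaction with an AI recommendation: who did what, when, and why."""
    who: str             # analyst who acted on the recommendation
    what: str            # their decision, e.g. "accepted" or "rejected"
    when: datetime       # when they acted
    why: str             # the reason they recorded, in their own words
    recommendation: str  # kind of recommendation, e.g. "close-port"


def peer_context(history: list[SocialTrace], recommendation: str, limit: int = 3) -> list[SocialTrace]:
    """Return the most recent peer traces for the same kind of recommendation, newest first.

    Exact matching on `recommendation` is a simplifying stand-in for a real similarity measure.
    """
    similar = [t for t in history if t.recommendation == recommendation]
    return sorted(similar, key=lambda t: t.when, reverse=True)[:limit]


# Invented toy history, for illustration only.
history = [
    SocialTrace("alice", "rejected", datetime(2021, 3, 1), "port still used by a partner integration", "close-port"),
    SocialTrace("bob", "accepted", datetime(2021, 3, 5), "no traffic on this port for months", "close-port"),
    SocialTrace("chen", "accepted", datetime(2021, 3, 7), "duplicate of an existing rule", "merge-rules"),
]

# Shown next to the AI's own explanation when an analyst sees a new "close-port" recommendation.
for trace in peer_context(history, "close-port"):
    print(f"{trace.when:%Y-%m-%d}  {trace.who} {trace.what}: {trace.why}")
```

Note that nothing in the sketch touches the model: the extra context comes entirely from people's recorded decisions, which is why this kind of transparency sits outside the opaque box.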

Explainability is not just technical transparency:

The applied Reflective HCXAI perspective taught me an important lesson about the notion of explainability. It was clear that, regardless of how good the model is, technical transparency alone might not be enough to empower users to take informed actions (actionability is one of the core goals of explainability). The reflective perspective transformed my very understanding of explainability such that it was no longer opaque-box bound, algorithm-centered, and limited to technical transparency. It showed that things that ultimately matter might actually be outside the opaque box. In this case, expanding explainability to include Social Transparency was the key to the puzzle. Cybersecurity isn’t the only domain where the Reflective HCXAI perspective has helped me; it has already paid dividends in ongoing projects in healthcare (radiology) and commerce (sales).

Curious to know how we can conceptualize explainability beyond technical transparency? Here is a recent paper introducing the notion of Social Transparency in AI systems: "Expanding Explainability: Towards Social Transparency in AI systems"

Moving ahead with Reflective HCXAI:

With the basics of Reflective HCXAI in mind, I will briefly touch on the future and what we can do to foster a robust era of Human-centered XAI. I will start with one of my favorite quotes from Phil Agre, commenting on how a Critical Technical Practice (CTP) lens demands a dual identity:

“At least for the foreseeable future, [we will] require a split identity – one foot planted in the craft work of design and the other foot planted in the reflexive work of critique”

Given that Reflective HCXAI is mediated by a CTP lens, it is, by construction, a work in progress (at least for the foreseeable future). We will always hone the design of XAI systems while at the same time reflexively engaging in their critique. I want to take a moment to highlight that Agre is asking us to engage in critique, not criticism. The process is not meant to tear down or criticize efforts; rather, it is an iterative one where there is an interplay between highlighting our intellectual blind spots and addressing them. Also, by design, Reflective HCXAI does not take a normative stance or espouse a singular path to the sociotechnical perspective. It is not a prescriptive framework; rather, it is best conceived as an ethos of design. Considering the proliferation of work in XAI, my proposal of Reflective HCXAI should not be interpreted as a treatise or an end of discussion; rather, in the spirit of Agre’s split identity of design and critique, this is just the beginning. In fact, there are likely blind spots I am missing! As a result, I invite the community to join us to extend and refine the framework. Together, we can build a future of XAI whose epistemic canvas is not bound by the limits of the algorithms, but one that treats explainability for what it is: a human factor.

So far, I have been invited to speak about Reflective HCXAI in multiple venues, from Fortune 100 companies to universities to startup meetups. In non-academic settings, one of the most common and important questions I get is: how can we empower creators and designers of XAI systems to adopt this Reflective HCXAI mindset, especially considering the organizational forces at play?

Before I address how we can empower creators of AI systems, the “organizational forces” part needs attention. Implicit in it is the reality of market forces: for better or worse, there is a dominant impulse toward what I call a “fast and furious” development mindset when it comes to AI systems. Speed and scale are somehow considered unquestionable virtues in computing. Unfortunately, fast and furious processes can also lead to reckless decisions and harmful outcomes (e.g., minorities being discriminated against by algorithmic decisions). A Reflective HCXAI perspective, by encouraging critical reflection, advocates for a more thoughtful and thorough approach to design, one that does not move at the speed of “fast and furious” AI design. As a result, in organizational settings, it runs up against an understandable pressure to move fast. Sadly, the “move fast and break things” mentality has gotten us to our current situation around algorithmic injustice. If we adopt a similar mindset for explainability, the very thing that is supposed to help us fight injustice and empower users, we risk exacerbating the issues. Therefore, it is important to pause and reflect on our response to market forces: just because we can do something does not mean we should, especially considering the disproportionate harm AI systems can do. Here, organizational culture and leadership matter: no matter how well-intentioned the creators are, if their incentives are misaligned, no amount of Reflective HCXAI can help.

Now, coming back to the operational (and somewhat less challenging) part of how we might empower AI creators: it is important that we cultivate the dual-identity mindset that Agre mentioned. Having design cultures with “one foot” planted in the development of HCXAI systems and the “other foot” in the constructive critique of one’s own methods is crucial. We cannot empower creators if we do not give them permission to have this “work-in-progress” mindset; any notion of a “finished” product will impede critical reflection. I have already witnessed a few top AI teams adopt this Reflective HCXAI mindset, allowing themselves to follow up every release of a product with an explicit critique of it. This has not only improved the quality of the XAI product but also allowed them to take a slow and steady approach to XAI development rather than a potentially harmful fast and furious one. Interestingly, the slow and steady approach has paid more dividends in terms of mitigating harms; therefore, there is also a business case to be made for the Reflective HCXAI perspective.

Another important question I get is: from a skills perspective, how should I set up my team so that we can build better human-centered XAI products or systems? There are two key components to the answer. First, human-centered work requires active translational work from a diverse set of practitioners and researchers. This means that, compared to T-shaped researchers and practitioners (who have intellectual depth in one area), we need more Π-shaped ones who have depth in two (or more) areas and thus the ability to bridge the domains. Second, and more importantly, we cannot do meaningful HCXAI work if our teams are monolithic and homogeneous in their setup. In setting up diverse teams, the most important part is to ensure that non-CS or non-AI members (psychologists, anthropologists, policy experts, etc.) are not treated as second-class citizens of the team. There needs to be humility from computer scientists (like myself) that code alone cannot solve everything. Therefore, we need to learn how to work with domain experts, not only expecting them to learn how to code and speak “our language” but also meeting them halfway by investing time to critically read their bodies of work. Diversity does not only mean intellectual diversity; there has to be a concerted and intentional effort to set up teams that have demographic diversity, ensuring under-represented voices have a space to be heard. This is not just the right thing to do but also an efficient way of creating meaningful products.

Parting thoughts:

Wrapping things up: Explainability is an inherently human-centered problem where we cannot afford a technocentric view. Incorporating sociotechnical elements requires conceptual reframing. Here, Reflective HCXAI – a sociotechnically informed perspective—can help by disentangling the intricate challenges around AI systems. By situating XAI as a Critical Technical Practice, critical reflection can highlight our intellectual blind spots, which can expand our design space [23]. Addressing the blind spots in a value-sensitive manner fosters human-centered design of XAI systems. The reflexive mindset can set up an iterative cycle where we carefully study how the human factors interplay with the technical components, creating an XAI that is not just bound by and centered around the algorithm, but one that fully celebrates and can incorporate the “messy” contexts in which real AI systems live.

We started with the Algorithm-Centered myth: If you can just open the black box, everything will be fine.

Here is my response: Not everything that matters lies inside the black box of AI.

Critical answers can lie outside it. Because that’s where the humans are.

Author Bio:

Upol Ehsan is a philosopher and computer scientist by training and a technologist by trade. His current work focuses on the explainability of AI systems from a human-centered perspective. Bridging his background in philosophy and engineering, his work resides at the intersection of AI and HCI with a focus on designing explainable, encultured, and ethical technology. He actively publishes in peer-reviewed venues like CHI, IUI, and AIES, where his work has won multiple awards. He organizes events that connect the HCI and AI communities, including leading the organization of the first workshop on Human-centered XAI at CHI 2021. He is currently a Doctoral Candidate in Computer Science at the School of Interactive Computing at Georgia Tech. He graduated summa cum laude, Phi Beta Kappa from Washington & Lee University with dual degrees in Philosophy (B.A.) and Engineering (B.S.).

Outside academia, he is a social entrepreneur and has co-founded DeshLabs, a social innovation lab focused on fostering grassroots innovations in emerging markets like Bangladesh.

Twitter: @upolehsan

References

[1] Ehsan, U., Harrison, B., Chan, L., & Riedl, M. O. (2018, December). Rationalization: A neural machine translation approach to generating natural language explanations. In Proceedings of the 2018 AAAI/ACM Conference on AI, Ethics, and Society (pp. 81-87).

[2] Ehsan, U., Tambwekar, P., Chan, L., Harrison, B., & Riedl, M. O. (2019, March). Automated rationale generation: a technique for explainable AI and its effects on human perceptions. In Proceedings of the 24th International Conference on Intelligent User Interfaces (pp. 263-274).

[3] Ehsan, U., & Riedl, M. O. (2020, July). Human-centered Explainable AI: Towards a Reflective Sociotechnical Approach. In International Conference on Human-Computer Interaction (pp. 449-466). Springer, Cham.

[4] Sengers, P., Boehner, K., David, S., & Kaye, J. J. (2005, August). Reflective design. In Proceedings of the 4th decennial conference on Critical computing: between sense and sensibility (pp. 49-58).

Citation

For attribution in academic contexts or books, please cite this work as

Upol Ehsan, "Towards Human-Centered Explainable AI: the journey so far", The Gradient, 2021.

BibTeX citation:

@article{xaijourney,
  author = {Ehsan, Upol},
  title = {Towards Human-Centered Explainable AI: the journey so far},
  journal = {The Gradient},
  year = {2021},
  howpublished = {https://thegradient.pub/human-centered-explainable-ai/},
}