Researchers get credit for writing papers. If you’re a professor, the number of accepted papers determines whether you’ll get tenure. If you’re a student, it determines if and when you can graduate, as well as your future industry or academic job prospects.

A paper is meant to be a detailed manuscript, a how-to guide, for understanding and replicating a research idea. But papers don’t always tell the full story: researchers often leave out some details or obfuscate their method, driven by the desire to please their future reviewers. A researcher’s understanding of their paper also evolves over time, as they run further experiments and talk with other researchers, and this evolution is rarely documented publicly (unless it provides enough material to write another paper). This isn’t because researchers are bad people; the incentives just aren’t there to spend valuable time writing about these new developments.

Machine learning is a field where what is considered ‘publishable’ is beginning to change. ReScience publishes replications of previous papers, and Distill exclusively publishes excellent expositions of interesting ideas. Researchers are also starting to be recognized for producing high-quality blog posts, and writing readable code that easily reproduces their results.

What the community still lacks, though, are incentives for publicly documenting our real thoughts and feelings about our past papers. I often talk about my past work more candidly with my friends and colleagues than I do in my published work, and I’m more willing to own up to its flaws and limitations. But many aspiring researchers don’t have the means to attend conferences to have such honest conversations with the people whose work they’re building on.

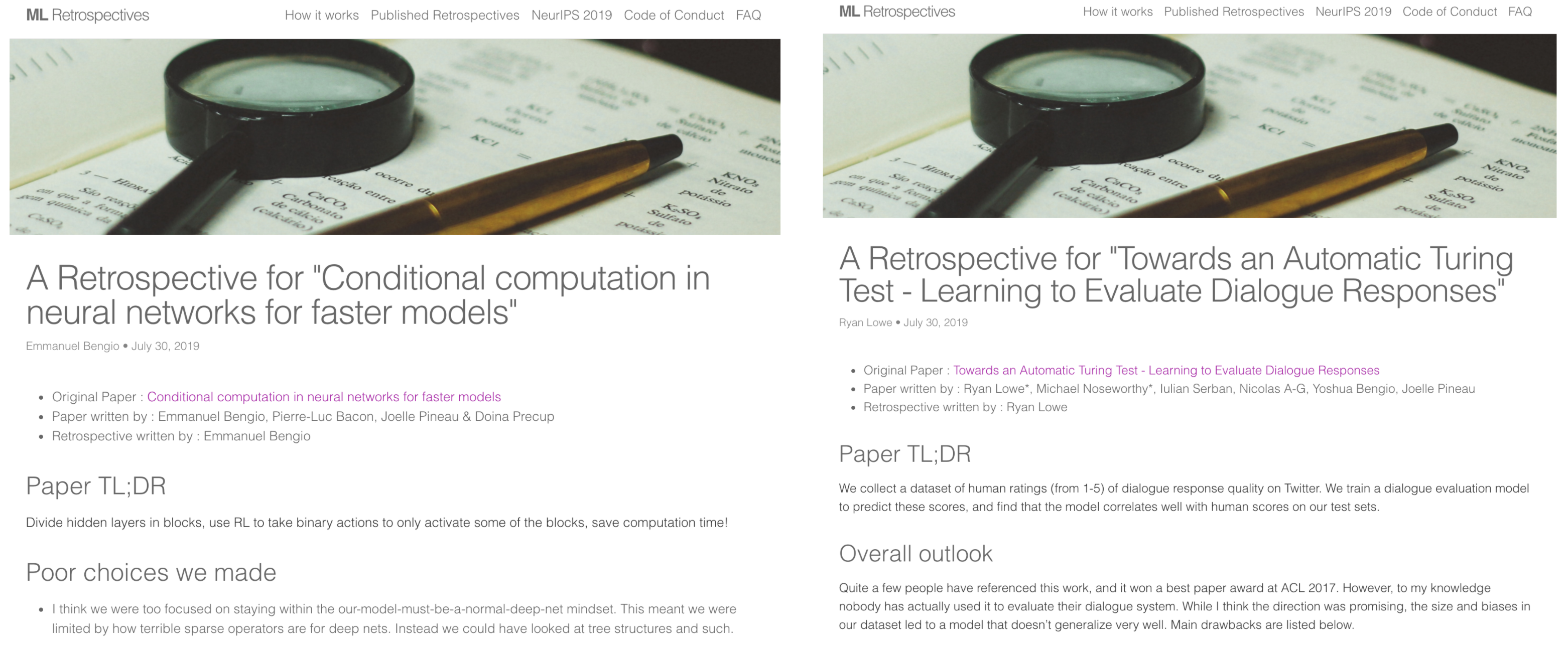

Today, we’re launching ML Retrospectives, a website for hosting reflections and critiques of researchers’ own past papers that we’re calling retrospectives. We’re also announcing the NeurIPS 2019 Retrospectives workshop, where high-quality retrospectives can be published.

ML Retrospectives is an experiment: we’re not sure what the right incentives are to get researchers to write retrospectives, and how we can make them as useful as possible to the ML community. Working together to figure this out is one of the goals of our NeurIPS workshop. We’re hoping that having a platform for retrospectives will encourage researchers to start talking about their past work more openly and honestly, and share new insights about their papers in real time.

We think ML Retrospectives has the potential to shift publishing norms in the machine learning community. We’re excited to see what you do with it.

Paper debt

ML Retrospectives is built on an idea I’ll call ‘paper debt’. Paper debt is the difference in available knowledge between a paper’s author and a paper’s reader; it’s all of the experiments, intuition, and limitations that the author could have written in their paper, but didn’t. Paper debt is a specific form of ‘research debt’ --- the difference in knowledge between a layperson and an expert in a field --- first described by Chris Olah & Shan Carter upon releasing the Distill journal.

Paper debt can accumulate in various ways. Sometimes, we researchers are forced to leave out some intuition or experiments for brevity. Other times, we make subtle obfuscations or misdirections. Lipton & Steinhardt describe some of these in “Troubling Trends in Machine Learning Scholarship”: papers often fail to differentiate between speculation and explanation, hide the source of empirical gains, and add unnecessary equations to make the methods look more complex.

More blatant omissions are also common in paper-writing. For example, if the authors performed additional experiments on other datasets that didn’t pan out, this is usually omitted from the paper even though it would be extremely useful for other researchers to know. Similarly, authors may choose inferior hyperparameters for their baseline models, which is often not apparent unless they fully describe their hyperparameter selection process.

Incentives encouraging paper debt exist because researchers write papers partly to please their future reviewers. This makes sense: researchers are evaluated by the number of papers they publish at top-tier conferences and journals. Obfuscating weaknesses and omitting negative results improves the paper in the eyes of the reviewers, thus making it more likely to pass peer review.

A final force that’s vital in driving the increase in paper debt is time. As paper authors perform additional experiments and have conversations with other researchers in the field, they develop a better understanding of their work. Sometimes, authors will update their paper on arXiv if the changes are modest enough, but more often these findings simply remain in the minds of the authors. After all, it takes effort to incorporate these findings neatly into the narrative arc of your paper, and it’s unclear how many people will notice what you’ve added unless they’re reading the paper for the first time.

Paper debt is a supreme waster of researchers’ labour. Nowadays, part of reading papers is trying to decipher which claims are technically sound. It’s not uncommon to read a paper in machine learning and think: “Okay, what are they trying to hide? What secret trick do you need to actually get this to work?” For many researchers, this skepticism has been hard-earned trying to build on top of cool ideas that simply didn’t work as advertised. What’s amazing is how often this happens without us thinking twice about it. We’ve grown accustomed to such a large amount of paper debt that all of this second guessing seems normal.

Imagine if, for every published paper, the authors described in as full and honest detail as possible all of the things that worked and didn’t work. How incredible would that be? That’s the future we’re aiming towards, and we’re hoping that ML Retrospectives is a concrete step in this direction.

How it started

ML Retrospectives started with the realization that some of my own previous papers had accumulated paper debt.

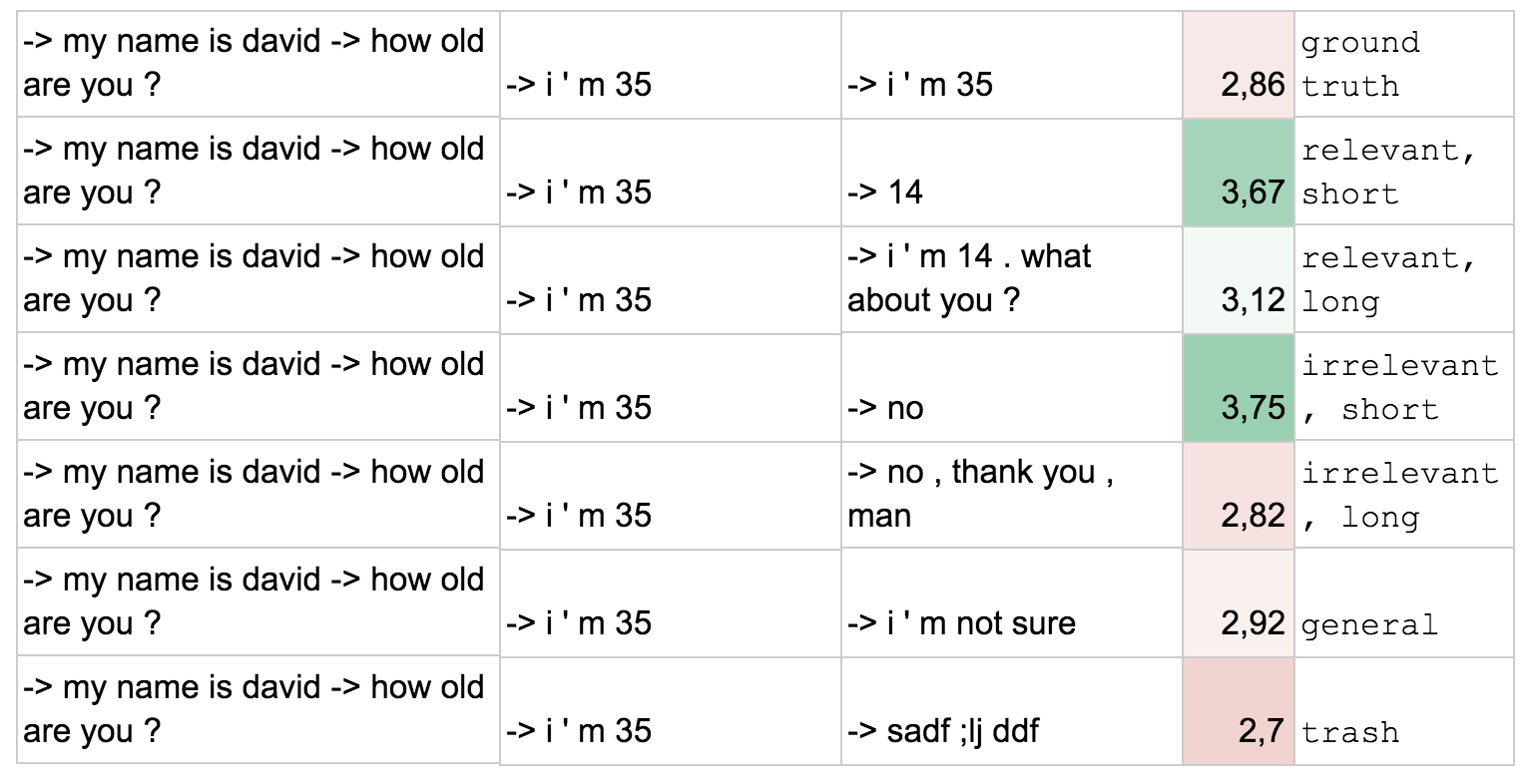

In 2017, I co-authored a paper about training a neural network (which we called ‘ADEM’) to automatically evaluate the quality of dialogue responses. The paper was accepted to ACL, a top-tier NLP conference, and won an ‘Outstanding Paper’ award. In many ways I was proud of that paper --- I felt (and still feel) that dialogue evaluation is an under-studied problem, and this paper was an interesting step towards a solution.

But I also had some shreds of guilt. In the year after the paper was released, I had several discussions with other researchers who were trying ADEM for dialogue evaluation, and found that it didn’t work very well for their datasets. I then received an email from a student who had run some sanity checks on data they created by hand. The student was concerned since ADEM seemed to fail some of the sanity checks; it consistently rated shorter responses better than longer responses, even if the shorter responses didn’t make sense.

While these results were new to me, I already had a sneaking suspicion that ADEM might not generalize well to other datasets. After first training ADEM, I noticed that it consistently rated shorter responses higher. We found this was due to a bias in our MTurk-collected dataset, and tried to remedy it by over / under-sampling. We talk about this bias, and our attempted solution, in the paper. What I didn’t mention in the paper was that I had also tried ADEM on a dataset collected for a different project, and found that it didn’t perform very well. I take full responsibility for this lack of disclosure in the paper; at the time I figured that it was due to a difference in the data collection procedure, and dismissed it. After all, what really mattered was that ADEM generalized on the test set, right?

As a result of these conversations, I found myself describing my research in a very different way than I had written about it in the paper: “Oh yeah, it’s a cool idea, but I wouldn’t actually use it to evaluate dialogue systems yet.” I was happy to discuss the limitations of ADEM in personal conversations. But I was hesitant to update the paper on arXiv; I didn’t want to take the time to run rigorous additional testing, and including informal conversations or ad-hoc results in a Google Sheet sent by a student I didn’t know seemed unreasonable. So I waited, trying to hold the creeping tendrils of guilt and self-judgement at bay, until something could come along to change my mind.

What eventually shifted my perspective was a realization: I didn’t have to feel shame for these inconsistencies in my past papers. When I wrote those papers, I simply had a different level of diligence than I do now. That doesn’t make me a bad person, it just means that I’m improving as a scientist over time. Just like everyone else.

With the clearing of this emotional weight it became easier to look at my past papers. What did I want to do about the accumulation of paper debt? I thought about writing a personal blog post about it, but after discussions with my supervisor Joelle and others at Mila and Facebook AI Montreal, I was convinced to try and make it bigger. That is now a reality, thanks to a fantastic team across a range of research institutions.

ML Retrospectives

What we came up with was ML Retrospectives.

ML Retrospectives is a platform for hosting retrospectives: documents where researchers write honestly about their past papers. Writing a retrospective is a simple way to help reduce paper debt, since you get to describe what you really think about your paper. We wanted retrospectives to be less formal than a typical paper, so we made them more like blog posts, and put the whole website on Github. To submit a retrospective, you simply make a pull request to our repository.

Why write a retrospective? In the same way that publishing code shows that you care about reproducibility, writing a retrospective shows that you care about honestly presenting your work. Retrospectives can be short --- taking 30 minutes to write down your thoughts about your paper can have a large impact for those who read it. They also don’t have to be negative; you can write about new perspectives that you’ve developed on your work since publication, which can breathe new life into your paper in light of more recent developments in the field. Retrospectives submitted before September 15 can also be considered for publication in the NeurIPS 2019 Retrospectives workshop.

We decided to open up retrospectives only to authors of the original paper. While a venue for critiquing other people’s papers might also be valuable, we wanted to focus on normalizing sharing drawbacks of your own past papers. For this first iteration, submissions to ML Retrospectives will not be officially peer reviewed, but we’re considering starting a journal for high-quality retrospectives if we get enough interest.

This ML Retrospectives experiment is asking a research question: what happens when we create a platform that encourages researchers to be open and vulnerable about their past work? While we’re excited about making self-reflection (an important form of scholarship) publishable through our NeurIPS workshop, in the longer term we want to build cultural norms around sharing research honestly. That doesn’t mean shaming those who have made errors when writing papers in the past, but rather celebrating those who have the courage to speak up and share openly.

Looking forward

Doing science is important. By figuring out how the world works, we can build things that make our lives better. But it’s also important to look at the scientific process itself; if the incentives of individual researchers aren’t aligned with improving the scientific understanding of the field, we get situations where the majority of papers in an entire field fail to replicate. ML Retrospectives is one small iteration on the scientific process, and we hope to see other researchers try things that they think will be beneficial for the community.

The ultimate goal of retrospectives is to make research more human. That means getting researchers to write papers like how they’d talk to a friend. It means making research transparent and accessible for everyone. And it means creating an environment in the machine learning community where it’s okay to make mistakes.

After all, in this scientific endeavor, we’re all on the same team.

Ryan Lowe is a PhD student at McGill University & Mila, supervised by Joelle Pineau. His research covers topics in dialogue systems, multi-agent reinforcement learning, and emergent communication. He’ll hopefully be graduating soon.

Special thanks to the Retrospectives team: Koustuv Sinha, Abhishek Gupta, Jessica Forde, Xavier Bouthillier, Peter Henderson, Michela Paganini, Shaghun Sodhani, Kanika Madan, Joel Lehman, Joelle Pineau, and Yoshua Bengio, for help making ML Retrospectives a reality. Thanks also to Natasha Jensen for the slogan idea and website design feedback, as well as Emmanuel Bengio, Michael Noukhovitch, Jonathan Binas, and others for earlier conversations about retrospectives. Finally, thanks to Joel Lehman, Evgeny Naumov, Hugh Zhang, and Kanika Madan for comments that helped shape this article.

If you enjoyed this piece and want more, subscribe to the Gradient and follow us on Twitter!