This past Valentine's day, OpenAI dropped two bombshells: a new, state-of-the-art language model and the end of its love affair with open source.

Some context: in what has been dubbed the "Imagenet moment for Natural Language Processing", researchers have been training increasingly large language models and using them to "transfer learn" other tasks such as question answering and sentiment analysis. Examples include fast.ai's ULMFit [1], OpenAI's GPT [2], AI2's ELMO [3], Google's BERT [4], and now the GPT-2, a scaled up version of the original Generative Pre-Training Transformer (GPT).

Nothing is surprising about OpenAI publishing new research with impressive results. What makes this announcement special is OpenAI's deliberate decision not to fully open source their work [5], citing fears that their technology would be misused for "malicious applications" such as spam or fake news. This sparked a debate on online forums like Twitter and Reddit, as well as a flurry of media coverage on how AI research was becoming "too dangerous to release."

While OpenAI is correct to be concerned about potential misuse, I disagree with their decision not to open source the GPT-2. To justify this, I first argue that only certain types of dangerous technology should be controlled by suppressing access. Then, on the basis of this analysis, I argue that withholding the full GPT-2 model is both unnecessary for safety reasons and detrimental to future progress in AI.

Deceptive and Destructive Technologies

I broadly classify modern technology with potential for misuse as either destructive or deceptive technology. Destructive technologies operate primarily in the physical realm. Think chemical weapons, lab-engineered super viruses, lethal autonomous weapons, or the atom bomb.

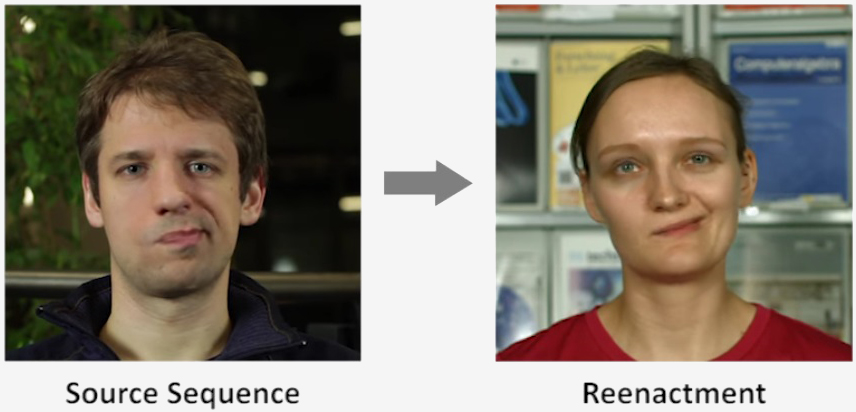

On the other hand, deceptive technologies operate primarily in the realm of our minds and can potentially be misused to manipulate and control people on a broad scale. Think deepfakes, Photoshop, or looking back in history, the Internet or the printing press. With the notable exception of autonomous weapons, fears around AI misuse tend to fall into this category.

For sufficiently dangerous destructive technologies, the only way to protect society is to heavily restrict access (e.g. Uranium for nuclear weapons) and knowledge (e.g. instructions for creating antibiotic-resistant bacteria). Merely refusing to publish the details around a dangerous technology is insufficient without other control mechanisms: the rapid pace of technological progress ensures that, unless research is forcefully stopped by some external force, any discovery will be independently replicated in a matter of years, if not months. Suppressing a technology in this fashion is extremely heavy-handed and far from foolproof. There is always some chance that a terrorist will scrounge up enough radioactive material to make a crude dirty bomb, but we currently have no other choice: Earth would be a graveyard if an atom bomb could be assembled from parts and instructions easily obtainable online.

However, with deceptive technologies, there is an alternative, more effective option. Instead of suppressing a technology, make knowledge of its power as public as possible. While counterintuitive, this method of control relies on realizing that deceptive technologies lose most of their powers if the public is broadly aware of the potential for manipulation. While knowledge of nuclear weapons will not save me from fallout, awareness of recent advances in speech synthesis [6] [7] [8] will make me significantly more skeptical that Obama can speak Chinese. Bullets do not discriminate on one's beliefs, but my knowledge of modern photo editing makes it difficult to convince me that Putin is capable of riding a bear.

For a specific example, we can look at a technology that has (thankfully) not destroyed modern society despite its potential for chaos: Photoshop.

Photoshop: A Case Study

When the camera was invented in the 1800s, it was heralded as a new, impartial way to record history. Instead of relying on unreliable secondhand accounts, people could directly glimpse an impartial record of the past and relive the moment for themselves.

In the early days of photography, it was not widely known that photos could be manipulated. The infamous dictator Joseph Stalin capitalized on this ignorance to literally rewrite history in his own favor. Personal enemies were executed and then erased from photographs of important historical events, with none to know better. Political propaganda was photoshopped onto signs held by protesters, manufacturing support for political ideologies where none existed. Such techniques allowed Stalin to imprison and execute millions of citizens without losing power.

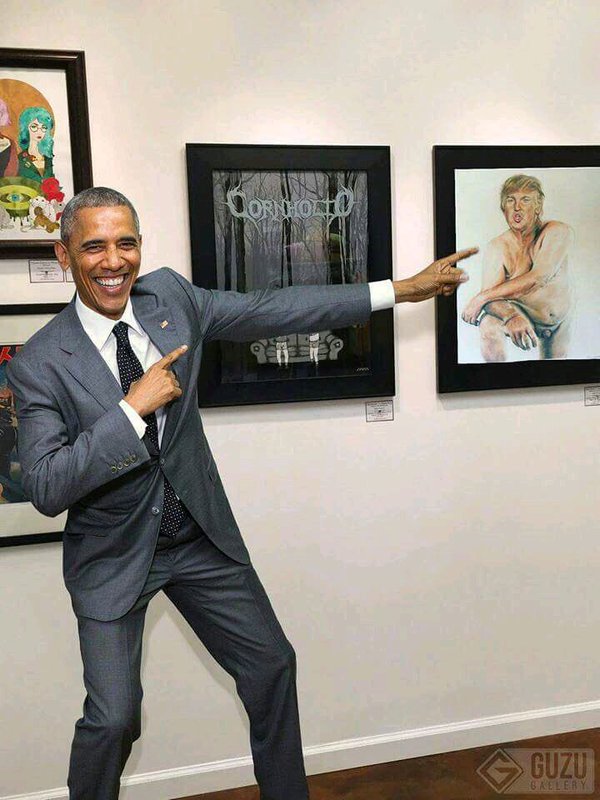

Upon Photoshop's release in 1988, people were naturally worried that open access to photo editing technology would have severe potential for abuse. Thirty years later, society has emerged relatively unscathed despite Photoshop being simple enough for high school students to use and ubiquitous enough to commandeer its own verb. Why? Precisely because everyone knows about Photoshop. To a population largely inoculated to its power, Photoshop is useful only for pranks, making absentee friends feel included in group outings, and the occasional, short-lived publicity stunt. To a population that believed that photos were an unalterable snapshot of reality as did most a hundred years ago, photo editing was the ultimate propaganda. Oh the difference information can make.

While recent advances in voice, text, and image generation [9] [6:1] [7:1] [8:1] may cause some to fear that an apocalypse is right around the corner, I suspect that these technologies will follow a similar trend to Photoshop: society will simply learn to become more skeptical.

Analysis of GPT-2

Analyzing text generation specifically, OpenAI justifies the danger the GPT-2 might pose by selecting a handful of model-generated stories. For convenience, I have copied the prompt and first two paragraphs of their example story on unicorns below.

PROMPT: In a shocking finding, scientist discovered a herd of unicorns living in a remote, previously unexplored valley, in the Andes Mountains. Even more surprising to the researchers was the fact that the unicorns spoke perfect English.

MODEL: The scientist named the population, after their distinctive horn, Ovid's Unicorn. These four-horned, silver-white unicorns were previously unknown to science. Now, after almost two centuries, the mystery of what sparked this odd phenomenon is finally solved.

Note here that in addition to running the model a few times and hand-picking the best sample, the prompt is also itself cherry-picked. In particular, prompting on unicorns that speak English can easily mask deeper issues: generated sentences that would usually be considered nonsense may "fit" with a nonsensical prompt.

Even considering the above, this short sample still has severe coherence flaws. The first sentence implies that the unicorns have a single horn, while the second suggests that they have four. Additionally, while the prompt implies that the unicorn discovery is recent news, the third sentence implies that the unicorns were discovered two centuries ago.

This may sound like nitpicking [10], but I think it is evidence of a deeper issue common to many deep learning models: GPT-2 doesn't have true "understanding" of the text it is generating. It is extremely easy to generate sentences that look presentable at a glance. For example, the postmodernist essay generator [11] and Mathgen use context free grammars to generate "grammatically correct" sentences without any semantic meaning. After all, to someone unfamiliar with mathematics, both of the equations below look like gibberish anyways.

\begin{equation}

\frac{{\partial ^2 B}}{{\partial x^2 }} = \frac{1}{{c^2 }}\frac{{\partial ^2 B}}{{\partial t^2 }}

\end{equation}

\begin{equation}

\frac{1}{O ( {D^{(Y)}} )} \ge \prod_{\Psi \in V} \iint_{\emptyset}^{0} \tan \left(-e \right) + \overline{\frac{1}{0}}.

\end{equation}

Generating valid sentences is easy. Generating coherence is hard.

Further examination of a raw dump of non cherry-picked outputs reveals similar issues. To be fair to OpenAI, GPT-2's generations are a cut above any other generative language models I have seen, but we are still a long ways away from human level coherence. Crucially, none of the samples shown by OpenAI are at a level where they can be directly misused by a malicious agent.

In addition, it isn't obvious that the GPT-2 is superior to its open sourced sister language models. While BERT [4:1] ran ablations to show that their bidirectional encoders provided performance gains over unidirectional language models like the GPT [2:1] independent of model size, OpenAI has yet to publish any such comparisons to other existing models. Since they also do not fine-tune their model, we are also unable to directly compare performance on downstream tasks like summarization or translation.

Why Open Sourcing the Full Model is Important

Some may counter that open sourcing the complete model is unnecessary, and that it is sufficient to merely disclose the research result. This is mistaken in a few ways.

AI research has exploded in part due to open source, as researchers can iterate on existing research in the blink of an eye instead of having to rebuild prior work from scratch. As one of the most influential organizations in AI research, OpenAI's strong history of open source has undoubtedly inspired others to do the same. If OpenAI's new policy reverses this trend, other researchers may follow suit, threatening to end the culture of open source that has brought so much benefit to our field.

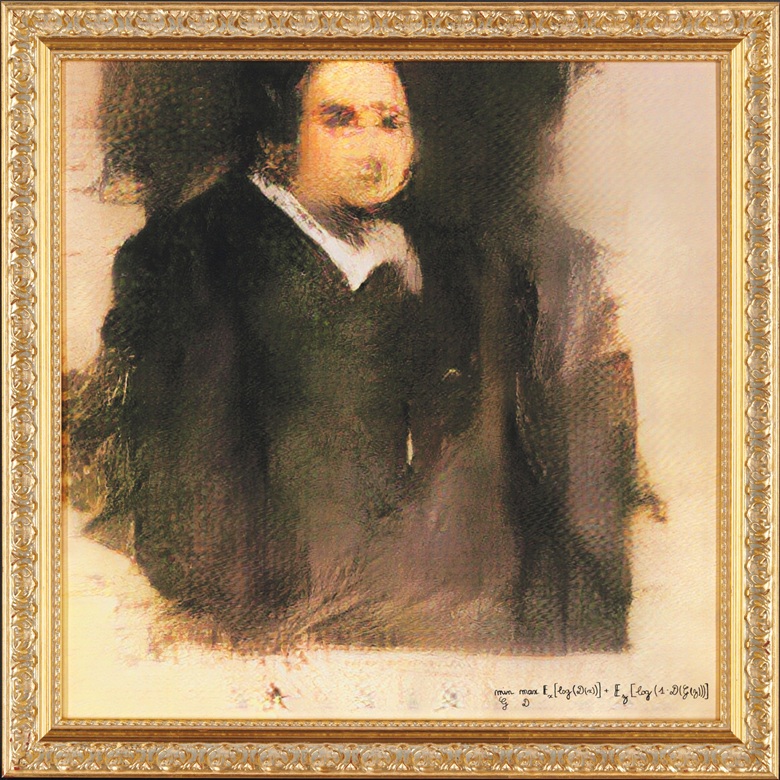

Additionally, open source encourages the spread of information to the general public. With open source, thispersondoesnotexist.com made it to the top of ProductHunt. With open source, artists produced the first AI-generated art piece sold at a major art auction. While OpenAI's research blog is only read by ardent machine learning practitioners, work built on open source can reach a much wider audience that is unlikely to have seen the original research announcement.

Open source also ensures research legitimacy. In a field littered with broken promises, it is of paramount importance that researchers can replicate extraordinary claims by examining the open sourced code. OpenAI's reputation ensures that no one will question this particular result regardless of whether it is open sourced, but this reputation is built upon excellent prior open sourced work. Remember that even if you do not lie, others will, and without a way to verify, it becomes impossible for either researchers or the public to see through the smoke and the mirrors.

This is not to say that everything should be open sourced without a second thought. Sufficiently dangerous technologies of the destructive kind should never be made easily accessible. Even for particularly dangerous technologies of the deceptive kind, it may be advisable to add a small delay between paper publication and code release to prevent a fast-reacting malicious actor from swooping in before the public has had time to fully process the new results. If OpenAI believes that the GPT-2 is such a result, I would advocate that they commit to open sourcing their model by a specific date in the near future.

Final Thoughts

AI research has benefited substantially from an open source culture. While most other disciplines lock state-of-the-art research behind expensive paywalls, anyone with an internet connection can access the same cutting edge AI research as Stanford professors, and running experiments is as simple as cloning an open source repository and renting a GPU in the cloud for only a few cents an hour. Our dedication towards democratizing AI by publicly releasing learning material, new research, and most importantly, open sourcing our projects is why we have progressed so rapidly as a field.

I commend OpenAI for their fantastic new research pushing the limits of language modeling and text generation. I also thank them for their deliberate thought and willingness to engage in a much needed discussion on research ethics, something the AI field does not often talk about despite its paramount importance. OpenAI was correct to raise questions regarding misuse of AI but incorrect to use this as a justification to not open source its research.

I sincerely hope that 2019 will not be the inflection point where machine learning shifts from being an open system to a closed one that is neither safer nor conducive to progress as a field. OpenAI, for the sake of our future, please open source your language model.

Correction: Alec Radford graciously pointed out that I linked the wrong page of sample GPT-2 outputs. In light of this, the raw samples are much closer to the cherry-picked stories and the article now reflects that.

Citation

For attribution in academic contexts or books, please cite this work as

Hugh Zhang, "OpenAI: Please Open Source Your Language Model", The Gradient, 2019.

BibTeX citation:

@article{ZhangOpenAI2019,

author = {Zhang, Hugh}

title = {OpenAI: Please Open Source Your Language Model},

journal = {The Gradient},

year = {2019},

howpublished = {\url{https://thegradient.pub/openai-please-open-source-your-language-model/ } },

}

If you enjoyed this piece and want to hear more, subscribe to the Gradient and follow us on Twitter.

Cover image source. The left photo of Obama is a doctored version of the right photo.

Special thanks to Felix Wang, Ani Prabhu, Philip Hwang, and Eric Wang for their comments, suggestions and especially their questions.

Hugh Zhang is an editor at the Gradient and a researcher at the Stanford NLP Group, with interests in generative models and AI policy. If you enjoyed this piece and want to hear more, subscribe to the Gradient and follow the author on Twitter.

Howard, Jeremy, and Sebastian Ruder. "Universal language model fine-tuning for text classification." arXiv preprint arXiv:1801.06146 (2018). ↩︎

Radford, Alec, et al. "Improving language understanding by generative pre-training." URL https://s3-us-west-2. amazonaws. com/openai-assets/research-covers/languageunsupervised/language understanding paper. pdf (2018). ↩︎ ↩︎

Peters, Matthew E., et al. "Deep contextualized word representations." arXiv preprint arXiv:1802.05365 (2018). ↩︎

Devlin, Jacob, et al. "Bert: Pre-training of deep bidirectional transformers for language understanding." arXiv preprint arXiv:1810.04805 (2018). ↩︎ ↩︎

While OpenAI has previously pondered the relationship between open source and AI misuse, this marks the first time an AI lab has refused to open source their work in the name of safety. ↩︎

Oord, Aaron van den, et al. "Wavenet: A generative model for raw audio." arXiv preprint arXiv:1609.03499 (2016). ↩︎ ↩︎

Oord, Aaron van den, et al. "Parallel wavenet: Fast high-fidelity speech synthesis." arXiv preprint arXiv:1711.10433(2017). ↩︎ ↩︎

Wang, Yuxuan, et al. "Tacotron: Towards end-to-end speech synthesis." arXiv preprint arXiv:1703.10135 (2017). ↩︎ ↩︎

Goodfellow, Ian, et al. "Generative adversarial nets." Advances in neural information processing systems. 2014. ↩︎

For the curious, some actual nitpicks include the extraneous comma in the first sentence after "population", the prompt not being itself grammatical (scientist -> scientists have), "Ovid's Unicorn" being a very odd name for a horn and confusion as to whether researchers/scientist + unicorn / unicorns is singular or plural. ↩︎

Bulhak, Andrew C. "On the simulation of postmodernism and mental debility using recursive transition networks." Monash University Department of Computer Science (1996). ↩︎