This June, our research team at the University of Washington released Grover, a state-of-the-art detector of neural fake news. Neural Fake News is the threat of AI-generated news articles controlled by human adversaries with the intent to deceive.

Grover is a strong detector of neural fake news precisely because it is simultaneously a state-of-the-art generator of neural fake news. And it is precisely because of its strong -- but not quite humanlike -- generation and discrimination capabilities that we have made the model publicly available. We’ve open-sourced the code and publicly released the model weights for the smaller Grover models. We’ve shared the model weights for the largest (and most powerful) Grover model, Grover-Mega, with over 30 research teams who have applied.

Our decision to release Grover-Mega to researchers was in part inspired by OpenAI’s experiment in a "staged-release" of their GPT-2 generator. This past February, OpenAI announced GPT-2, a language model deemed "too dangerous to release." While they gave journalists a demo of the most capable GPT-2 model, OpenAI initially shared only a demo of a watered-down model with researchers looking to defend against neural disinformation.

Here, I’ll discuss our decision to open-source Grover. Our motivation is threefold: the danger of these research prototypes is limited at present, replication of research prototypes is easy, and progress in research requires open-sourcing models. Our current system isn’t sufficient and we need to develop community norms on model release.

The danger of Grover is limited right now

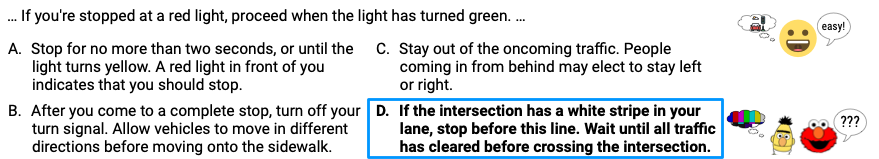

Grover and GPT-2 lack the model capacity to generate human-quality news. A single article generally runs to multiple paragraphs and draws on extensive world knowledge. As we highlighted in our recent paper introducing the HellaSwag dataset, these two factors -- length and domain complexity -- make discrimination significantly easier for both humans and machines.

An example HellaSwag question, and answers. Given a context, the task is to choose the most sensible completion. Current state-of-the-art discriminators only struggle to spot errors when the completion length is short: for HellaSwag, at most two sentences.
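To make the task format concrete, here is a minimal sketch of multiple-choice completion selection: score every candidate ending against the context and pick the highest-scoring one. This is not the HellaSwag evaluation code. The names `choose_completion` and `overlap_score` are hypothetical, the word-overlap scorer is a toy stand-in for a real model's score, and the example sentences are made up for illustration.

```python
from typing import Callable, Sequence


def choose_completion(
    context: str,
    endings: Sequence[str],
    score: Callable[[str, str], float],
) -> int:
    """Return the index of the ending that the scorer rates highest."""
    if not endings:
        raise ValueError("need at least one candidate ending")
    scores = [score(context, ending) for ending in endings]
    return max(range(len(endings)), key=scores.__getitem__)


def overlap_score(context: str, ending: str) -> float:
    """Toy scorer (an assumption, not a real model): the fraction of
    words in the ending that also appear in the context."""
    context_words = set(context.lower().split())
    ending_words = ending.lower().split()
    if not ending_words:
        return 0.0
    return sum(w in context_words for w in ending_words) / len(ending_words)


# Made-up example, not taken from the dataset.
context = "the chef cracks two eggs into the pan"
endings = [
    "a plane lands on water",
    "the chef stirs the eggs in the pan",
]
best = choose_completion(context, endings, overlap_score)
print(endings[best])
```

In practice the scorer would be a trained model, so swapping `overlap_score` for a model's likelihood or classifier output leaves the selection logic unchanged.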

Indeed, Grover can tell Grover-written news apart from human-written news with 92-97% accuracy, depending on the assumptions made about the adversary that generated the articles. Grover can also flag GPT-2-written news articles as fake with 96% accuracy -- in a completely zero-shot setting.
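For readers unfamiliar with how such accuracy figures are computed, the sketch below shows the basic arithmetic for a binary detector: each article gets a predicted probability of being machine-written, and accuracy is the fraction of articles classified correctly at a chosen threshold. The function name `detection_accuracy`, the 0.5 threshold, and the scores are all illustrative assumptions; this is not Grover's evaluation code, and the numbers are not results from the paper.

```python
from typing import Sequence


def detection_accuracy(
    machine_probs: Sequence[float],
    is_machine: Sequence[bool],
    threshold: float = 0.5,
) -> float:
    """Fraction of articles whose thresholded prediction matches the label."""
    if len(machine_probs) != len(is_machine):
        raise ValueError("scores and labels must have the same length")
    if not machine_probs:
        raise ValueError("need at least one article")
    correct = sum(
        (prob >= threshold) == label
        for prob, label in zip(machine_probs, is_machine)
    )
    return correct / len(machine_probs)


# Made-up detector scores and ground-truth labels for four articles.
probs = [0.9, 0.2, 0.7, 0.4]
labels = [True, False, False, True]
print(detection_accuracy(probs, labels))  # prints 0.5
```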

But the issue runs deeper. As we argue in our paper, real-world adversaries largely require controllable generation. For example, AI-generated propaganda must be tightly on-message for it to be useful. Adversaries must also generate more than just the news article body; for instance, they must generate a headline, date, and an author list. Though Grover is more controllable than GPT-2, it is not autonomous. Adversaries will need extensive human labor to direct generation.

In short, our experiments suggest that the quality and controllability of existing generators are not enough for them to do significant damage to the world.

The true danger lies not in research prototypes, but in even bigger models

If a serious adversary wanted to generate neural fake news, they probably wouldn’t directly use the Grover weights. The largest Grover model costs only $35k to train. Real-world adversaries have far more money and resources, and could likely train a significantly larger model.

This risk requires fundamental research now into these models, and into making platforms more secure from disinformation -- before it’s too late.

Research requires releasing possibly dangerous models

To defend against digital threats, the computer security community relies on threat modeling: the scientific study of attacks adversaries might use. Due to the ease of automatic detection, it is likely that the most effective attacks will use very strong generators. This suggests that Grover-Mega is the most effective threat model currently available for Neural Fake News. Follow-up research can extend this threat model by studying additional sampling strategies or domains, for instance.

Still, Grover accomplishes more than a traditional threat model -- after finetuning the model weights, Grover becomes a state-of-the-art detector of neural fake news.

Overall, best enabling researchers to study attacks and defenses requires releasing the model weights. While it might be possible to engineer a compromise, like an interactive environment wherein researchers can minimally probe the model but not look at the model weights, there are serious drawbacks to this approach. The resulting environment would likely either a) fail to prevent malicious use, or b) hamper research into defenses against these models, making them more dangerous.

We need community norms for model release

As I have argued, trying to lock down research prototypes might amplify rather than prevent threats.

At the same time, our approach -- checking the information of every researcher who applies for the Grover-Mega model -- is far from ideal. Not only is it time-intensive, but we also created the rules ourselves in a completely unilateral way. Were another research group to release an even larger generator tomorrow, they might very well choose a different set of rules.

Instead, we as a community need to develop a set of norms about how “dangerous” research prototypes should be shared. These new norms must encourage full reproducibility while discouraging premature release of attacks without accompanying defenses. These norms must also be democratic in nature, with relevant stakeholders as well as community members being deeply involved in the decision-making process.

What might this look like? Possibly, when a potentially dangerous artifact is uploaded to an online repository like OpenReview or arXiv, community members would be able to study code from a smaller model. Reviewers could then vote on whether to release model checkpoints to the public.

This sketch isn’t perfect; there are key issues that would need to be resolved. However, the alternatives are also not sustainable. If our field is to make progress on spotting neural disinformation, and other threats, we’ll need to figure something out -- before it’s too late.

Rowan Zellers is a PhD student at the University of Washington. Follow him on Twitter.

Thanks to Yejin Choi, Franziska Roesner, Yonatan Bisk, Hugh Zhang, and Stanley Xie for feedback on this post.

Citation

For attribution in academic contexts or books, please cite this work as

Rowan Zellers, "Why We Released Grover", The Gradient, 2019.

BibTeX citation:

@article{Grover2019Release,
  author = {Zellers, Rowan},
  title = {Why We Released Grover},
  journal = {The Gradient},
  year = {2019},
  howpublished = {\url{https://thegradient.pub/why-we-released-grover/}},
}
