Healthcare is often described as a field on the verge of an AI revolution. Big names in AI, such as Google DeepMind, publicise their efforts in healthcare, claiming that “AI is poised to transform medicine.”

But how impactful has AI been so far? Have we really identified areas in healthcare that will benefit from new technology?

At the ACM CHI Conference on Human Factors in Computing Systems held in May this year, Carrie J. Cai of Google presented her award-winning work, ‘Human-Centered Tools for Coping with Imperfect Algorithms During Medical Decision-Making’, which discusses the increasing use of machine learning algorithms in medical decision making. Her work proposes a novel system that lets doctors refine and modify a search over pathology images on the fly, continuously improving the usability of the system.

Retrieving visually similar medical images from past patients (e.g. tissue from biopsies) for reference when making medical decisions about new patients is a promising avenue where state-of-the-art deep learning visual models can be highly applicable. However, capturing the exact notion of similarity a user requires during a specific diagnostic procedure poses a significant challenge to existing systems because of a phenomenon known as the intention gap, which refers to the difficulty of capturing the user's exact intention. We will discuss this in more detail later.

Cai’s research shows how the refinement tools developed for their medical image retrieval system increase the diagnostic utility of images and, most importantly, increase a user’s trust in the machine learning algorithm for medical decision making. Furthermore, the findings show that users are able to understand the strengths and weaknesses of the underlying algorithm and disambiguate its errors from their own. Overall, the work presents an optimistic view of the future of human-AI collaborative systems for expert decision-making in healthcare.

In this post, we want to look into three main areas: (1) the state of content-based image retrieval systems, (2) the role of deep learning in these systems and, finally, (3) their application and impact in healthcare.

The state of content-based image retrieval systems

Over the last two decades or so, content-based image retrieval (CBIR) has been an active research area in computer vision, mainly due to the ever-growing accessibility of visual data on the web. Text-based search techniques for images suffer from many inconsistencies because the text often does not match the visual content, so using the visual content itself as a ranking cue for similarity is important in many cases.

Wengang Zhou et al. point out two crucial challenges in CBIR systems, which they call the intention gap and the semantic gap.

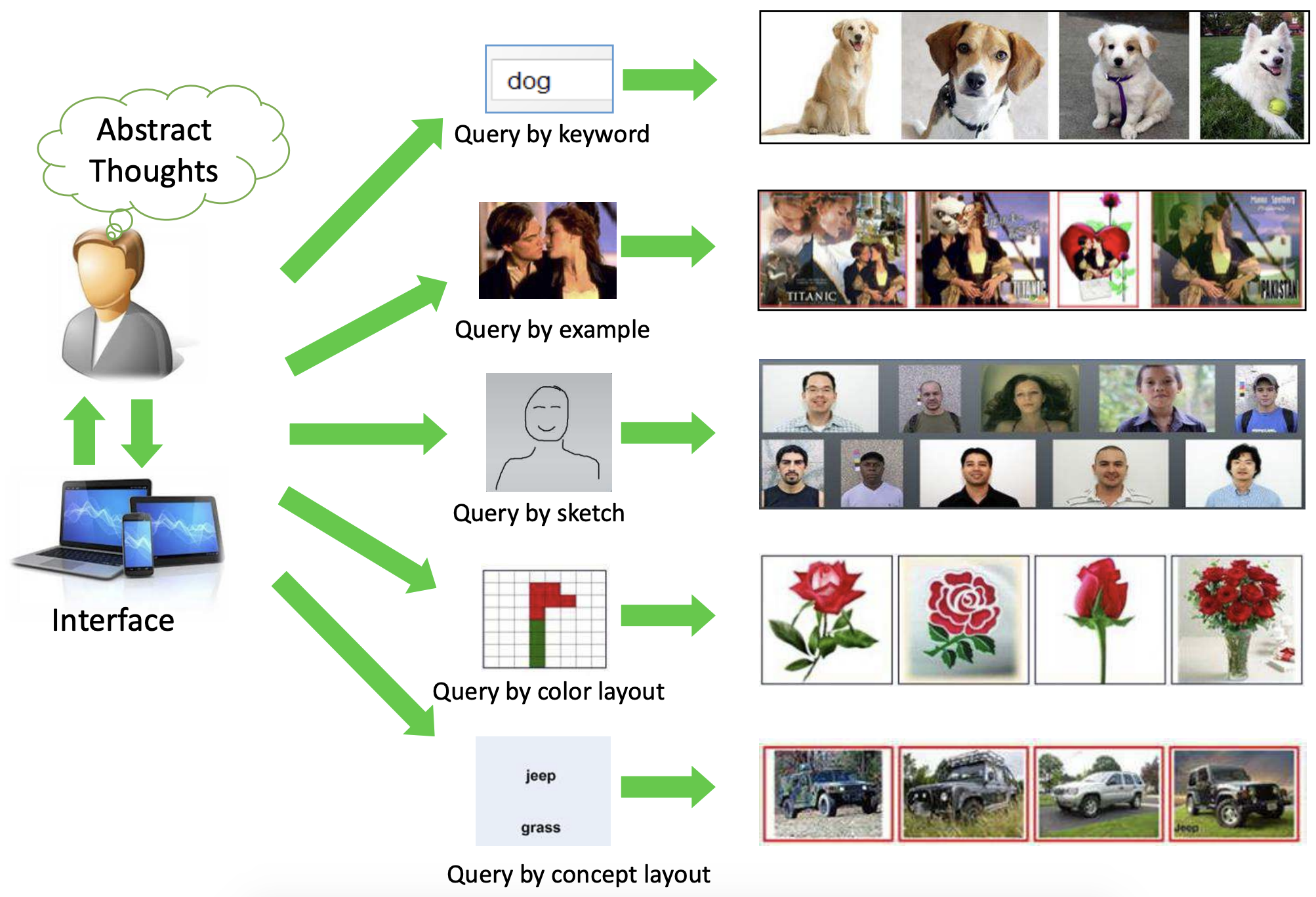

Figure 1 — Taken from the paper “Recent Advance in Content-based Image Retrieval: A Literature Survey” by Wengang Zhou et al.

The intention gap, as the name implies, refers to the difficulty of capturing the user's exact intention from the query at hand, such as an example image or a keyword. This is the challenge addressed by Carrie J. Cai et al. with the refinement tools in their user interface. Looking at past research, query formation by example image seems to be the most widely explored area, presumably because images are a convenient way to supply rich query information. This demands accurate feature extraction from images, which brings us to the next point, the semantic gap.

The semantic gap deals with the difficulty of describing high-level semantic concepts with low-level visual features. This topic has attracted a considerable amount of research over the years, with several notable breakthroughs such as the introduction of the invariant local visual feature SIFT and of the Bag-of-Visual-Words (BoW) model.

Figure 1 shows the two main functionalities of a CBIR system. Matching the similarities between the query understanding and image features can also be an important step, but it fully depends on how well the system expresses the query and the image.

The recent explosion of learning-based feature extractors such as deep convolutional neural networks (CNNs) has opened up many avenues of research that can be directly applied to the semantic gap in CBIR systems. These techniques have shown significant improvements over hand-crafted feature extractors and have already demonstrated potential in semantic-aware retrieval applications.

The role of deep learning

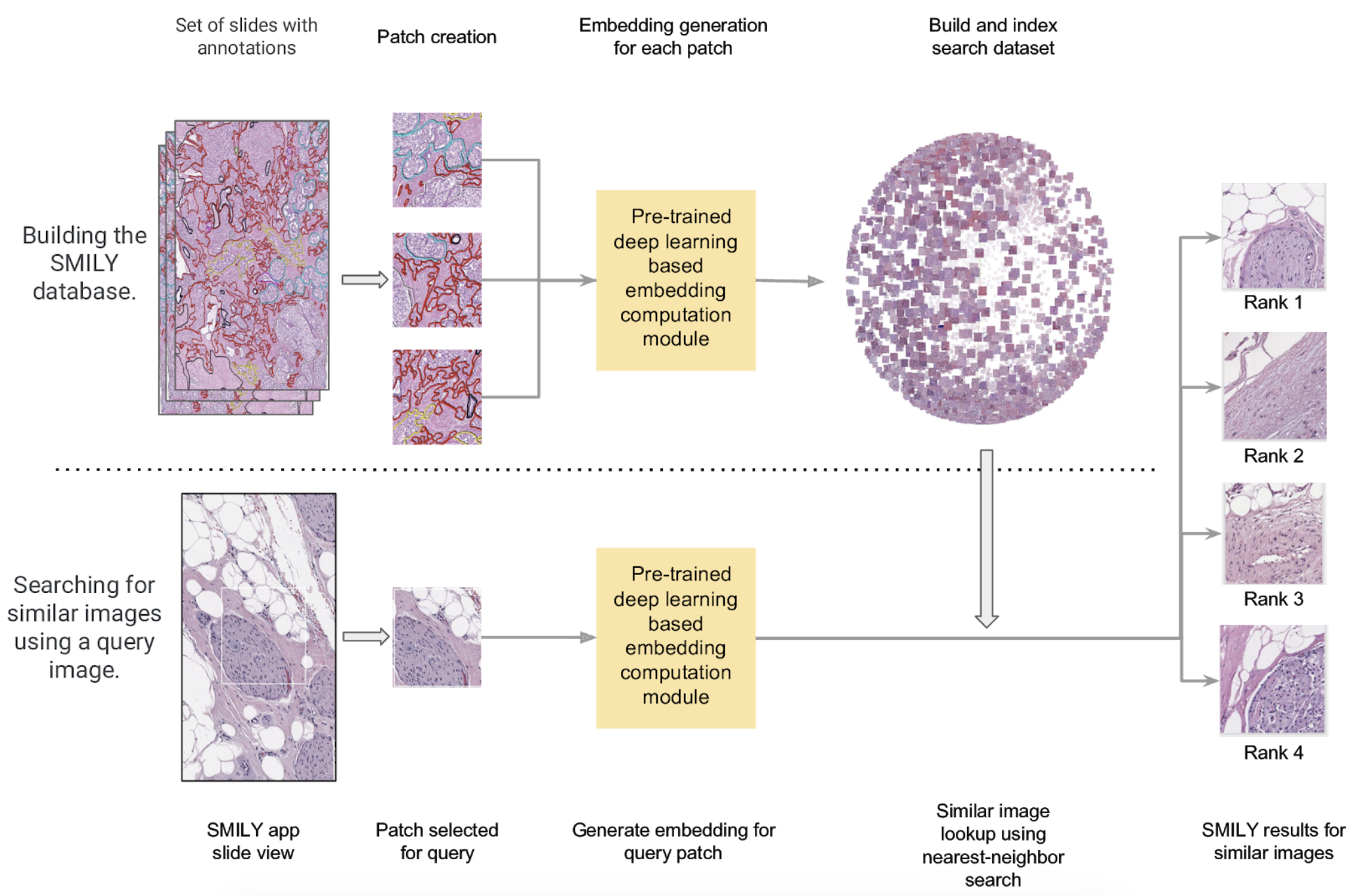

The underlying details of the CBIR system analysed by Carrie J. Cai et al. are presented by Narayan Hegde et al. in their study “Similar Image Search for Histopathology: SMILY”. An overview of the system is shown in Figure 2.

A convolutional neural network (CNN) is used for the embedding computation module shown in Figure 2, which acts as the feature extractor in the system. The network condenses image information into a numerical feature vector, also known as an embedding vector. A database of images (in this case patches of pathology image slides) is built by computing the embedding of each image with the pre-trained CNN and storing it alongside the image. When a query image is selected for searching, its embedding is computed using the same CNN and compared with the vectors in the database to retrieve the most similar images.
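The embed-then-compare flow described above can be sketched in a few lines. This is only an illustration under stated assumptions, not the SMILY implementation: `toy_embed` is a hypothetical stand-in for the pre-trained CNN, patches are represented as short lists of pixel intensities, and the function names are invented for this example. The comparison uses Euclidean (L2) distance, which is the function the study reports choosing.

```python
import heapq
import math
from typing import Callable, Dict, List, Sequence, Tuple

Vector = Sequence[float]


def l2_distance(a: Vector, b: Vector) -> float:
    """Euclidean (L2) distance between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def toy_embed(patch: Sequence[float]) -> List[float]:
    """Hypothetical stand-in for the CNN: maps a patch to a 2-d vector
    (mean intensity, intensity range). A real system would run a network."""
    return [sum(patch) / len(patch), max(patch) - min(patch)]


def build_index(
    patches: Dict[str, Sequence[float]],
    embed: Callable[[Sequence[float]], Vector],
) -> Dict[str, Vector]:
    """Embed every database patch once, ahead of query time."""
    return {patch_id: embed(patch) for patch_id, patch in patches.items()}


def search(
    index: Dict[str, Vector],
    query_patch: Sequence[float],
    embed: Callable[[Sequence[float]], Vector],
    k: int = 3,
) -> List[Tuple[str, float]]:
    """Embed the query with the same function, then return the k patches
    whose stored embeddings are closest to it, nearest first."""
    query_vec = embed(query_patch)
    scored = ((pid, l2_distance(query_vec, vec)) for pid, vec in index.items())
    return heapq.nsmallest(k, scored, key=lambda item: item[1])
```

The important design point is that the database and the query go through the same embedding function, so distances between their vectors are meaningful. A linear scan like this is fine for a sketch; a large database would normally call for an approximate nearest-neighbour index instead.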

Figure 2 — Taken from “Similar Image Search for Histopathology: SMILY” by Narayan Hegde et al.

Further, Narayan Hegde et al. explain that the CNN architecture is based on a deep ranking network presented by Jiang Wang et al., which consists of convolutional and pooling layers together with concatenation operations. During the training stage, the network was fed sets of three images: a reference image of a certain class, a second image of the same class and a third image of a totally different class. The loss function was modelled such that the network assigns a smaller distance between the embeddings of the two images from the same class than between the embedding of the reference image and that of the image from the different class. Thus the image from the different class helps strengthen the similarity between the embeddings of images from the same class.

The network was trained on a large dataset of natural images (e.g. dogs, cats, trees) rather than pathology images. Having learnt to distinguish similar natural images from dissimilar ones, the same trained network was applied directly to feature extraction from pathology images. This can be seen as a strength of neural networks in applications with limited data, and is commonly termed transfer learning.
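A loss of this kind is often written as a hinge over a triplet of embeddings. The sketch below shows one common formulation and is not necessarily the exact loss used in the study: the squared L2 distance, the `margin` value and the function names are assumptions made for illustration.

```python
from typing import Sequence


def squared_l2(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return sum((x - y) ** 2 for x, y in zip(a, b))


def triplet_loss(
    reference: Sequence[float],
    same_class: Sequence[float],
    different_class: Sequence[float],
    margin: float = 1.0,  # assumed value, chosen only for this example
) -> float:
    """Hinge-style triplet loss on three embeddings.

    The loss is zero once the same-class embedding is closer to the
    reference than the different-class embedding by at least `margin`;
    otherwise it grows with the size of the violation.
    """
    d_pos = squared_l2(reference, same_class)
    d_neg = squared_l2(reference, different_class)
    return max(0.0, margin + d_pos - d_neg)
```

For example, with the reference at the origin, a same-class embedding at distance 1 and a different-class embedding at distance 3, the triplet is already well separated and the loss is zero, so it contributes no gradient. Swapping the two so the different-class image is the nearer one produces a positive loss that training would push down.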

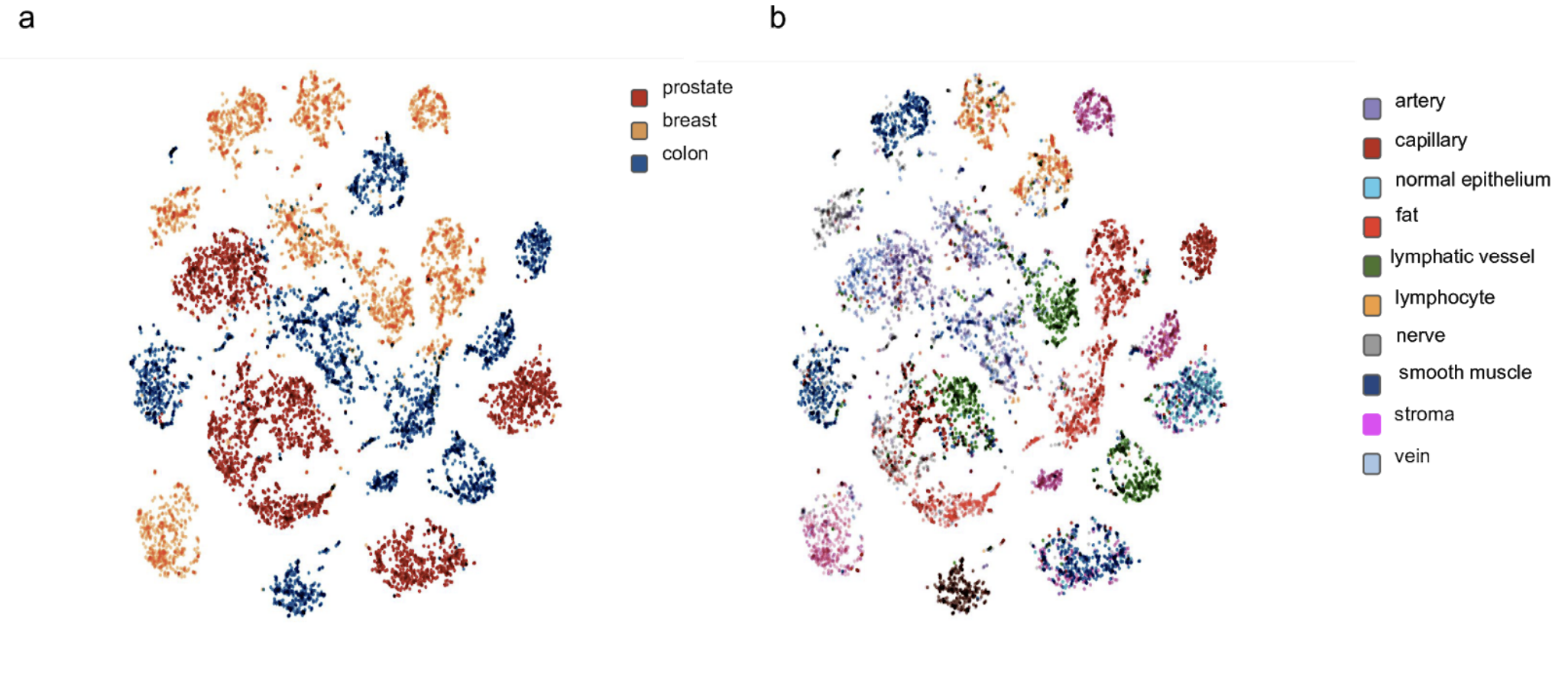

The CNN feature extractor computed a 128-dimensional vector for each image, and L2 distance was chosen as the comparison function between vectors. Narayan Hegde et al. visualised all the embeddings produced from the dataset of pathology image slides using t-SNE, as shown in Figure 3. Panel (a) shows the embeddings coloured by organ site and panel (b) shows them coloured by histologic features.

Figure 3 — Taken from “Similar Image Search for Histopathology: SMILY” by Narayan Hegde et al.

In fact, architectures and training techniques similar to deep ranking networks, such as Siamese neural networks, appear widely in the deep learning literature and have even been applied to face recognition.

Coming back to CBIR systems, we see that deep learning could help reduce the semantic gap discussed above, as these learning-based approaches have proven effective at identifying important features even in noisy natural images.

Application and impact in healthcare

So far we have looked at what goes into CBIR systems and the potential of deep learning to overcome the crucial challenge of the semantic gap. But how applicable is CBIR in healthcare? And can we clearly quantify the impact?

Henning Müller et al. state that the radiology department of the University Hospital of Geneva alone produced more than 12,000 images a day in 2002, with cardiology being the second largest producer of digital images. The study further argues that the goal of medical information systems should be to “deliver the needed information at the right time, the right place to the right persons in order to improve the quality and efficiency of care processes.” Thus, in clinical decision making, support techniques such as case-based reasoning or evidence-based medicine are expected to benefit from CBIR systems.

No matter how sound the technology is, integrating these systems into real clinical practice demands significantly more work, especially in building trust between the system and its users. This is where the study of Carrie J. Cai et al. stands out, by being very flexible about the user’s relevance feedback, which gives the user the ability to rate the results the system returns. Henning Müller et al. also discuss the importance of relevance feedback in an interactive setting, both for improving the system's results and for increasing the adaptability of CBIR systems.
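To make the idea of relevance feedback concrete, here is a minimal sketch of the classic Rocchio update from information retrieval, applied to embedding vectors. It is offered purely as an illustration of how rated results can steer the next search; it is not the mechanism used by Carrie J. Cai et al., and the function names and the weights `alpha`, `beta` and `gamma` are assumptions chosen for the example.

```python
from typing import List, Sequence


def mean_vector(vectors: Sequence[Sequence[float]], dim: int) -> List[float]:
    """Component-wise mean of the vectors, or a zero vector if none are given."""
    if not vectors:
        return [0.0] * dim
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def rocchio_update(
    query: Sequence[float],
    relevant: Sequence[Sequence[float]],
    non_relevant: Sequence[Sequence[float]],
    alpha: float = 1.0,   # weight kept on the original query (assumed)
    beta: float = 0.75,   # pull towards results rated relevant (assumed)
    gamma: float = 0.15,  # push away from results rated irrelevant (assumed)
) -> List[float]:
    """Return a refined query embedding based on the user's ratings.

    The new query moves towards the centroid of the embeddings the user
    marked as relevant and away from the centroid of those marked as not
    relevant.
    """
    dim = len(query)
    pos = mean_vector(relevant, dim)
    neg = mean_vector(non_relevant, dim)
    return [alpha * q + beta * p - gamma * n for q, p, n in zip(query, pos, neg)]
```

The refined vector would then be used in place of the original query embedding for the next round of nearest-neighbour search, so each round of ratings nudges the results closer to what the user actually meant.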

Another major point is quantifying the impact of these systems, which is crucial for the adoption and advancement of this field of research. After a user study with 12 pathologists, Carrie J. Cai et al. claim that users of their CBIR system were able to increase its diagnostic utility with less effort. The results also show increased trust, enhanced mental support for the users and an improved likelihood of using the system in real clinical practice in the future. However, diagnostic accuracy (although empirically stated to remain the same) was not evaluated in this study as it was out of scope.

Looking forward, it is evident that continuous collaboration between medical experts and AI system developers is required, both to identify use cases and to evaluate the impact of AI applications in healthcare. Additionally, the research community should focus on developing open test datasets and query standards in order to set benchmarks for CBIR applications. This would do much to drive the research forward with a clear view of each contribution.

Written by:

Mirantha Jayathilaka

PhD researcher

School of Computer Science

University of Manchester, UK.

Special thanks to Hugh Zhang, Max Smith and Nancy Xu for their insight and comments.

Citation

For attribution in academic contexts or books, please cite this work as

Mirantha Jayathilaka, "Is Deep Learning the Future of Medical Decision Making?", The Gradient, 2019.

BibTeX citation:

@article{FutureMedicineDeepLearning2019Gradient,
  author = {Jayathilaka, Mirantha},
  title = {Is Deep Learning the Future of Medical Decision Making?},
  journal = {The Gradient},
  year = {2019},
  howpublished = {\url{https://thegradient.pub/is-deep-learning-the-future-of-medical-decision-making/}},
}
