This piece was a finalist for the inaugural Gradient Prize.

Machine Learning is a powerful technique to automatically learn models from data that have recently been the driving force behind several impressive technological leaps such as self-driving cars, robust speech recognition, and, arguably, better-than-human image recognition. We rely on these machine learning models daily; they influence our lives in ways we did not expect, and they are only going to become even more ubiquitous.

Consider a couple of example machine learning models: 1) Detecting cats in images 2) Deciding which ads to show you online 3) Predicting which areas will suffer crime, and 4) Predicting how likely a criminal is to re-offend. The first two seem harmless enough. Who cares if the model makes a mistake? Number three, however, might mean your neighborhood sees little police or too much, and number four might be the deciding factor in granting you your freedom or keeping you incarcerated. All four are real-life examples, Google Image Search, Google Ads, PredPol, and COMPAS by Northpointe.

In the following post, we will discuss two complex and related issues regarding these models: fairness and transparency. Are they fair? Are they biased? Do we understand why they make the decisions they make? These are crucial questions to ask if machine learning models play important parts in our lives and our societies. We will contrast this to humans who are the decision-makers these models are assisting or outright replacing.

Fairness

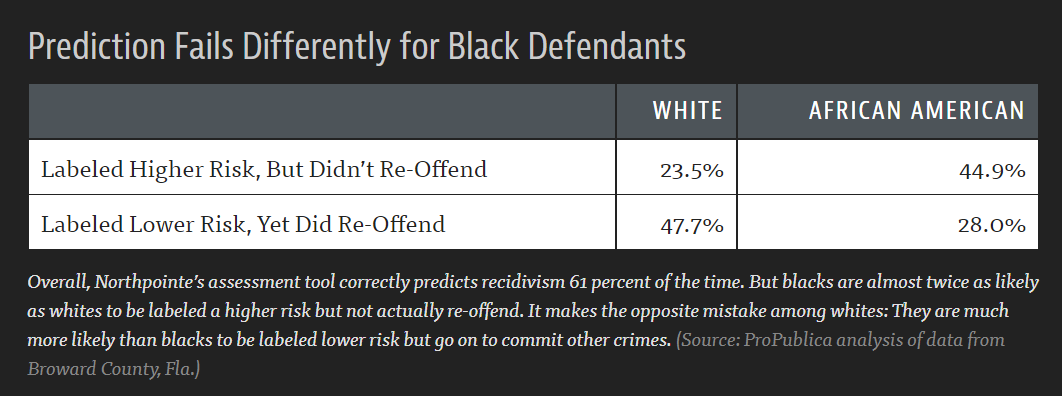

In 2016, ProPublica published a study in which they argue that there is racial bias in COMPAS by Northpointe, a software tool that predicts how likely a criminal is to re-offend. In their paradigmatic work on algorithmic bias, ProPublica found that

"Blacks are almost twice as likely as whites to be labeled a higher risk but not actually re-offend. It makes the opposite mistake among whites: They are much more likely than blacks to be labeled lower risk but go on to commit other crimes."

The numbers in the table above shows the false positives and false negatives reported in the ProPublica study. Northpointe disputes ProPublica's analysis.

First, let us note that we are not categorically against using statistics to make decisions on individuals. If the model based its decision to keep someone incarcerated on their extensive criminal record because people with similar criminal records were statistically more likely to re-offend, we would probably think this was fair. We make these kinds of decisions all the time. That said, there are specific properties, such as race, gender, religion, sexual orientation, that we as a society have decided cannot be used in certain scenarios. It's very circumstantial, however; if Google serves me ads for men's clothing instead of women's clothing based on my gender, I won't feel offended. In general, our sense of what is fair is exceptionally complex to describe and based on thousands of years of cultural history.

Machine learning models don't know these complex rules. If there is a correlation between race and re-offending rate in the data set, the model will most likely use this correlation in its decision-making. There is no guarantee that the models will be fair or unbiased -- only that they will be "good" under some quantifiable definition of good, typically the average amount of errors. Unfortunately, as described above, it is hard to quantify our sense of fairness universally. In cases where such quantification via a fairness metric is possible, we can include it in the definition of "good" and "optimize for ethics", a practical approach suggested by previous research [1].

Since machine learning models learn from data, they will learn the biases in the data. Put in another way; the models inherit our biases through the data we produce and collect. As such, it is essential to think about whether the data collection was biased. In the case of PredPol, the predictions it makes of crime are self-reinforcing since sending more police to an area generally results in them discovering more crime in that area. Once this new data feeds back into the system, it becomes even more confident in its prediction of this area as an area with a high crime rate. Recent work aims to address such feedback loops by viewing fairness problems as dynamical systems [2].

Even if you are aware of the risk of biases and try to avoid them, it might be hard to escape. In the case of COMPAS, Northpointe deliberately did not include race as one of the 137 properties upon which it bases its predictions; a practice commonly referred to as color blindness or Fairness through Unawareness [3]. However, as a consequence of colonialism, slavery, and other forms of oppression, there are correlations between race and income, poverty, parental alcohol abuse, criminal history, etc. which are included in the 137 properties. So given the 137 "color blind" properties, the model could, in principle, infer the race property to a high degree of certainty.

However, all hope is not lost as ensuring fairness in machine learning models is an active area of research. There's a wealth of proposed methods aiming at increasing model fairness, which can be categorized into approaches that a) adjust data, e.g., by oversampling, augmenting, or re-collecting better datasets, b) adjust models, e.g., via optimization constraints as discussed above, or c) re-calibrate the predictions, for example, by adjusting thresholds for specific demographic groups. As an example of the latter, Hardt et al. [4] propose a criterion for discrimination against a specific protected attribute in the data, e.g., race, by requiring that the true positive and false positive rates should be the same across protected attributes. So if the data contains a record of a group of people re-offending, the model must predict that they re-offended at the same rate regardless of race. The proposed method can be used to adjust any model --- like Northpointe's COMPAS model suffering from this type of unfairness --- to ensure that it does not discriminate against protected properties.

Thus, even though it does not seem possible to quantify fairness in a universal way that machine learning models can understand, we can attempt to define and correct it on a case-by-case basis.

Transparency

A lack of transparency can compound a lack of fairness. If we cannot understand why a machine learning model made a decision, it is hard to judge whether it is fair or not. Was my loan application rejected due to my economy or my race? If it were the former, that would be fair. If it were the latter, I would be outraged.

Learning models from data instead of hand-crafting them often results in models that are black boxes, i.e., it is hard to explain why the model produced a particular output for a particular input. This black-box nature is arguably intrinsic to machine learning since machine learning is often used when you don't know how to construct the model by hand or don't want to.

Just as there is no guarantee that a model will seem fair to humans, there are no guarantees that it will make sense to humans, simply that it will be "good". As with fairness, "Makes sense to humans" is hard to quantify; otherwise, one could include it in the definition of good.

Some machine learning methods lead to more understandable models than others, but this often comes at the cost of having less powerful models. If we limit ourselves to models that humans can understand, we might be ruling out very powerful models.

Deep Learning is a powerful and popular paradigm in machine learning behind many of the aforementioned technological leaps that take this to the other extreme. The philosophy is to learn very complex models and keep human processing of the data to a minimum. The resulting models are often so complex they are effectively impossible to understand. Even simple deep learning models routinely have millions of parameters.

Explanations produced with the LIME algorithm, image from[5]

Ensuring that machine learning models are explainable is an active area of research as well. Ribeiro et al. propose LIME, a method to explain the output of any model by learning a simple, explainable model to mimic the output of the complex model. Once the simple model is learned, one can examine which inputs are important to the output, e.g., whether my race or economy led to the decision. Been et al. present another way to understand what factors a model uses. First, they identify a model's internal encoding of a concept by showing it data representing this concept (e.g., input representing black and white people). Once such "concept activation vectors" are identified, the authors investigate how these vectors influence the model's predictions. If, for example, adding to the "blackness" concept increases the predicted risk score, the model does indeed use race to arrive at its decision.

Are humans better?

As discussed earlier, machine learning models are prone to biases and are often opaque. Since these models influence or outright replace human decision-makers, it seems appropriate to compare these models to their human counterparts. Instead of asking whether or not a machine learning model is fair and transparent in some abstract, absolute sense, we should be asking whether they are more or less fair and transparent than a human would be.

The extent of human cognitive biases is extensively documented. We create and rely on stereotypes [5:1], and we seek to confirm our own beliefs and ignore evidence to the contrary [6]. Even more grotesquely, we are influenced by utterly arbitrary information such as dice rolls, even when making important decisions such as determining the length of prison sentences [7]. We are not the rational, unbiased creatures we like to think we are.

In his book "Clinical versus statistical prediction: A theoretical analysis and a review of the evidence." Meehl reviews 20 studies and finds that in the majority, expert professionals were outperformed by simple statistical predictors or rules when it came to predicting clinical outcomes. Similar results have been achieved in over 200 studies subsequently in a diverse array of fields and applications [8]. One additional factor to consider in human decision-making is the dependence on the individual human making the decision. Different judges might judge the same defendant differently, and one radiologist might detect your cancer, whereas another might not, which hardly seems fair or desirable.

A complete opaqueness compounds this extensive list of cognitive defects and poor predictive performance. Unlike machine learning models, we cannot observe the internal state of the human mind. The naive approach to understanding people's motivation is to ask them, but they most likely do not know themselves, as demonstrated by the dice rolls affecting sentencing length. Further, we might deliberately lie about our motivation to avoid social stigma or promote our agendas.

Lastly, we are usually invested in our beliefs and decisions to the point of being defensive. Once we accept a worldview, it is hard for us to concede that we are wrong, even in the face of overwhelming evidence. This defensiveness makes detecting and correcting poor decisions and biases even harder.

Are humans really that bad? Kahneman [9] argues eloquently that we do indeed have the capacity to act rationally but that it is a taxing exercise and something we can only do in short periods, under the right circumstances. The fact that we have compiled a list of our own cognitive biases speaks to our ability to be rational. By concentrated conscious effort and careful deliberation, we can be our better selves. However, the conditions under which we must make many of our decisions are not conducive to this type of behavior. We cannot reasonably expect a busy doctor on the 14th hour of their shift to acting completely rationally and optimally.

Discussion

It is interesting to note that we recognize and forgive our own irrational thinking. Imagine a driver swerving to avoid a collision with a cat jumping onto the road and accidentally hitting and killing two persons instead. No one would blame the driver. Indeed, “to err is human”. Imagine a self-driving car doing the same thing. There would be outrage that the car did not choose to hit the cat. We have higher expectations of a party building the model deciding how to react that has the time to properly contemplate things, and rightly so. We can and should expect such parties to work closely together with regulatory bodies, and, if need be, we can enforce it through policy. In short, we can and should hold these models to higher standards than we do ourselves.

Introducing these models exposes our faults through them and offers us a chance to carefully deliberate how to best handle them. We gain a platform from which we can make disinterested rational decisions about our biases freed from the stresses of having to make decisions in the real world, in real-time. We can attempt to quantify our biases, and we can strive to reduce them. We only need to summon our capacity for rational thought and unbiased judgment once, while crafting the model, instead of continually, every time we make a decision. We can encode our better selves into these models, and rest assured that even if our judgment momentarily falters, theirs will not.

Cynthia Dwork, Moritz Hardt, Toniann Pitassi, Omer Reingold, and Richard Zemel. Fairness through Awareness. Proceedings of the 3rd Innovations in Theoretical Computer Science Conference, 2012. ↩︎

Xueru Zhang, Ruibo Tu, Yang Liu, Mingyan Liu, Hedvig Kjellström, Kun Zhang, and

Cheng Zhang. How Do Fair Decisions Fare in Long-Term Qualification?

Thirty-Fourth Conference on Neural Information Processing Systems, 2020. ↩︎Pratik Gajane and Mykola Pechenizkiy. On Formalizing Fairness in Prediction with

Machine Learning. arXiv preprint arXiv:1710.03184, 2017. ↩︎Moritz Hardt, Eric Price, Nati Srebro, and others. Equality of Opportunity in Supervised Learning. In Advances in Neural Information Processing Systems, pages 3315–3323, 2016. ↩︎

Marco Tulio Ribeiro, Sameer Singh, and Carlos Guestrin. " Why Should I Trust You?":

Explaining the Predictions of Any Classifier. arXiv preprint arXiv:1602.04938, 2016 ↩︎ ↩︎James L Hilton and William Von Hippel. Stereotypes.

Annual Review of Psychology, 47(1):237–271, 1996. ↩︎Raymond S Nickerson. Confirmation Bias: A Ubiquitous Phenomenon in Many Guises.

Review of General Psychology, 2(2):175, 1998. ↩︎Birte Englich, Thomas Mussweiler, and Fritz Strack. Playing Dice with Criminal Sentences: The influence of Irrelevant Anchors on Experts’ Judicial Decision Making.

Personality and Social Psychology Bulletin, 32(2):188–200, 2006. ↩︎Daniel Kahneman. Thinking, fast and slow. Macmillan, 2011 ↩︎

Author Bios

Rasmus Berg Palm is a Postdoc at the IT University of Copenhagen. His research interest is mainly unsupervised/self supervised learning of world models and using these for planning and reasoning.

Pola Schwöbel has a background in mathematics and philosophy. She is a PhD student at the Technical University of Denmark where she does research within Probabilistic Machine Learning and AI Ethics.

Citation

For attribution in academic contexts or books, please cite this work as

Rasmus Berg Palm and Pola Schwöbel, "Justitia ex Machina: The Case for Automating Morals", The Gradient, 2021.

BibTeX citation:

@article{palm2021morals,

author = {Palm, Rasmus Berg and Schwöbel, Pola},

title = {Justitia ex Machina: The Case for Automating Morals},

journal = {The Gradient},

year = {2021},

howpublished = {\url{https://thegradient.pub/justitia-ex-machina/} },

}

If you enjoyed this piece and want to hear more, subscribe to the Gradient and follow us on Twitter.