This piece was a runner-up for the inaugural Gradient Prize.

In 2019, the United States Department of Homeland Security (DHS) announced its plans to collect social media usernames of foreign individuals seeking entry into the United States, whether as travelers or immigrants, as part of a new “extreme vetting” process to determine admissibility into the country. For those whose online activity is mediated primarily in a language other than English, an official manual distributed by US Citizenship and Immigration Services instructs officers to use Google Translate to translate their social media posts into English. This practice continued in spite of Google’s warning that its translation services are not intended to be used in place of human interpreters.

The practice of translation between human languages has long been shaped by power asymmetries. For example, centuries ago European colonizers imposed boundaries on a continuum of linguistic communities and practices on the African continent, producing European understandings of discrete language objects. These boundaries, and the very names applied to the resulting objects, served as the basis for the language documentation and translation materials that undergirded colonizing efforts. Some of the first grammar resources for previously unwritten languages were created by Christian missionaries in order to translate the Bible and proselytize to indigenous peoples worldwide. History is rife with examples of colonial subjects who were forced to learn the languages of their colonizers, often facing punishment for speaking in their native languages. In many cases, this linguistic oppression has contributed to the decline in native speakers of indigenous languages, and the demand for colonial subjects to render themselves legible through obligatory translation further deepened their subjugation.

As the DHS vetting protocol makes evident, the deployment of machine translation technology extends a tradition of difference-making and exerting power over subordinate groups, whether by linguistic suppression or forced translation. In this manner, language technologies enable a new kind of linguistic surveillance. In fact, such interests are precisely what fostered the development of machine translation technology in the mid-20th century. The sociopolitical context in which machine translation was first developed has shaped the core goals and assumptions of the project, and its continued development and use in a commercial setting not only facilitate but require a consolidation of resources and power at an increasingly large scale. In light of questions of how technology complicates understandings of language ownership, linguistic communities turn toward avenues of resistance.

Origins of Machine Translation

The first machine translation efforts in the United States were spurred by the Cold War. Early rule-based systems were largely developed with funding from and for use by the military and other federal agencies, often relying on interdisciplinary collaboration between engineers and linguists. After a period of steady research largely dominated by government-funded academic work, machine translation became widely available to the general public during the personal computing revolution of the 1990s, with the advent of commercially available translation software. By the 2000s, Google’s tremendous index of web content and its swaths of capital enabled the enrichment and application of statistical (and later, neural) machine translation techniques, leading to the deployment of freely available translation services on the web as they are commonly used today.

Roots of Machine Translation: 1949-1997

“[O]ne naturally wonders if the problem of translation could conceivably be treated as a problem in cryptography. When I look at an article in Russian, I say, ‘This is really written in English, but it has been coded in some strange symbols. I will now proceed to decode.’”

– Warren Weaver, in correspondence to Norbert Wiener, 1947

Modern machine translation technology traces its roots to work in cryptography and codebreaking during World War II. American scientist Warren Weaver, who had collaborated with pioneering information theorist Claude Shannon, had an interest in the application of information theory to the translation of human languages. In 1949, Weaver, then director of the Natural Sciences Division at the Rockefeller Foundation, distributed a highly influential memo, entitled Translation, to a handful of linguists and engineers, in which he laid out a call to action for the application of computers to the translation of human languages. Weaver’s memo spurred a proliferation of research efforts in machine translation at a variety of institutions in academia and industry, including the University of Washington, Georgetown University, IBM, and the RAND Corporation.

The mere decision of which languages to target for the first efforts in automatic translation was a political one, shaped at the time by Cold War rivalries between the United States and the Soviet Union, and spurred in particular by a desire to increase monitoring of scientific literature in Russian. Anthony Oettinger, then an undergraduate at Harvard University, recalls being recruited to collaborate with computer scientist Howard Aiken, one of the recipients of Weaver’s memo, specifically because he was a student of Russian.

Research continued at a steady pace, and despite a promising system demonstration of Russian-English translation by the Georgetown-IBM team, funding dwindled in the 1960s in the wake of the damning ALPAC report that bemoaned the poor quality of machine translation. However, the United States government remained a faithful consumer of machine translation technology; in his 1997 keynote address at the sixth Machine Translation Summit, Tom Pedtke reflects on several key developments in the 1990s fostered by government demand. For example, the Drug Enforcement Administration was devoting resources to the improvement of Spanish-English translation in 1991, while projects in Chinese-English and Korean-English translation were championed by the NSA, the FBI, DARPA, and the Navy. The end of the 1990s saw a shift, however, in the key players in (and consumers of) machine translation.

1997 to Now: Data-driven Translation

“The most important thing happening in Silicon Valley right now is not disruption. Rather, it’s institution-building — and the consolidation of power — on a scale and at a pace that are both probably unprecedented in human history.” – Gideon Lewis-Kraus, ‘The Great A.I. Awakening’ New York Times Magazine, Dec. 14, 2016

By the mid to late 1990s, advances in computational processing power and the personal computing revolution had enabled the development of translation tools for use by civilians. SYSTRAN, which had been developed from the machine translation program at Georgetown University, teamed up with hardware powerhouse Digital Equipment Corporation to launch AltaVista’s Babel Fish, the first free web-based translation service, in 1997. Originally limited to translation between English and a handful of Romance languages, it was widely applauded; user studies revealed heartwarming anecdotes of how the service enabled communication with beloved monolingual family members and provided a unique source of amusement when translations went awry. The following year, Google was founded. As graduate students at Stanford, Sergey Brin and Larry Page had begun work on building a massive index of the contents of the nascent World Wide Web, as part of the Digital Libraries Project funded jointly by DARPA, NSF, and NASA; this work would come to form the basis for the Google search engine.

By 2004, Google was an enormously valued, publicly traded company that had earned the praise of web surfers worldwide. Brin claims that it was a message from a South Korean fan, mis-translated to ‘The sliced raw fish shoes it wishes. Google green onion thing!’ by the SYSTRAN software Google had been licensing, that spurred the decision to expand Google’s capabilities to include the translation of languages. After all, in Google’s quest to index all of the web, it would need to be able to include those parts of the internet that were not in English.

That year, Page reached out to Franz Och, then a research scientist at the Information Sciences Institute at the University of Southern California, to hire him to build what would become Google Translate. Och was skeptical at first, and bewildered as to why a search engine company would want to dive into the domain of translation, but was enticed by the fact that Google had unprecedented computational resources with which to push the frontiers of statistical machine translation, made newly tractable by the sheer quantity of text data at Google’s disposal. Over the next few years, under Och’s direction, Google Translate far outpaced other machine translation efforts by university research groups, developing efficient systems for dozens of languages. Mark Przybocki, who oversaw machine translation evaluation contests at the National Institute of Standards and Technology in 2010, likened Google’s competitive advantage to “going up against someone with access to a football field worth of processors to collect data.” Today, Google Translate boasts the ability to translate texts between over a hundred languages, and other tech giants like Microsoft and Facebook have also ventured into machine translation research.

Use (and abuse) of machine translation

A key driving force behind machine translation has been the quest for an exhaustive collection of knowledge that transcends local contexts. The earliest efforts in American machine translation were intended to decipher Cold War-era Russian communications and scientific papers, and now, Google has deployed its state-of-the-art machine translation tools to build its massive database of the world’s online content. While the casual user of Google Translate ostensibly benefits from access to this resource, these free tools may be understood as ‘hooks’ that ensnare users further into the extractive relationship of surveillance capitalism, a dynamic that ‘shifts economic activity to a handful of tech giants as providers of translation’.

While the key government benefactors of machine translation technology emphasized its utility for ‘peacekeeping’ via mutual understanding, Google advertises its translation service as a tool that ‘break[s] language barriers and ... [makes] the world more accessible’. This imagery of language as a “barrier” is often invoked in discussions of machine translation, offering a utopian view of universal understanding when these barriers are broken. Ironically, as the Department of Homeland Security’s social media vetting process shows, translation software is deployed specifically to uphold cultural barriers, merely adding to an arsenal of technological tools for demarcating ‘in’ and ‘out’ groups.

Further complicating the matter is that the apparent fluency of neural machine translation output for many language pairs can disguise the fact that systems still struggle to produce adequate translations, can amplify social biases, and are prone to inaccuracy in conveying important aspects of meaning like negation. This is particularly dangerous when considering the high-stakes scenarios in which machine translation technology is frequently used and relied upon, such as in encounters between police and civilians. We must be vigilant when applying probabilistic tools in an attempt to render legible that which has been obscured or distorted, and translation is no exception. At the same time, we must also be attentive to the conditions that make scenarios like police-civilian interactions so high-stakes in the first place — more accurate translation systems will not meaningfully disrupt stark power imbalances in society, and we should not pretend they will.

As this article has been drafted in the midst of the global COVID-19 pandemic, we would be remiss to overlook the critical role that translation has played in the exchange and spread of vital information on best practices for prevention, testing, and the search for a treatment. The increasing reliance on automatic translation for gleaning insights from the international ecosystem of scientific knowledge has prompted calls for scholars to develop a ‘machine translation literacy’ toward an understanding of the shortcomings of automatically translating scholarly texts. The limitations of machine translation must be considered by technologists, policymakers, and affected stakeholders in delineating appropriate uses for it.

Rethinking and reshaping machine translation

“The fact that language is not a tangible object that can be located or re-located makes issues of cultural ownership more subtle but also more urgent than for concrete pieces of art or other cultural objects.”

– Margaret Speas, ‘Language Ownership and Language Ideologies’

"Languages aren’t stolen the way property is stolen. Rather, people are denied the sovereignty necessary to shape their own cultural and educational practices." – Kerim Friedman

The training and evaluation of state-of-the-art neural machine translation techniques tend to rely on large, parallel collections of data produced by human translators, a practice informed by the information-theoretic roots of the paradigm. Weaver’s characterization of translation between languages as mere decryption of encoded messages may seem crude to translation scholars and literary critics, some of whom have reservations about the possibility of faithful translation (particularly of literature and poetry; Weaver himself conceded this limitation). Indeed, the concept of ‘equivalence’ between texts is vigorously debated within translation studies. This is not to say that machine translation is epistemologically bereft; the parallel text basis of contemporary machine translation paradigms aligns with Quine’s pragmatic, behaviorist approach to translation. Whether one finds this framing compelling or not, it is nonetheless important to recognize that the data treated as gold-standard translations embeds the situated and subjective positions of the people who wrote them, which impacts the ensuing associations embedded in automated systems.

The success of contemporary neural machine translation systems is largely due to a reliance on massive collections of linguistic data from the web. There are thousands of so-called ‘low-resource’ languages (and minoritized dialects of widely-spoken languages) for which there exist neither political nor financial incentives for industry powerhouses to develop translation tools, nor the sheer volume of digitized resources required for the successful application of neural machine translation. In this regard, there may be space for linguistic communities to be selective about whether — and if so, to whom — to submit their knowledge and culture for observation. [1]

In 2005, the leaders of the Mapuche people filed an ultimately unsuccessful lawsuit against Microsoft, accusing the company of ‘intellectual piracy’ when it attempted to release a version of the Windows operating system in Mapudungun, the language of the Mapuche. Microsoft had not consulted with the Mapuche or sought their consent to use their language, instead working with the Chilean government to develop the resource, and yet the lawsuit came as a surprise. Technology has complicated the question of whether one can really ‘own’ a language; is a corpus of a thousand sentences scraped from the web enough to extract sufficient morphosyntactic features for downstream processing and translation? What recourse does a linguistic community have if they do not wish to entrust software companies with the development of tools in their language?

[1] Kilito [2008] explores the ethics of translation in the provocatively titled ‘Thou Shalt Not Speak My Language’, a text that, ironically, this author could only encounter and enjoy in translation.

Western discourses of language endangerment uncritically treat the development of technologies for low-resource languages as a social good, and indeed, the very framing of the 'low-resource' denomination implicitly prioritizes the gaze of the data collector, when speakers of a language have plenty of resources unto themselves in the form of idioms, jokes, fables and oral histories. On the other hand, forced assimilation and colonization led to stark decreases in numbers of native speakers of countless indigenous languages, and documentation and revitalization efforts for languages like Māori and Yup'ik have become the focus of urgent attention. Efforts such as the recent First Workshop on NLP for Indigenous Languages of the Americas also encourage work in this direction.

Adopting a participatory approach to addressing the paucity of technological resources for dozens of African languages, the Masakhane project proposes the creation of language technologies by and for Africans, thereby involving the most impacted stakeholders in guiding the research direction and the curation of data from the very beginning of the project. Masakhane creates ways for participants without formal training in computational methods to participate directly and meaningfully, and represents a promising step toward using translation technology to empower native and heritage speakers of African languages.

The creation, development, and deployment of machine translation technology are historically entangled with practices of surveillance and governance. Translation remains a political act, and data-driven machine translation developments, largely centered in industry, complicate the mechanisms by which translation shifts power. An awareness of the shortcomings of machine translation as a tool and as a paradigm is necessary for better articulating appropriate contexts for its use.

This perspective is based on a paper, "How Does Machine Translation Shift Power?", presented at the NeurIPS 2020 Resistance AI workshop.

Suggested further reading:

- Can Speech Technologies Save Indigenous Languages from Dying?

- Decolonial AI: Decolonial Theory as Sociotechnical Foresight in Artificial Intelligence

- Decolonising Speech and Language Processing

- Fair MT: Towards ethical, sustainable Machine Translation

- Countering the Far Right in Translation

- Does Information Really Want to be Free? Indigenous Knowledge Systems and the Question of Openness

Author Bio

Amandalynne Paullada is a PhD candidate in the Department of Linguistics at the University of Washington, advised by Prof. Fei Xia. Her research involves natural language processing for information extraction from scientific text, primarily in the biomedical domain, as well as investigation of the societal impact of machine learning research.

Acknowledgments

Inspiration for the title of this piece is taken from Don’t ask if artificial intelligence is good or fair, ask how it shifts power by Pratyusha Kalluri. Thanks to the audience and reviewers at Resistance AI 2020 for comments on this work, and to Jessica Dai, Alex Hanna, and Anna Lauren Hoffmann for feedback on earlier drafts of this piece.

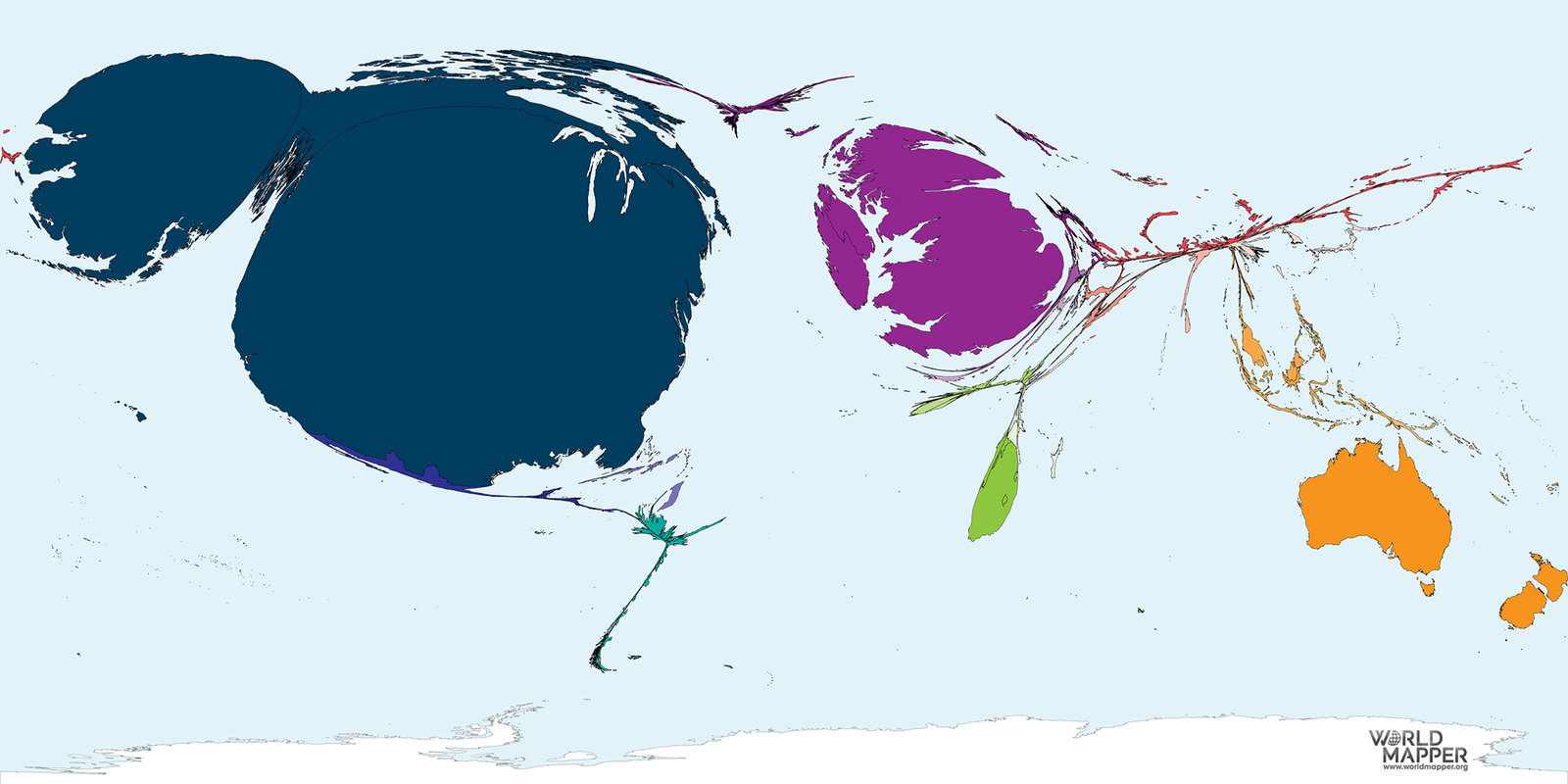

The feature image is sourced from here under a CC BY-NC-SA 4.0 license.

Citation

For attribution in academic contexts or books, please cite this work as

Amandalynne Paullada, "Machine Translation Shifts Power", The Gradient, 2021.

BibTeX citation:

@article{paullada2021shiftspower,
  author = {Paullada, Amandalynne},
  title = {Machine Translation Shifts Power},
  journal = {The Gradient},
  year = {2021},
  howpublished = {\url{https://thegradient.pub/machine-translation-shifts-power/}},
}
