Publishing a paper in academia is challenging, stimulating, and a bit baffling. Challenging because the research might fail. Stimulating because research may start out assuming one outcome and finish with a totally different one. Baffling because after the paper is written and ready, I have to find it a home in a prestigious venue.

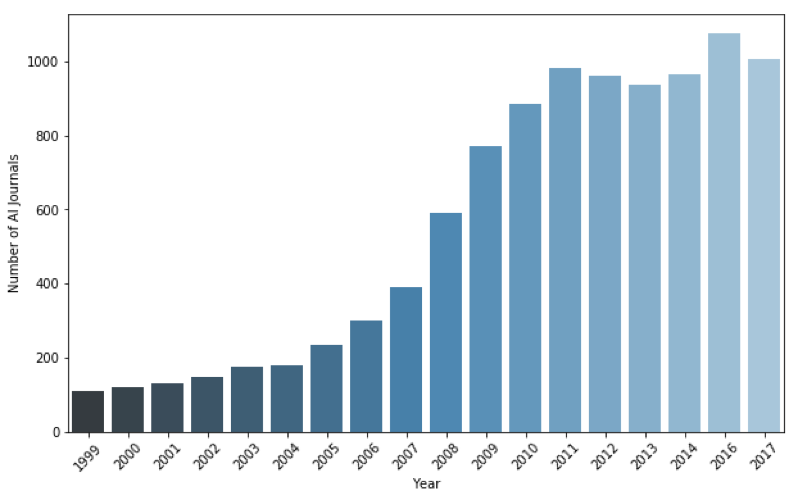

In fact, this last part is not just baffling, but illogical. I almost never know where a paper is going to be accepted. Some of my papers were published in journals that are considered prestigious, yet the same papers were rejected by other, “lesser” journals. The whole process of submitting a paper to a journal and having it reviewed is fairly random and subjective. This is especially true nowadays, when, according to SCImago Journal Rank (SJR), there are over 34,000 ranked journals, with over 1,000 ranked journals in the field of AI alone (see Figure 1). You can shop around for a publishing venue almost endlessly.

As a researcher, I became intrigued by how the academic publication process has evolved over the years. I wanted to determine whether commonly used academic metrics, such as impact factor and h-index, are actually sensible measures of academic success. Moreover, I wanted to understand what propels certain papers into the very top journals, such as Science and Nature.

To carry out this research, Carlos Guestrin and I developed an open-source code framework to analyze several large-scale datasets containing over 120 million publications, with 528 million references and 35 million authors, going back to the beginning of the 19th century. We discovered that academic publishing has changed drastically in both volume and velocity. The volume of papers has increased sharply, from about 174,000 papers published in 1950 to over 7 million papers published in 2014. In AI, for example, according to SJR, the number of papers published in ranked journals grew from about 7,000 in 1999 to over 46,000 in 2016. Furthermore, the speed at which researchers can share and publish their studies has accelerated significantly. Today's researchers can publish not only in an ever-growing number of traditional venues, such as conferences and journals, but also in electronic preprint repositories and in mega-journals that offer rapid publication times.

Our data analysis provides a detailed picture of how the academic publishing world has evolved. We uncovered a wide variety of underlying changes in academia at different levels (see our full paper, recently published in GigaScience):

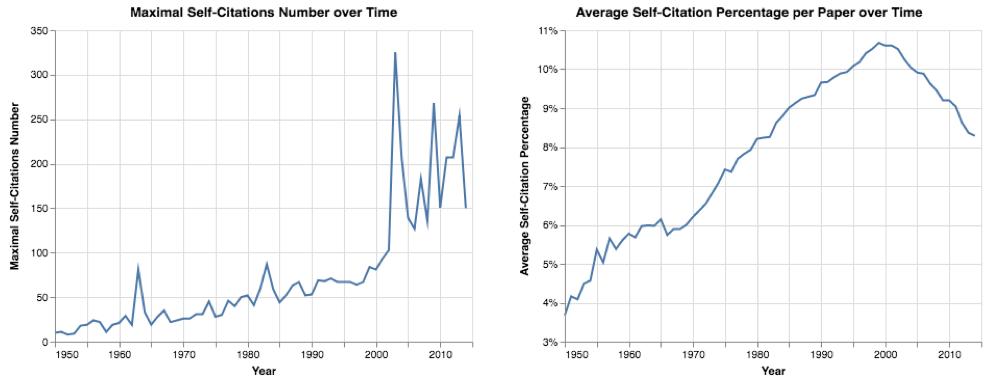

- Papers - We observed that over time, papers became shorter while other features, such as titles, abstracts, and author lists, became longer. The number of references and the number of self-citations increased considerably, yet the total number of papers without any citations also grew rapidly.

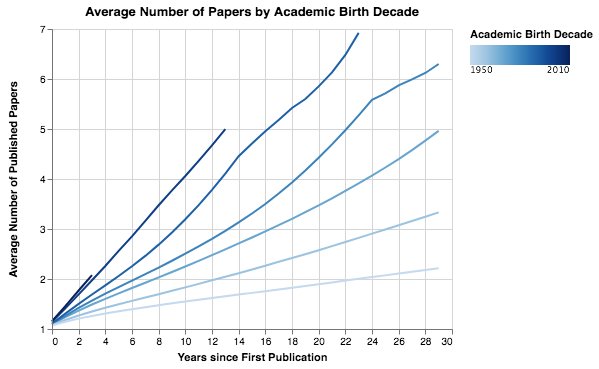

- Authors - We noticed a sharp increase in the number of new authors. These new authors are publishing at a much faster rate, relative to their career age, than authors did in previous decades. Additionally, the average number of coauthors per author increased considerably over time. Lastly, we observed a growing trend in recent years for authors to publish more in conferences.

- Journals - We saw a drastic increase in the number of ranked journals, with several hundred new ranked journals appearing each year. Furthermore, we discovered that the majority of papers published in 2017 appeared in journals ranked in the first quartile (Q1). For example, over 53% of AI papers published in 2017 were published in Q1 journals[1]. In addition, we observed that journal rankings changed significantly as average citations per document increased, while the h-index measure decreased over time. While analyzing various trends in top-ranked journals, we observed a sharp increase in the number of publications per journal, and a distinct increase in the career ages of both first and last authors. For example, in the Journal of Artificial Intelligence Research, the average career age of last authors increased from 8.7 years in 1993 to 15.8 years in 2014. Moreover, the percentage of journal publications with returning authors has jumped in recent years. For example, in Nature, over 76% of all papers published in 2014 included at least one author who had published in the journal before, compared with 45.5% in 1999.

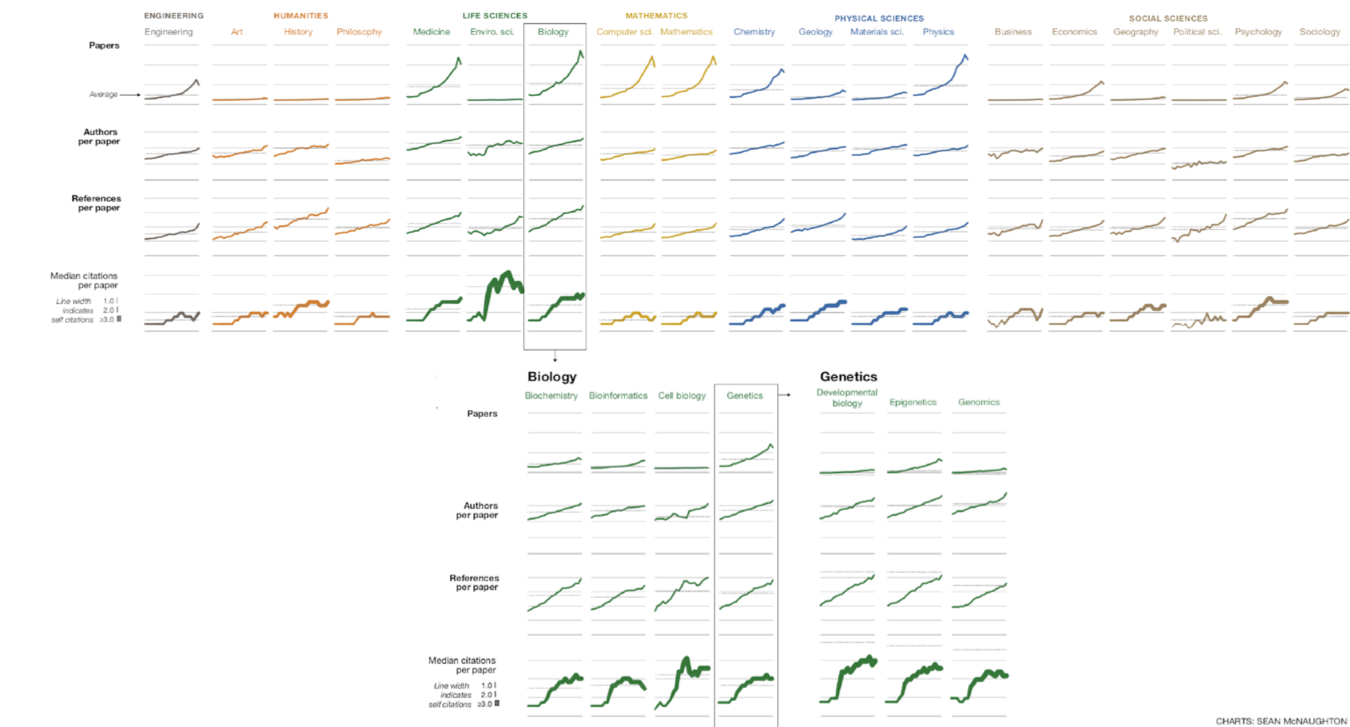

- Fields of Research - We analyzed the properties of 19 major research domains, such as art, biology, and computer science, as well as the properties of 2,600 subdomains. Our analysis revealed that properties varied widely across domains. Even subfields of the same domain had surprisingly different average numbers of citations (see Fields of Research Features Table).

We drew the following five key insights from our study:

First, these results support Goodhart's Law as it relates to academic publishing: traditional measures (e.g., number of papers, number of citations, h-index, and impact factor) have become targets and are no longer true measures of importance or impact. By making papers shorter and collaborating with more authors, researchers are able to produce more papers in the same amount of time. Moreover, most of the changes in paper structure are correlated with higher citation counts. Authors can use longer titles and abstracts, or use question or exclamation marks in titles, to make their papers more appealing to readers and increase citations, i.e., academic clickbait[2]. These results support our hypothesis that academic papers have evolved to score a bullseye on target metrics.
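To make the gaming argument concrete, here is a minimal Python sketch of the h-index: the largest h such that h of a researcher's papers have at least h citations each. It assumes a citation record is simply a list of per-paper citation counts; the function name `h_index` and the toy numbers are illustrative only and are not taken from our framework or data.

```python
def h_index(citations: list[int]) -> int:
    """Largest h such that at least h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)  # most-cited paper first
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank
        else:
            break  # counts only go down from here, so no larger h is possible
    return h


record = [10, 8, 5, 4, 4]
print(h_index(record))  # 4

# Two strategic self-citations, one to each of the two weakest papers,
# are enough to push the index up by one.
gamed = [10, 8, 5, 5, 5]
print(h_index(gamed))  # 5
```

Because the index only looks at a threshold, a handful of well-placed citations can move it, which is part of what makes it such a convenient target.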

Second, it is clear that citation count has become a target for some researchers. We observed a general increasing trend for researchers to cite their previous work in their new studies, with some authors self-citing dozens, or even hundreds, of times. Moreover, a huge number of papers (over 72% of all papers, and 25% of all papers with at least 5 references) have no citations at all after 5 years. Clearly, significant resources are spent on papers with limited impact, which may indicate that researchers are publishing more papers of poorer quality to boost their total number of publications. Additionally, we noted that different decades have very different paper citation distributions. Consequently, comparing the citation records of researchers who published in different time periods can be challenging.
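The two quantities in this paragraph, the share of papers that stay uncited and the share of references that are self-citations, can be sketched roughly as follows. This is a simplified illustration, not the code from our framework: the `Paper` record and both function names are hypothetical, a self-citation is assumed to mean that the citing and cited papers share at least one author, and the caller is assumed to pass in only papers old enough to have a full five-year citation window.

```python
from dataclasses import dataclass, field


@dataclass
class Paper:
    paper_id: str
    year: int
    authors: set[str]
    references: list[str] = field(default_factory=list)  # ids of cited papers


def uncited_share(papers, window=5, min_references=0):
    """Share of papers (with at least `min_references` references) that receive
    no citations within `window` years of publication."""
    by_id = {p.paper_id: p for p in papers}
    cited_in_window = set()
    for citing in papers:
        for ref in citing.references:
            cited = by_id.get(ref)
            if cited is not None and citing.year - cited.year <= window:
                cited_in_window.add(ref)
    eligible = [p for p in papers if len(p.references) >= min_references]
    if not eligible:
        return 0.0
    uncited = sum(1 for p in eligible if p.paper_id not in cited_in_window)
    return uncited / len(eligible)


def self_citation_share(papers):
    """Share of in-dataset references where citing and cited paper share an author."""
    by_id = {p.paper_id: p for p in papers}
    total = self_cites = 0
    for citing in papers:
        for ref in citing.references:
            cited = by_id.get(ref)
            if cited is None:  # reference points outside the dataset
                continue
            total += 1
            if citing.authors & cited.authors:
                self_cites += 1
    return self_cites / total if total else 0.0


# Toy corpus (made-up ids, years, and authors).
papers = [
    Paper("a", 2000, {"x"}),
    Paper("b", 2001, {"x", "y"}, ["a"]),
    Paper("c", 2003, {"z"}, ["a", "b"]),
    Paper("d", 2010, {"y"}, ["c"]),  # cites "c" seven years later: outside the window
]
print(uncited_share(papers))                              # 0.5
print(round(uncited_share(papers, min_references=1), 3))  # 0.667
print(self_citation_share(papers))                        # 0.25
```

The `min_references` filter mirrors the distinction in the text between all papers and papers with at least 5 references, which screens out short items such as editorials that rarely get cited.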

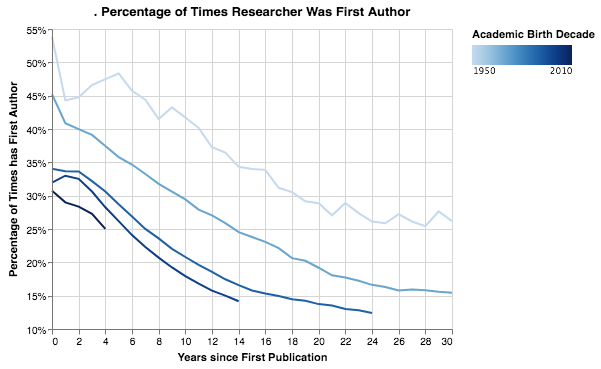

Third, we observed exponential growth in the number of new researchers who publish papers, likely due to career pressures. We also observed that early-career researchers tend to publish considerably more than researchers of previous generations did at the same career age. Furthermore, the percentage of early-career researchers publishing as first author(s) is considerably lower than it was in previous generations. In a culture of “publish or perish,” researchers are incentivized to publish more by increasing collaboration (and being added to more author lists) and by publishing more conference papers than in the past.

Fourth, certain trends are shaping the landscape of publishing in top journals. The number of papers in selected top journals has increased sharply, along with the career age of the authors and the percentage of returning authors. The number of submissions to journals like Science has soared in recent years; however, many of these journals mainly publish papers in which at least one of the authors has previously published in the journal. We believe this situation is also a result of Goodhart's Law. Researchers are targeting high-impact-factor journals, so the yearly volume of papers sent to these top journals has increased considerably. Overwhelmed by the volume of submissions, editors at these journals may choose safety over risk and select only papers written by well-known, experienced researchers.
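Similarly, the returning-author percentage for a journal can be sketched in a few lines of Python. This is a hypothetical helper, not part of our released framework; it assumes each paper is given as a (year, authors) pair for a single journal and treats an author as "returning" only if they published in that journal in an earlier year. The first years covered by any dataset will understate the true rate, because earlier publications are missing.

```python
from collections import defaultdict


def returning_author_share(papers):
    """Per year, the share of a journal's papers with at least one author who
    had already published in the same journal in an earlier year.

    `papers` is an iterable of (year, authors) pairs for a single journal.
    """
    by_year = defaultdict(list)
    for year, authors in papers:
        by_year[year].append(set(authors))

    seen = set()  # authors with a paper in this journal in an earlier year
    shares = {}
    for year in sorted(by_year):
        batch = by_year[year]
        returning = sum(1 for authors in batch if authors & seen)
        shares[year] = returning / len(batch)
        for authors in batch:  # update only after the whole year is scored
            seen |= authors
    return shares


# Toy journal history (made-up author ids).
journal = [
    (1999, ["a", "b"]), (1999, ["c"]),
    (2000, ["a", "d"]), (2000, ["e"]),
    (2001, ["d"]), (2001, ["c", "f"]),
]
print(returning_author_share(journal))  # {1999: 0.0, 2000: 0.5, 2001: 1.0}
```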

Lastly, using citation-based measures to “discriminate between scientists”[3] is like comparing apples to oranges. By comparing academic metrics across 2,600 research fields and subfields, we observed vast diversity in the properties of papers across different domains. Even subdomains of the same domain presented a wide range of properties, including number of references and median number of citations. These results indicate that measures such as citation count, h-index, and impact factor are useless for comparing researchers in different fields, and even for comparing researchers in the same subfield. Moreover, using these measures to compare academic entities can drastically affect the allocation of resources and consequently damage research. For example, to improve their world ranking, universities might choose to invest in computer science and biology faculty rather than faculty in less-cited research fields, such as economics and psychology. Even within a department, the selection of new faculty members can be biased by these target measures. A computer science department might opt to hire researchers in a highly cited subfield instead of researchers in a less-cited subfield. Over time, this may unfairly favor high-citation research fields at the expense of other, equally important fields.

It is time to reconsider how we judge academic papers. Citation-based measures have been the standard for decades, but these measures are far from perfect. In fact, our study shows that the validity of citation-based measures is being compromised and their usefulness is lessening. Goodhart's Law is in action in the academic publishing world.

Special thanks to Carol Teegarden, Steven Ban and Hugh Zhang for their comments.

Michael Fire is an Assistant Professor at the Software and Information Systems Engineering Department at Ben-Gurion University of the Negev (BGU), and the founder of the Data Science for Social Good Lab.

Citation

For attribution in academic contexts or books, please cite this work as

Michael Fire, "Goodhart’s Law: Are Academic Metrics Being Gamed?", The Gradient, 2019.

BibTeX citation:

@article{FireGoodhartsLaw2019,

author = {Fire, Michael},

title = {Goodhart’s Law: Are Academic Metrics Being Gamed?},

journal = {The Gradient},

year = {2019},

howpublished = {\url{https://thegradient.pub/over-optimization-of-academic-publishing-metrics/}},

}


[1] For this calculation, we computed the percentage among AI papers that, according to SJR, have a valid “SJR Best Quartile” value. Overall, of the 19,590 papers published in 2017, 10,389 were published in a Q1 journal according to their SJR Best Quartile value.

[2] G. Lockwood, “Academic clickbait: Articles with positively-framed titles, interesting phrasing, and no wordplay get more attention online,” The Winnower, vol. 3, Jun. 2016.

[3] S. Lehmann, A. D. Jackson, and B. E. Lautrup, “Measures for measures,” Nature, vol. 444, no. 7122, pp. 1003–1004, Dec. 2006.