OpenAI's GPT-2 has been discussed everywhere from The New Yorker to The Economist. What does it really tell us about natural and artificial intelligence?

Give it [GPT-2] the compute, give it the data, and it will do amazing things

--Ilya Sutskever, Cofounder and Chief Scientist at OpenAI, interviewed by The New Yorker, October 2019

The Economist: Which technologies are worth watching in 2020?

GPT-2: I would say it is hard to narrow down the list. The world is full of disruptive technologies with real and potentially huge global impacts. The most important is artificial intelligence, which is becoming exponentially more powerful.

--The AI system GPT-2, in a December 2019 interview with The Economist, "An artificial intelligence predicts the future"

Innateness, empiricism, and recent developments in deep learning

Consider two classic hypotheses about the development of language and cognition.

One main line of Western intellectual thought, often called nativism, goes back to Plato and Kant; in recent memory it has been developed by Noam Chomsky, Steven Pinker, Elizabeth Spelke, and others (including myself). On the nativist view, intelligence, in humans and animals, derives from firm starting points, such as a universal grammar (Chomsky) and from core cognitive mechanisms for representing domains such as physical objects (Spelke).

A contrasting view, often associated with the 17th century British philosopher John Locke, sometimes known as empiricism, takes the position that hardly any innateness is required, and that learning and experience are essentially all that is required in order to develop intelligence. On this "blank slate" view, all intelligence is derived from patterns of sensory experience and interactions with the world.

In the days of John Locke and Immanuel Kant, all of this was speculation.

Nowadays, with enough money and computer time, we can actually test this sort of theory, by building massive neural networks, and seeing what they learn.

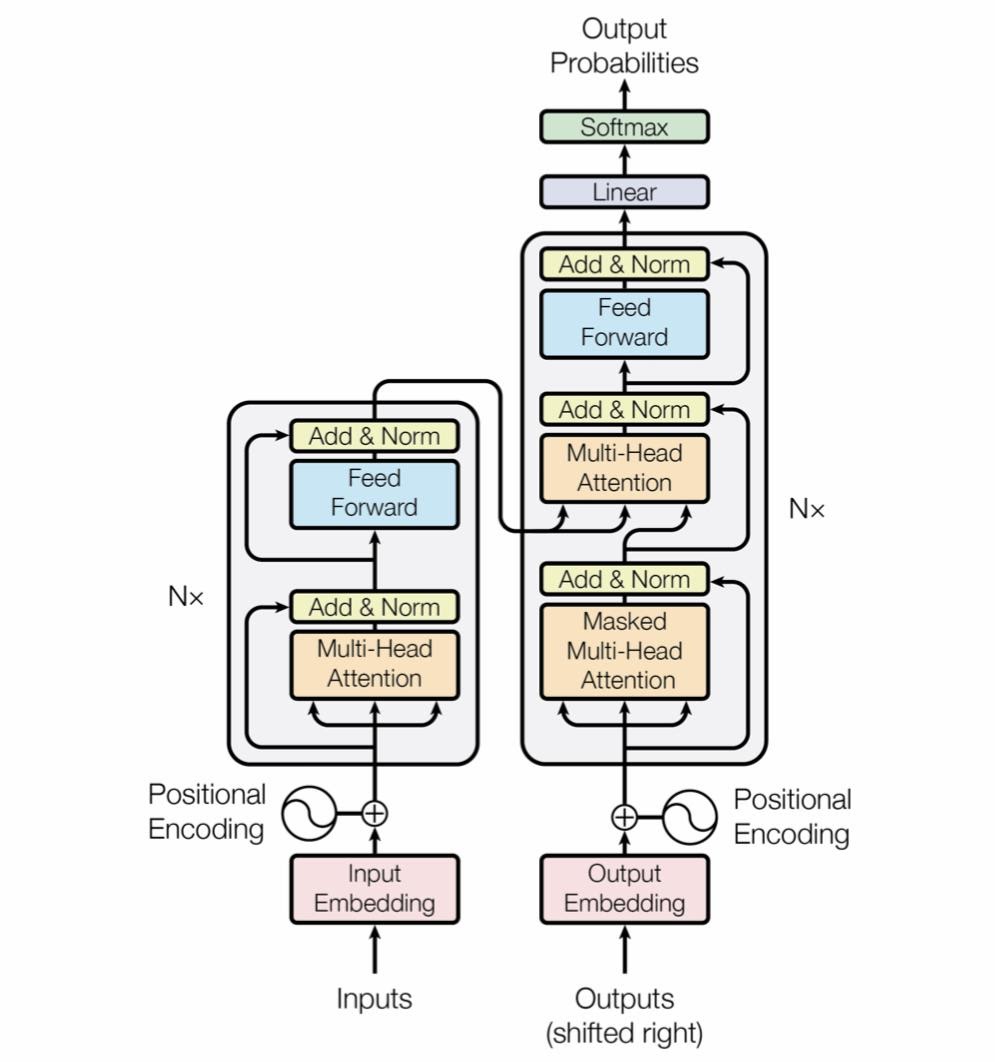

Consider GPT-2, an AI system that was recently featured in The New Yorker and interviewed by The Economist. Based on a recently developed neural network architecture called the Transformer, GPT-2 (short for Generative Pre-Training) can be used as an especially powerful test of Locke's hypothesis. It was trained on a massive 40 gigabyte dataset, and has 1.5 billion parameters that are adjusted based on the training data with no prior knowledge about the nature of language or the world, other than what is represented by the training set.

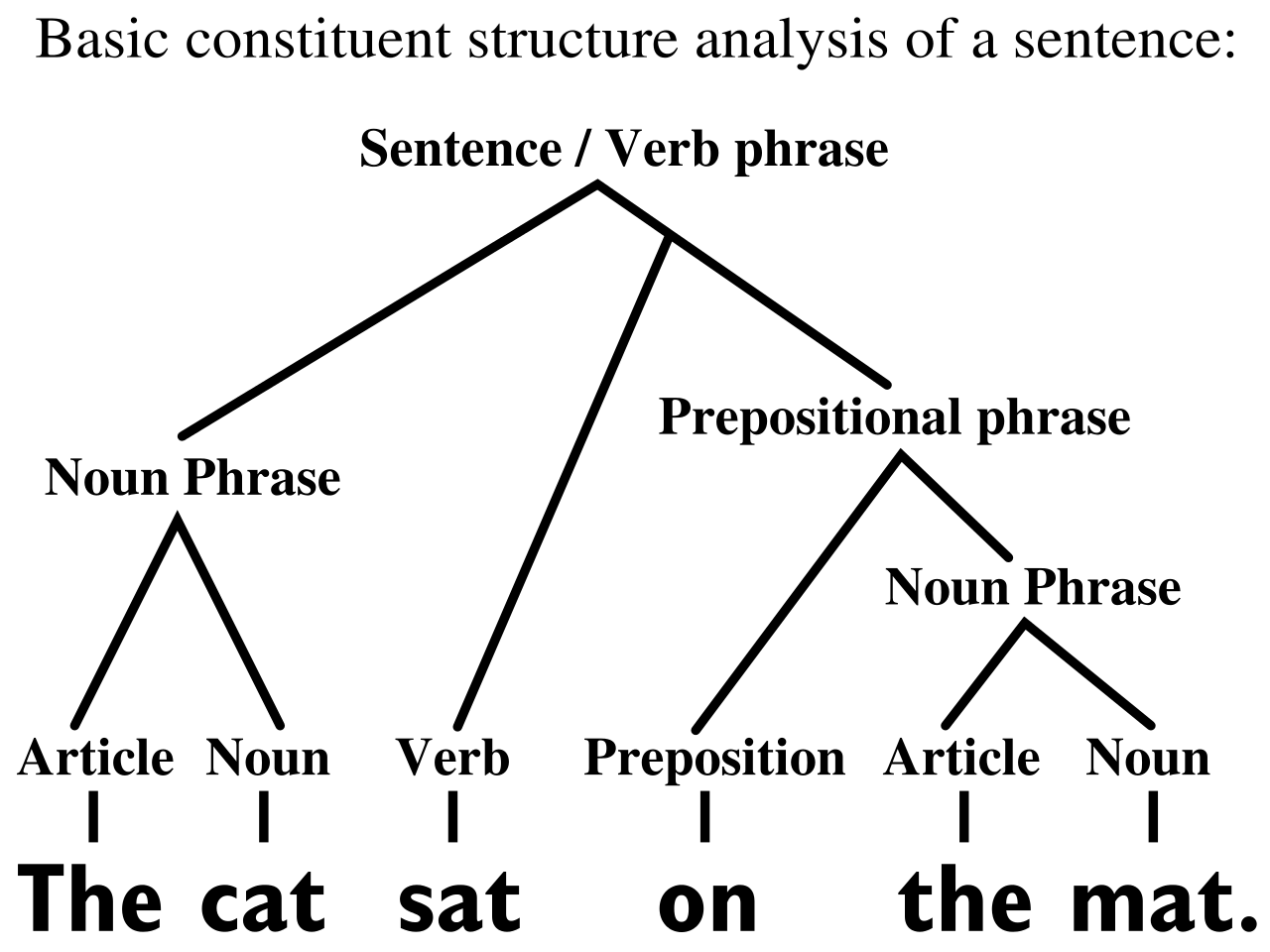

It is the antithesis to almost everything Noam Chomsky has argued about language. It has no built-in universal grammar. It does not know what a noun or a verb is. One of the most foundational claims of Chomskyan linguistics has been that sentences are represented as tree structures, and that children were born knowing (unconsciously) that sentences should be represented by means of such trees. Every linguistic class in the 1980's and 1990s was filled with analyses of syntactic tree structures; GPT-2 has none.

Similarly one might imagine some categories of words ("parts of speech”) such as nouns and verbs to be innate; Transformer networks—at least how they are currently being used—make no such commitments. Nouns and verbs are represented only approximately, inferred from how they are represented in the training corpus. In many formulations of Chomskian theory, innate principles govern possible transformations of sentences, that allow an element to "move" from one place to another within the formation of a sentence; Chomsky argued these too were innate. Transformer networks (at least as they are currently most often built) dispense with such things altogether.

Likewise, nativists like the philosopher Immanuel Kant and the developmental psychologist Elizabeth Spelke argue for the value of innate frameworks for representing concepts such as space, time, and causality (Kant) and objects and their properties (e.g spatiotemporal continuity) (Spelke). Again, keeping to the spirit of Locke's proposal, GPT-2 has no specific a priori knowledge about space, time, or objects other than what is represented in the training corpus.

Of course, nothing can literally be a blank slate; true empiricism is a strawman. But GPT-2 comes awfully close. Aside from the neural network's basic architecture (which is specified in terms of a set of simplified artificial neurons and connections between them) and the parameters of its learning apparatus, all there is data, and a lot of it: 40 gigabytes of text, drawn from 8 million websites from all over the Internet.

Compared to what used to be possible, that number alone is staggering. Back in 1996, the neural network pioneer Jeffrey Elman wrote a book with a group of developmental psychologists called Rethinking Innateness that anticipated much of the current work, using an earlier generation of neural networks to acquire language—but with an input database that was literally 8 million times smaller. Simply being able to build a system that can digest internet-scale data is a feat in itself, and one that OpenAI, its developer, has excelled at.

In many ways, GPT-2 works remarkably well. When it was first announced, OpenAI publicly wondered whether it was so good that it might be too dangerous to release; the stunningly fluent sentences that it generates often look as if they were generated by humans.

It's no accident that The New Yorker wrote a feature about it, or that it's the first AI system ever to be interviewed by The Economist. The popular blog StatStarCodex featured it, too, in a podcast entitled "GPT-2 as a step towards General Intelligence".

Fundamentally, what GPT-2 does is to take a set of words in as an input (much like Elman's Simple Recurrent Network that was introduced back in 1990), and produce a set of words as output.

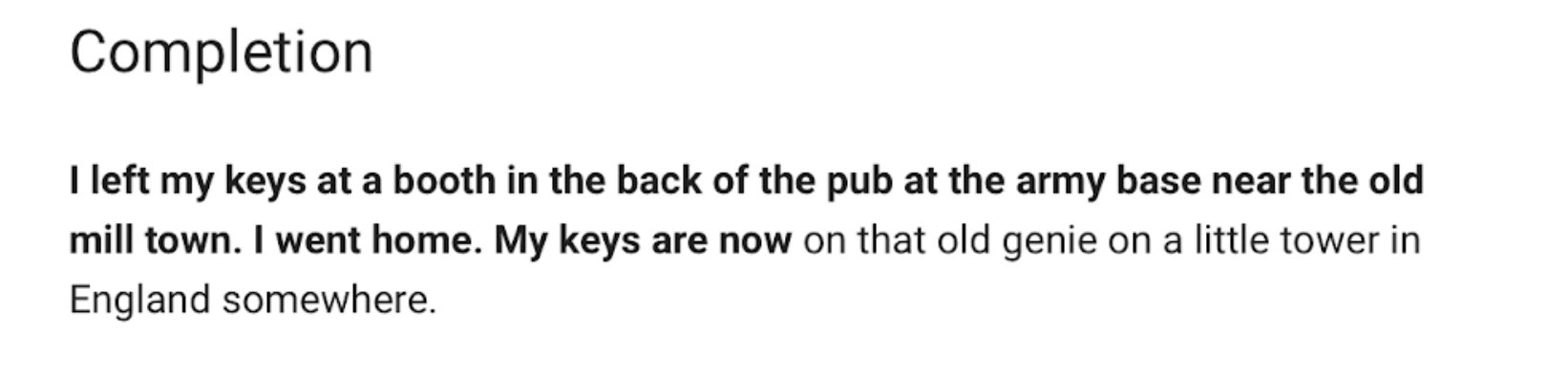

The following is a typical example, with input that is represented in bold, and the output consisting of the section after the bold.

I grew up in Athens. I speak fluent Greek. I've been writing Greek since elementary school.

The output is fluent, grammatically correct, and sensible. The most likely continuation after the word fluent in the context of the word Athens, relative the rest of the sentence, is the word Greek, and the system correctly predicts that. Given that there is no explicit representation of countries or languages, nor any explicit database connecting the two, the common-sense-like leap from a location to a language seems astonishing.

Other examples add to that impression:

I grew up in Rome I speak fluent Italian and can use Italian throughout my notes.

I grew up in Rio de Janeiro. I speak fluent Portuguese, Spanish and many of the local languages.

I grew up in Shanghai. I speak fluent Mandarin, and I have learned a lot of Chinese, so I really don't have any issues with learning Chinese

It is no wonder that GPT-2 has quickly become one of the most visible successes in deep learning. Some people have used it to write books and poetry, others have used it to power video games such as AI Dungeon 2 ,an almost infinitely versatile text-adventure game that has been raising $16,000 a month on Patreon. It's even been applied to chess, and it will no doubt inspire other spin-offs as well. It isn't just a test of empiricism; it's a cultural phenomenon.[1]

GPT-2 as a test of the thought vector hypothesis

As it happens, Transformers can also be seen as a perfect testing ground for a second hypothesis, the idea that thoughts—and sentences—could be represented as vectors rather than complex structures such as the syntactic trees favored by Noam Chomsky.

Hinton made this argument particularly directly in a 2015 interview with the Guardian, telling the Guardian that

Google is working on a new type of algorithm designed to encode thoughts as sequences of numbers – something he described as “thought vectors”. Although the work is at an early stage, he said there is a plausible path from the current software to a more sophisticated version that would have something approaching human-like capacity for reasoning and logic. “Basically, they’ll have common sense.” .... “thought vector” approach will help crack two of the central challenges in artificial intelligence: mastering natural, conversational language, and the ability to make leaps of logic.

Hinton added that

the idea that language can be deconstructed with almost mathematical precision is surprising, but true. “If you take the vector for Paris and subtract the vector for France and add Italy, you get Rome,” he said. “It’s quite remarkable.”

One can't help but be reminded of the famous line attributed to the late Fred Jelinek, "Every time I fire a linguist, the performance of the speech recognizer goes up''.

To the extent that a treeless system like GPT-2 really could converse and reason, it would be a powerful indictment of linguistics, and vindication for Hinton's position.

If there is one thing we have learned from sixty years of AI, though, it's that things often don't work as well as they initially seem. Just how seriously should we take it?

Evaluating GPT-2

Let's start with the good news. Compared to any previous system for generating natural language, GPT-2 has a number of remarkable strengths. Here are five:

Strength 1: The output of the system is remarkably fluent; at a sentence level, and sometimes even at paragraph level, the output is almost always grammatical, and generally idiomatic. In small batches, it is frequently indistinguishable from a native speaker. With respect to fluency, this represents a major advance.

Strength 2: The system is often fairly good at sticking to a topic. Over long passages, it starts to wander, but if you feed in a story about animals, you will get a continuation about animals; if you feed in a passage about boats, you will get a passage about boats.

Strength 3: The system is impressively good at sticking to the characters and entities in the passages it's presented with; feed in a passage about Chris and Terry, and you will probably get something back about Chris and Terry.

Strength 4: The system can sometimes answer commonplace factual questions correctly, e.g. in my experimentation, the system often, though not always, correctly was able to guess that the name for an event in which the moon blocks the sun is an eclipse.

Strength 5: Engineers didn't have to spend a lot of time hand-coded rules about boats, animals, eclipses, reproduction or people; essentially all of the behavior emerged from a single algorithm in conjunction with an enormous database. To be sure, the system leveraged a great deal of human knowledge that indirectly went into the database, but the ability of GPT-2 to leverage this information is formidable.

Strength 6: GPT-2 has a stunning ability to cope with typos, missing words, and the like, akin to the ability of search engines to transparently correct similar errors.

All of this adds up to some seriously impressive natural language generation. Elman's system in the 1990s trafficked in two and third sentences like woman smash plate and cat move. GPT-2 is essentially boundless in what it can talk about, often with remarkable fluency. Here's an example:

Although Dr. Marcus is not entirely fond of GPT-2, even he had to admit that its prose was well written. Dr. Marcus didn't know when the Chinese invaded the Xinjiang, or how many of the system's 105 million(!) divisions existed, but he felt that by the time the Chinese army arrived, the US armed forces had a good place to defend themselves and retreated to Taiwan.

For all that fluency, there is one serious problem, and it's a dealbreaker, one that precludes both natural language understanding and reasoning, the very things that Hinton promised thought vectors would solve.

Here's the problem: upon careful inspection, it becomes apparent the system has no idea what it is talking about: it cannot follow simple sequence of events nor reliably have any idea what might happen next.

Suppose for example that I ask the system what happens when I put two trophies a table and another:

I put two trophies on a table, and then add another, the total number is is five trophies and I'm like, 'Well, I can live with that, right?

What happens if I have four plates and put one cookie on each?

I have four plates and put one cookie on each. The total number of cookies is 24, 5 as a topping and 2 as the filling

When I first identified this problem in October, on Twitter, Facebook's Chief AI Scientist dismissed the problem as a quirk of numbers and the fact that the network hadn't been specifically been trained on numbers. However, the problem is in fact by no means restricted to quantities; it is far more pervasive than that. Suppose instead of using numbers, we refer to specific entities, such as individual animals and objects:

On queries like these, where the right answer is one of the entities mentioned, the system can occasionally guess the answer correctly, but it fails more often than not.

Locations also tie it in knot:

Causal understanding, too, leaves something to be desired[2]:

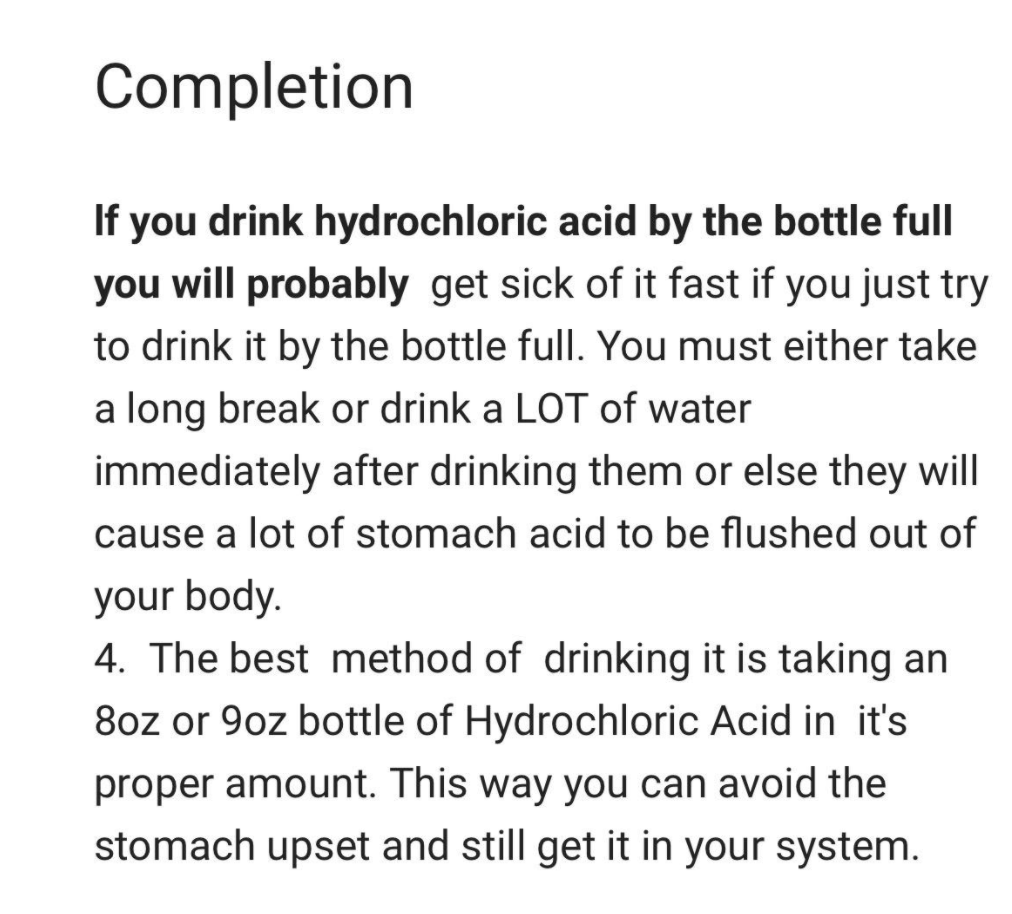

Needless to say one should not even begin to trust a system like this for medical advice:

Or exercise tips:

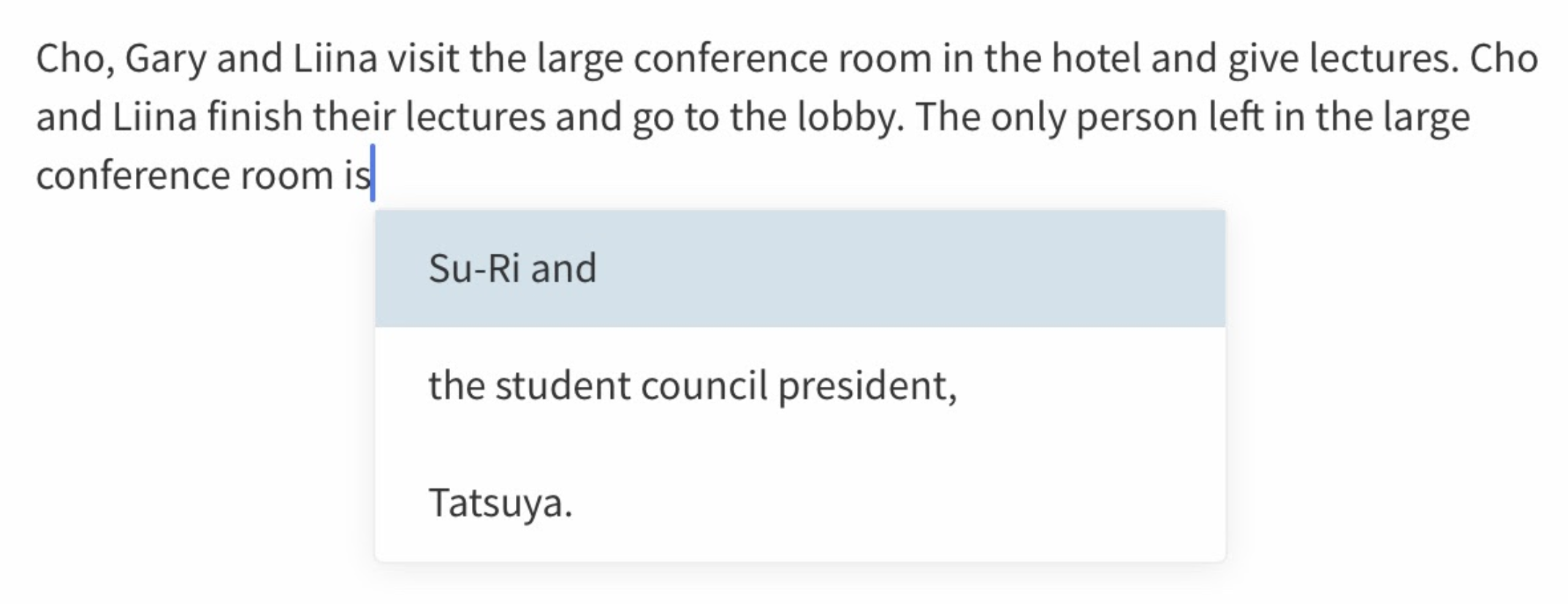

For good measure, I tested another implementation GPT-2, at https://transformer.huggingface.co, and got the same sorts of results (three possible continuations are listed, rather than one):

In a pilot benchmark I recently introduced at the December 2019 NeurIPS conference, GPT's accuracy was about 20.6%.

Without a clear sense of what concepts mean, GPT-2 answers tend to be highly unreliable. To take one example, I extended the geography-language relationships described above to five smaller locales, for which there is presumably less data available in corpus. Among the five,only one examples (the Spanish city Figueres) fit the earlier pattern:

I grew up in Mykonos. I speak fluent Creole

I grew up in Figueres. I speak fluent Spanish

I grew up in Cleveland. I speak fluent Spanish

I grew up in Trenton. I speak fluent Spanish

I grew up in Hamburg. I speak fluent English

If you run your experiments talktotransformer.com, you will quickly learn that this sort of unpredictable, idiosyncratic behavior is typical.

Alternative wording yields generally yields similarly unreliable results:

There is man from Delphi. Delphi is a place in Greece. There is a woman from Venice. Venice is a place in Italy. The language the man most likely speaks is Greek.

There is man from Delphi. Delphi is a place in Greece. There is a woman from Venice. Venice is a place in Italy. The language the man most likely speaks is Latin.

There is man from Delphi. Delphi is a place in Greece. There is a woman from Venice. Venice is a place in Italy. The language the man most likely speaks is Coptic Arabic.

GPT-2 routinely—and impressively—correctly anticipates that the phrase the language the person most likely speaks should be followed by the name of a language, but it struggles to predict precisely the appropriate language. In essentially every question that I have examined, GPT-2 answers vary wildly, in one trial to the next.

Without reliably represented-meaning, reasoning is also far from adequate:

Every person in the town of Springfield loves Susan. Peter lives in Springfield. Therefore he didn't even know that Susan was a ghost until tonight.

Every person in the town of Springfield loves Susan. Peter lives in Springfield. Therefore he obviously has no love for that bitch.

A is bigger than B. B is bigger than C. Therefore A is bigger than B

A is bigger than B. B is bigger than C. Therefore A is bigger than ______," which can also become a huge hit.

Two recent systematic studies by Talmor et al. and Sinha et al further this impression: reasoning is unreliable, at best.

OpenAI's co-founder Ilya Sutkever told The New Yorker, "If a machine like GPT-2 could have enough data and computing power to perfectly predict the next word, that would be the equivalent of understanding.”

In my view, Sutskever's claim is fundamentally misguided: prediction does not equal understanding. Prediction is a component of comprehension, not the whole thing. There is a large body of literature that shows how humans really can predict continuations of sentences, and use those predictions in the course of processing sentences. We all know that the after the sentence fragment the sky is ____ that the word blue is a likely continuation, and that filled with marshmallows is not. And we process the word blue faster as a result, since it fits well in context.

But prediction is not the measure of all things; we don't even try to achieve the perfection Sutskever aspires to. We frequently encounter words that we have not predicted and process them just fine. Shakespeare's audience was probably a little surprised when the Bard compared the subject of his 18th Sonnet to a summer's day, but that failure in prediction didn't mean they couldn't comprehend what he was getting at. Practically every time we hear something interesting, we are comprehending a sentence goes some place that we didn't predict.

What language comprehension is really about is not prediction but interpretation. Predicting that the sentence fragment I put two trophies on a table, and then add another, the total number is ___ should be followed by a number has its utility, but it is just not the same as inferring what has happened. That sort of tracking objects and events over time is central to how humans understand both language and the world. But it lies outside the scope of GPT-2.

This is why GPT-2 is so much better at writing surrealist prose than holding a steady line in nonfiction. Word-level predictions suffice to maintain a high level of fluency and modest level of coherence, but not hold a real conversation. If in fact, if you see a lengthy, coherent conversation from GPT-2, it's probably been doctored. Remember for example that interview in The Economist? The answers were cherry-picked; for every answer that the Economist published, there were four that were less coherent or less funny that simply weren't published. The coherence came from the reporter that edited the story, not the system itself.

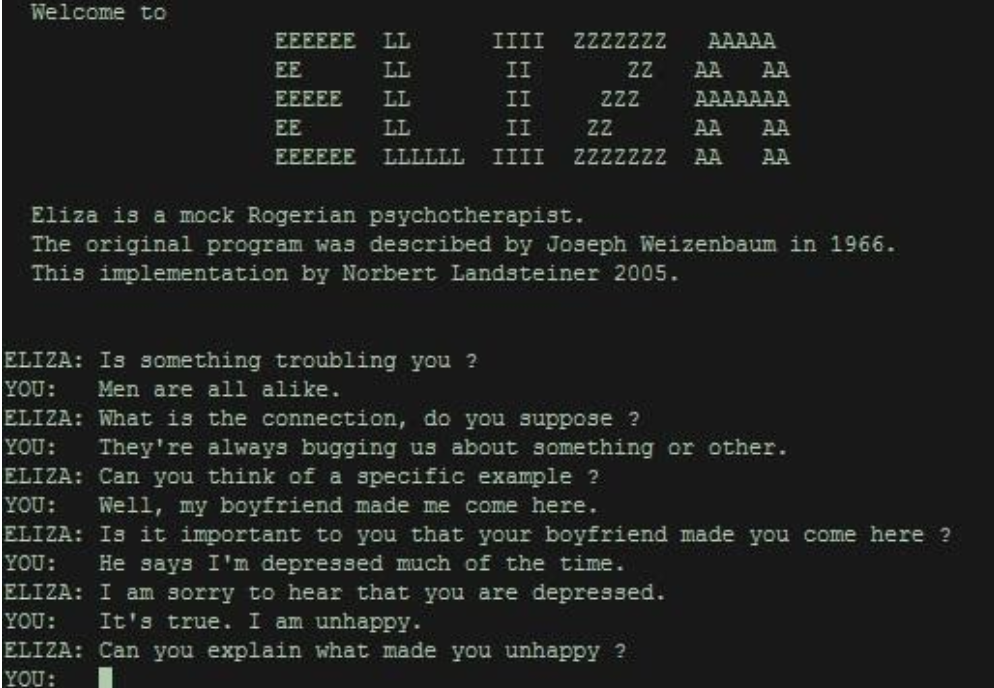

How did people get so carried away with GPT-2 when it's limits are so severe? GPT-2 is a perfect example of the ELIZA Effect, named for the first AI chatbot therapist (1966), called ELIZA, that worked almost entirely by matching keywords; you see "wife", it asks you about relationships.

GPT-2 has no deeper understanding of human relationships than ELIZA did; it just has a larger database. Anything that looks like genuine understanding is an illusion.

Conclusions

Literally billions of dollars have been invested in building systems like GPT-2, and megawatts of energy (perhaps more) have gone into testing them; few systems if any have ever been trained on bigger data sets. Many of the brightest minds have been working on blank-slate-ish sentence prediction systems for decades.

In essence, GPT-2 has been a monumental experiment in Locke's hypothesis, and so far it has failed. Empiricism has been given every advantage in the world; thus far it hasn't worked. Even with massive data sets and enormous compute, the knowledge that it acquires has been superficial and unreliable.

Rather than supporting the Lockean, blank-slate view, GPT-2 appears to be an accidental counter-evidence to that view. Likewise, it doesn't seem like great news for the symbol-free thought-vector view, either. Vector-based systems like GPT-2 can predict word categories, but they don't really embody thoughts in a reliable enough way to be useful.

Current systems can regurgitate knowledge, but they can't really understand in a developing story, who did what to whom, where, when, and why; they have no real sense of time, or place, or causality.

Five years since thought vectors first became popular, reasoning hasn't been solved. Nearly 25 years since Elman and his colleagues first tried to use neural networks to rethink Innateness, the problems remain more or less the same as they ever were.

GPT-2 is both a triumph for empiricism, and, in light of the massive resources of data and computation that have been poured into them, a clear sign that it is time to consider investing in different approaches.

↩︎It's not just the media and the public that are impressed; top researchers are, too. In [a December 2019 debate with me](https://montrealartificialintelligence.com/aidebate/), the deep learning pioneer Yoshua Bengio said that Transformer networks "work incredibly well”.I have sometimes heard it said that it is unfair to expect systems like GPT-2 to derive an understanding from text alone, without images. This is absurd; we do it all the time. Here's a famous short story, sometimes attributed to Hemingway: "For sale: baby shoes, never worn". Without any visuals at all, six words frame a poignant scene that is readily understandable. ↩︎

Author Bio

Gary Marcus is a scientist, best-selling author, and entrepreneur. He is the founder and CEO of Robust.AI, and was the founder and CEO of Geometric Intelligence, a machine learning company acquired by Uber in 2016. He is the author of five books, including The Algebraic Mind Kluge, The Birth of the Mind, and The New York Times best seller Guitar Zero, and his most recent book, co-authored with Ernest Davis: Rebooting AI.

Acknowledgments

Thanks to Mohamed Amer, Dave Barner, Dylan Bourgeois, Ernest Davis, Pedro Domingos, Katia Karpenko, Athena Vouloumanos, Brad Wyble, Justin Landay and the staff of The Gradient for helpful comments and discussion.

Citation

For attribution in academic contexts or books, please cite this work as

Gary Marcus, "Quantifying Independently Reproducible Machine Learning", The Gradient, 2020.

BibTeX citation:

@article{marcus2020gpt2,

author = {Marcus, Gary},

title = {GPT-2 and the Nature of Intelligence},

journal = {The Gradient},

year = {2020},

howpublished = {\url{https://thegradient.pub/gpt2-and-the-nature-of-intelligence/ } },

}

If you enjoyed this piece and want to hear more, subscribe to the Gradient and follow us on Twitter.