With the success of deep learning, algorithms have pushed into another domain that humans thought was safe from automation: the creation of compelling art.

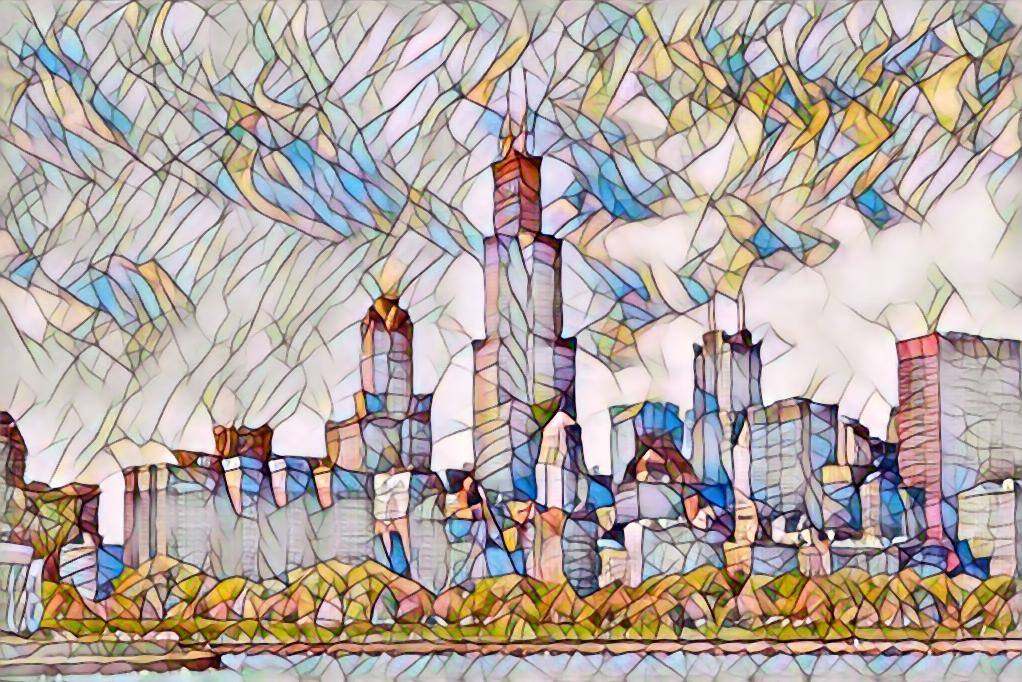

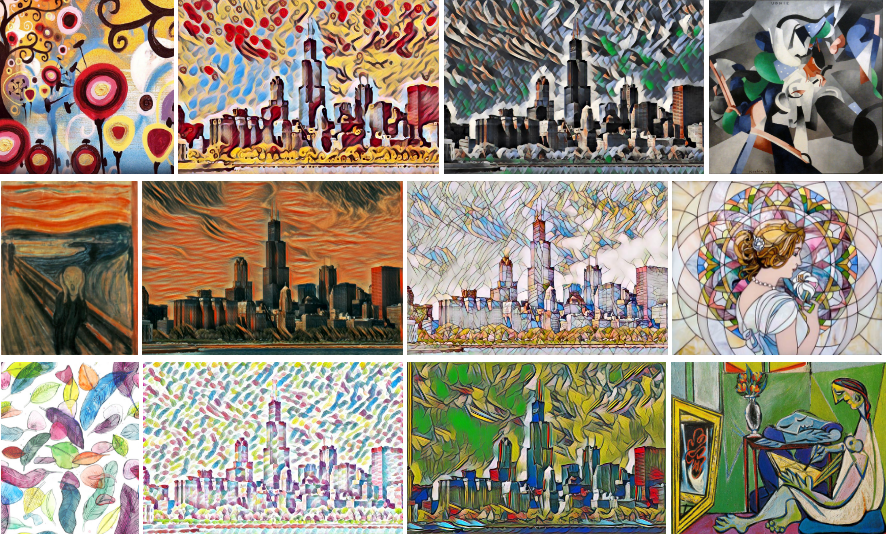

AI-generated art has improved dramatically over the past several years, and the results can be seen in competitions like RobotArt and NVIDIA's DeepArt:

But while these models are certainly an impressive technical accomplishment, a contentious point of discussion is whether AI and machine learning models are truly creative in the way humans are. Some have argued that it isn’t really creative to build mathematical models of pixels in an image or to identify sequential dependencies in the structure of songs. AI, they claim, lacks the human touch. But it’s also not clear that human brains are doing anything more impressive. How do we know that the artistic spark of a painter or musician isn’t actually a mathematical model, trained — like a neural network — through constant practice?

While the question of whether AI creativity is real creativity is unlikely to be resolved anytime soon, studying how these models work can shed some light on what this question entails. In this piece, we’ll dive into several state-of-the-art examples of machine-generated visual art and music. Specifically, we’ll discuss style transfer and music modeling, as well as where I think the field is heading.

Style Transfer

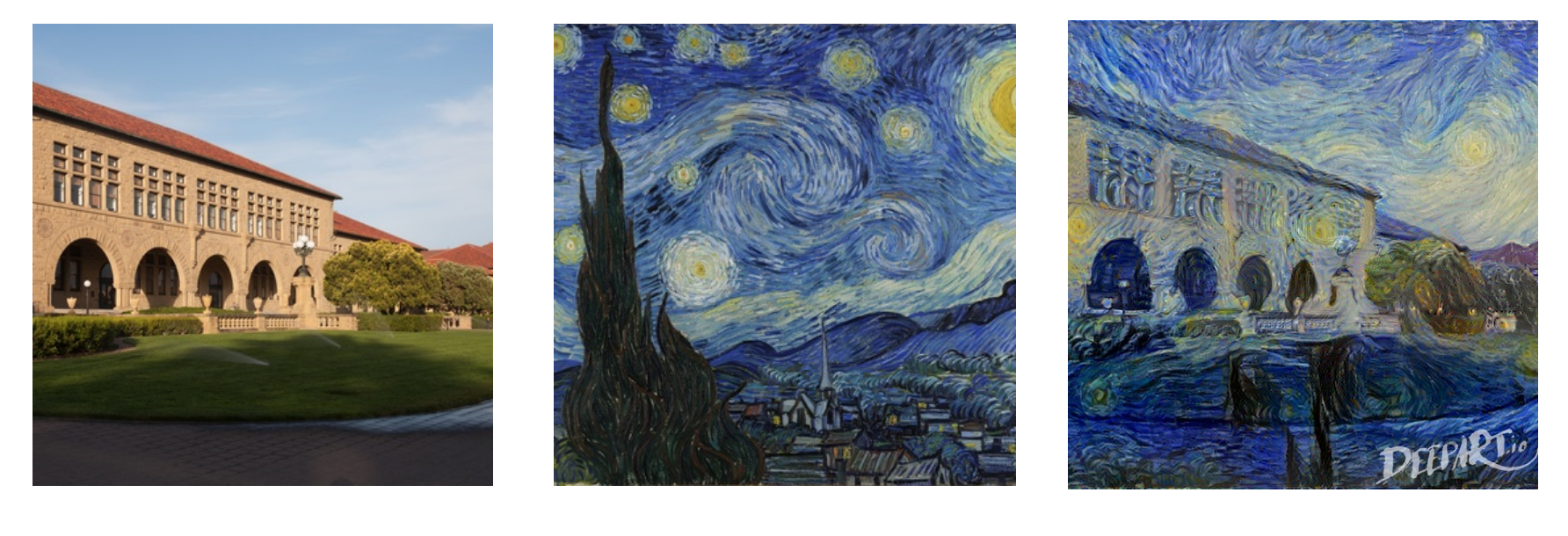

You might already be familiar with style transfer, arguably the most famous kind of AI-generated art. Here’s an example:

What’s going on here? We can think of an image as having two major components: content and style. The content is what is represented in the image (the Main Quad at Stanford University), and the style is the way the image is drawn (the swirling, colorful style of Van Gogh’s Starry Night). Style transfer is the task of re-creating one image in the style of another.

Suppose we have images $c$ and $s$, where $c$ represents the image we want to take the content from and $s$ represents the image we want to take the style from. Let $\hat{y}$ be the generated image. Intuitively, we want $\hat{y}$ to have the same content as $c$ and the same style as $s$. From a machine-learning perspective, we can formulate this as minimizing the content loss between $\hat{y}$ and $c$, as well as the style loss between $\hat{y}$ and $s$.

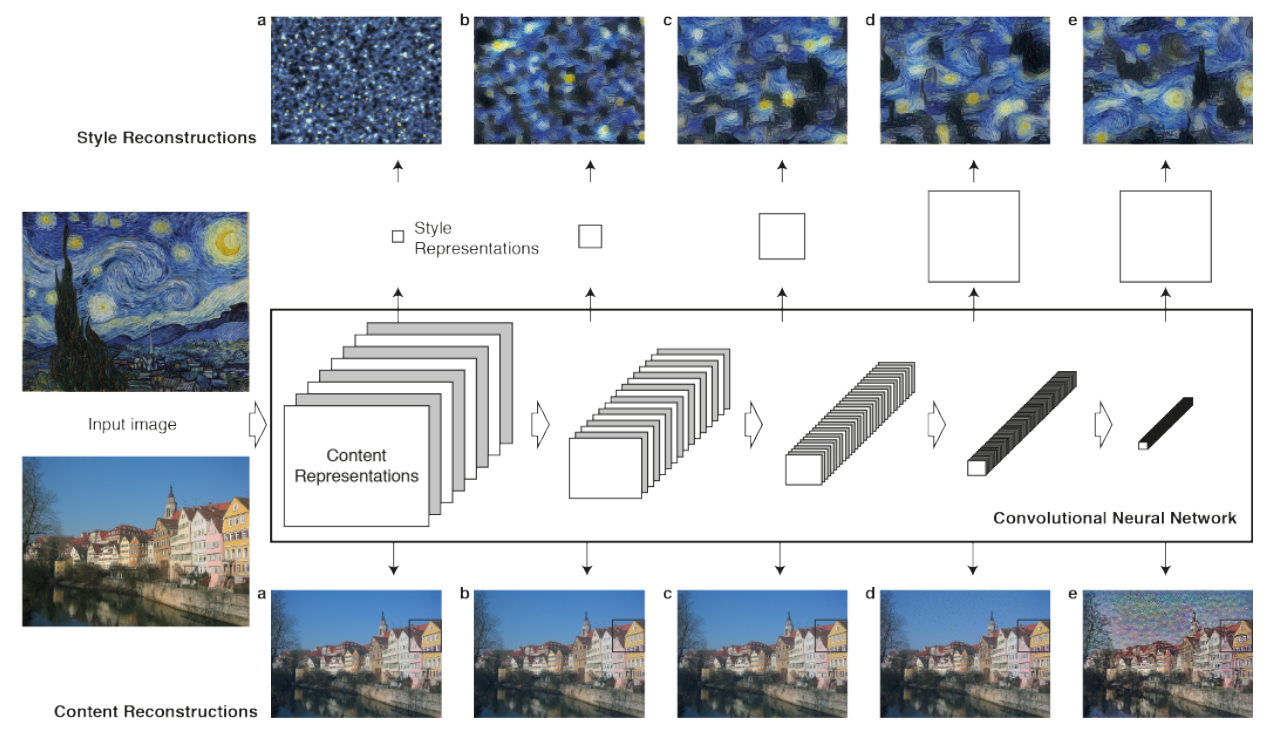

But how do we come up with these loss functions? That is, how do we approximate the concepts of content and style mathematically? Gatys, Ecker, and Bethge proposed in their landmark style transfer paper[1] that the answer lies in the structure of convolutional neural networks (CNNs).

Let's say you feed an image through a CNN that’s already been trained to classify images. [2] Due to this initial training, each successive layer of this network has been set up to extract more complex image features than the last. The authors find that the image’s content can be represented by the feature maps of a layer in the network. Then its style can be represented by the correlations between the feature map’s channels. These correlations are stored in a matrix called the Gram matrix.

Based on this representation, the authors sum the Euclidean distances between the feature maps of the generated image and the content image to construct the content loss. Next, they sum the Euclidean distances between the Gram matrices of each style layer’s feature maps to compute the style loss. Within both losses, the importance of each layer is weighted according to a set of parameters that can be tuned to achieve better-looking results.

Formally, let $\hat{y}$ be the generated image, and let $\phi_j(x)$ be the feature map of the $j$-th layer for input $x$. Then the content loss can be computed as:

$$ \ell_{\text{content}}^{j}(\hat{y}, c) = || \phi_j(\hat{y}) - \phi_j(c) ||_2^2$$

Let $G_j(x)$ be the Gram matrix of $\phi_j(x)$. Then the style loss can be computed as follows, where $F$ represents the Frobenius norm.

$$ \ell_{\text{style}}^{j} (\hat{y}, s) = || G_j (\hat{y}) - G_j(s) ||^2_F$$

Finally, we can sum over all $L$ layers with weights $\alpha_j, \beta_j$ to obtain the overall loss function:

\begin{equation}

\mathcal{L}_\mathrm{total}(\hat{y}, c, s) = \sum_{j=1}^{L} \left ( \alpha_j \ell_{\text{content}}^{j}(\hat{y},c) + \beta_j \ell_\mathrm{style}^{j}(\hat{y}, s) \right )

\label{loss}

\end{equation}

In words, this means that the loss function $\mathcal{L}_\mathrm{total}$ for the overall network is just a weighted combination of the content loss and style loss. Here, $\alpha_j$ and $\beta_j$ are hyperparameters chosen to weight each layer and control the trade-off between faithfully reconstructing the target content and reconstructing the target style. At each training step, we update the input’s pixels with respect to the loss function, and we perform this update repeatedly until the input converges to the styled image.

Feed-forward style transfer

Solving this optimization problem can take a while for each image we want to produce, since we have to go from random noise to perfectly styled content. In fact, the paper’s original algorithm took about two hours to produce a single image, motivating the need for a faster process. Fortunately, a follow-up paper was released on 2016 by Johnson, Alahi, and Li[3] describing a method for performing style transfer in real-time.

Instead of generating an image from scratch that minimizes the loss function, Johnson et al. take a feed-forward approach, training a neural network to directly apply a style to a certain image. Their model consists of two components — an image transformation network and a loss network. The image transformation network works takes a regular image and outputs the same image styled. However, this new model also uses a pre-trained loss network. The loss network measures the feature reconstruction loss, which is the difference between feature representations (for content), and a style reconstruction loss, which is the difference between styles of images (using the Gram matrix).

In training, Johnson et al. feed the image transformation network a bunch of random images from the Microsoft COCO dataset and produce these images with different styles (like Starry night). The network is trained to optimize the combination of loss functions that come from the loss network. The quality of images generated by this method is around the same as the original paper, and this method achieves an incredible 1060-fold speedup when generating 500 images of size 256x256. It took 50 milliseconds to style each of these images:

In the future, style transfer could be generalized to other mediums, such as music or poetry. For example, a musician could reimagine a pop song, such as "Shape of You" by Ed Sheeran, sounding like it came from jazz. Or one could transform modern slam poetry to a Shakespearean iambic pentameter style. Currently, we don’t have enough data in these fields to train good models, but it’s just a matter of time before we can.

Music Modeling

Generative music modeling is a hard problem, but we’ve come a long way.

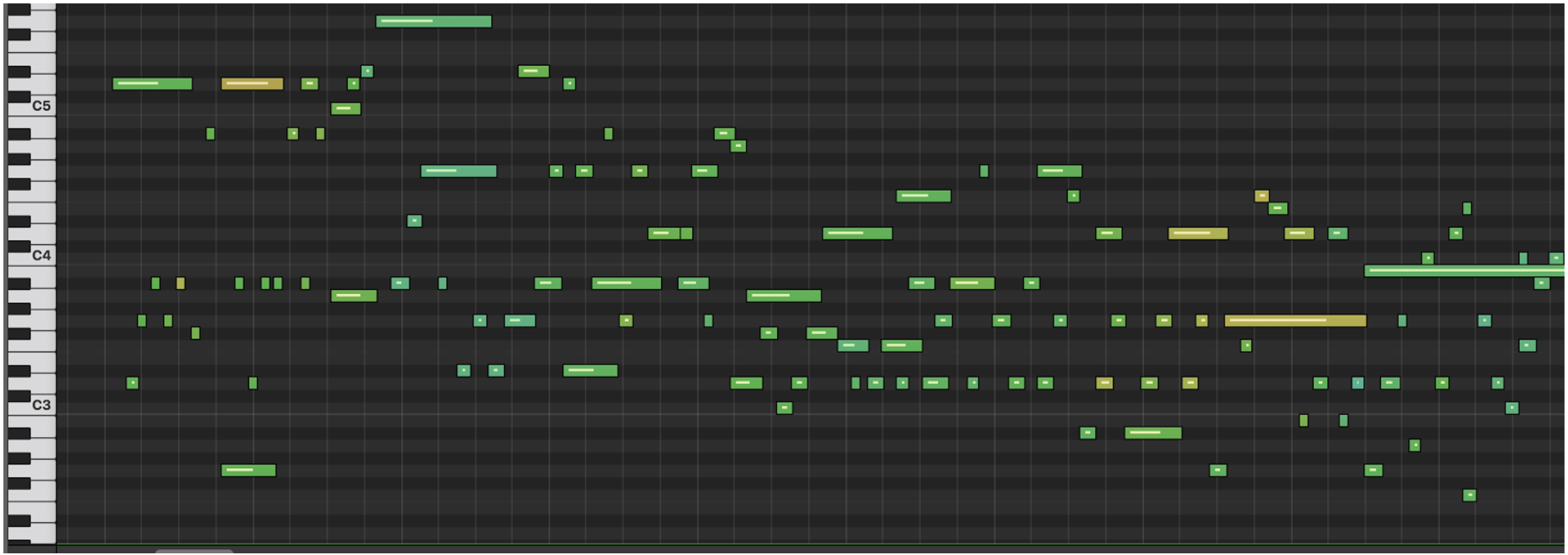

When Google’s open-source AI music project Magenta began, it could only generate simple melodies. By summer 2017, however, it had produced Performance RNN, an LSTM-based recurrent neural network (RNN) that models polyphonic music, complete with timing and dynamics.

Because a song can be viewed as a sequence of notes, music is an ideal use case for RNNs, which are designed to learn sequential patterns. We can train an RNN on a set of songs (that is, series of vectors representing notes), and then sample a melody from the trained RNN. You can check out some demos and pretrained models on the Magenta Github page.

Earlier work with music generation by Magenta and others could generate passable monophonic melodies, or sequences of time steps where at most one note could be "on" at each time step. These models were similar to the language models used to generate text: instead of one-hot vectors representing single words, the model outputs one-hot vectors representing musical notes.

Even one-hot vectors can imply a huge space of possible melodies. If we were to generate a series of $n$ notes — meaning we are generating a note at every time step for $n$ time steps — and we have $k$ possible notes to choose from at each time step, there are $k^n$ valid sequences of vectors.

This can be crazily large, and we’re still constrained to monophonic music, which plays only one note at a time step. Most music we listen to is polyphonic. Polyphonic music consists of multiple notes at the same time step. Imagine a chord, or even multiple instruments playing at once. Now, the number of valid sequences is huge — $2^{k^n}$. This meant that the Google researchers had to use a more sophisticated RNN than the ones commonly used to model text: unlike words, multiple notes can be "on" at each time step.

There’s another issue. If you have ever heard a computer play music — even human-composed music — it can sound robotic. When humans perform music, we vary the tempo (speed) or dynamics (volume), giving our performances emotional depth. To avoid this, researchers had to teach the model to slightly vary the tempo and dynamics. Performance RNN can generate human-sounding interpretations of the pieces it composes by varying their speed, stressing certain notes, and playing louder or softer.

How does one train a model to play music with emotion? There’s actually a dataset that’s perfect for this purpose. The Yamaha e-piano competition dataset includes MIDI data from live performances: each song is recorded as a series of notes, each containing information about velocity (how hard was the note played?) and the time it was played. Thus, in addition to learning which notes to play, Performance RNN uses information from human performances to learn how to play those notes. Samples can be found online here.

These recent developments are the difference between a six-year-old plinking away at a piano with one finger and a virtuoso pianist playing more complex pieces with emotion. Work remains to be done: some samples from Performance RNN still sound “AI-generated,” because they don’t have fixed keys or repeating themes or melodies like traditional songs do. Future research might explore what the model can do for drum samples or other instruments.

But as things stand, these models are developed enough to help people make their own music.

The future of AI-generated art

The intersection of machine learning and art has grown rapidly in the past few years. It’s even been the topic of a course at NYU. The rise of deep learning has had an enormous impact on the field, reviving the promise of representing and learning large forms of unstructured data such as images, music, and text.

We're just now scratching the surface of what's possible in machine-generated art. In the future, we'll likely see machine learning be used as a tool for artists, such as coloring in sketches, "autocompleting" images, generating outlines for poems or novels, and more.

With more computational power, we can train models that generalize over different media like audio, movies, or other complex forms. We’re already seeing models that can generate both audio and lip-sync videos from any new text. Mor et al.’s “musical translation network” can perform a kind of acoustic style transfer between musical instruments and genres (demo here)[4]. And Luan et al. demonstrated photorealistic style transfer[5] suitable for high-resolution photographs. The potential applications of this type of machine-generated media are enormous.

We can debate endlessly whether art generated through AI is truly creative. But maybe we should look at the issue from the other side. Through our attempts to mathematically model human creativity, we’ve begun to reach a deeper understanding of just what makes human art so impressive.

(Cover photo from Johnson and Li.)

Citation

For attribution in academic contexts or books, please cite this work as

Shreya Shankar, "How AI learned to be creative", The Gradient, 2018.

BibTeX citation:

@article{shankar2018creativeai,

author = {Shankar, Shreya}

title = {How AI learned to be creative},

journal = {The Gradient},

year = {2018},

howpublished = {\url{https://thegradient.pub/how-ai-learned-to-be-creative/ } },

}

If you enjoyed this piece and want to hear more, subscribe to the Gradient and follow us on Twitter.

Gatys, Leon A., Alexander S. Ecker, and Matthias Bethge. "A neural algorithm of artistic style." arXiv preprint arXiv:1508.06576 (2015). ↩︎

The authors use a pretrained VGG19 model, but any convolutional neural net should work. ↩︎

Johnson, Justin, Alexandre Alahi, and Li Fei-Fei. "Perceptual losses for real-time style transfer and super-resolution." European Conference on Computer Vision. Springer, Cham, 2016. ↩︎

Mor, Noam, et al. "A Universal Music Translation Network." arXiv preprint arXiv:1805.07848 (2018). ↩︎

Luan, Fujun, et al. "Deep photo style transfer." CoRR, abs/1703.07511 (2017). ↩︎