Zeros and ones. Bits and pieces. Positives and negatives. And above all, switches, some on and others off. We have all grown accustomed to seeing and using a contemporary computer. Each year, industry behemoths like Intel, AMD, ARM, and NVIDIA, release the next generation of their top-of-the-line silicon, locking horns, and pushing the envelope of the traditional computers that we know today.

If we critically evaluate these multitudes of new multi-core CPUs, GPUs, and mammoth compute clusters hosted on the cloud, we will soon realize that faster processors do not necessarily result in increased computational power. Granted, the speed of computation has increased exponentially in the past decades, so has the amount of data we can handle and process. We can store and analyze exabytes of data on the internet, train deep learning models like OpenAI’s GPT-3, and enable the computational intelligence needed to defeat champions and grandmasters at complex games like Go and Chess. But have all these technological advances expanded what we can fundamentally do with computers beyond where we started out with? Or simply put, have we changed our traditional model of computing?

Modern computers operate according to the principle of a von Neumann architecture (Ogban et.al, 2007). von Neumann architectures utilize inputs and outputs to a processing unit which performs logical functions on the inputs based on a set of instructions.

While von Neumann architectures are good for solving problems in a practical manner, they do not describe the process of computation itself. For this, we need a Turing machine (De Mol, 2018). A Turing machine provides an abstract model of today’s computers. Turing machines manipulate symbols on a tape according to a set of rules. The tape then advances or halts based on the current state of the machine. It is a well-known fact that all computations that a traditional computer can perform today can also be performed on a Turing machine. The astute reader will associate this result with the Church-Turing thesis, which states that “any real-world calculation can be done using λ-calculus, which is equivalent to using general recursive functions” (Rabin, 2012). In practice, however, the speed of an actual Turing machine would be too slow for any realistic, reasonably sized problem.

As proved by the Church-Turing thesis, the computability offered by a Turing machine is a glass ceiling that we have not broken through. As we shall discuss later on, although basing our computing devices on Turing machines unlocks computational possibilities, there are also several prohibitive drawbacks intrinsic to this model.

That is not to say, however, that all is lost. Martin Luther King Jr. once said, “We must accept finite disappointment, but never lose infinite hope." Shattering this glass ceiling requires more than just packing a legion of transistors onto computer chips. It requires a radical rethinking of computers from the ground-up; right from their most basic unit – a bit.

Enter the quantum realm

The last 120 years have yielded perhaps the greatest advancements in the history of physics. Einstein’s Special and General Theory of Relativity changed our perceptions of time, space, and gravity, while Dirac, Pauli, Feynman, Schrödinger, Heisenberg, and Planck’s formulation of quantum mechanics fundamentally revolutionized our understanding of the infinitesimal world of electrons, protons, and neutrons.

It is the last of these advances—quantum mechanics—that is most directly relevant to the quest for finding a powerful new model of computation. In the early 1980s, Richard Feynman envisaged that quantum computers could provide a way to solve problems that are exponentially hard for the contemporary (or classical) computer to solve (Feynman, 1986). This is possible as quantum computers require us to adopt a radically different concept of a bit. Before we can delve any deeper into this mode of computation, we must define what we mean by a quantum computer.

Unlike traditional computers, which have bits that can exist in either state 0 or 1 at any given moment, the quantum bit (or qubit for short) in quantum computers can exist in additional states. It can exist as a discrete state (0 or 1) or as a superposition of both states. This is an intrinsic property of the qubit, which assigns a probability distribution to its locality.

Our purpose here is not to provide an explanation of the quantum eccentricities that occur underneath the hood of a quantum computer. Nevertheless, it is worth reviewing two essential concepts of quantum physics: wave-particle duality and entanglement. As we shall see later, these are the foundational pillars of quantum computers.

A quantum mechanical interlude

The state of a qubit can be represented as a column vector. Distinct states are represented by distinct column vectors where each column vector is orthogonal to the rest. The state corresponding to a qubit is given by a linear combination of the possible states, which are weighted by complex numbers. This is the equivalent of saying that at any given moment, the qubit is in a superposition of these basis states, or in a probability wave. The act of measuring an exact position out of all these possible positions collapses this probability wave, or wavefunction, to reveal a single state. This is the crux of wave-particle duality: a particle exhibits both wave-like and particle-like behavior. Until we explicitly observe a particle, we can never say what state it is in; the Copenhagen Interpretation formally rendered an inquiry about a particle’s position before measurement moot (Faye, 2002).

The second important principle to understand is quantum entanglement. Consider a system of particles, with each particle having its own wavefunction. A system of multiple particles is defined as the tensor product of state spaces. This tensor product of k particles, with each particle being represented by an n-dimensional column vector is said to have nᵏ dimensions. This representation of state space is called the assembling of states.

Now, for illustrative purposes, let’s distill our initial system of k particles to just two particles, each in a superposition of two states, a and b (or circle or square for simplicity). We say that the two particles are independent if knowledge about the state of one particle does not reveal information about the state of the second particle.

However, we say that the two particles are entangled if knowing the state of one particle gives us immediate information about the state of the other. How quickly we obtain this information is one of the most puzzling experimentally proven facts: regardless of the distance between an entangled pair of particles, measuring the state of one particle reveals information about the other in an instant. That means if you produce two entangled particles, take them to opposing ends of the solar system, collapsing the wave function of one particle will immediately collapse the wave function of the other. This speed of communication between two particles happens at a speed faster than the speed of light itself, causing Einstein himself to label the peculiarity as “spooky action at a distance.”

Indulging in a deeper instruction of entanglement requires some rigorous mathematical formulation, so we shall refrain from that here. Additionally, despite it transmitting information at a rate faster than light, entanglement does not violate locality. But for further details on that, you may refer to this guide (Wilczek, 2016).

In practice, it is rare to encounter independent particles as interactions between systems lead to entanglement because of the underlying mathematics connecting wave functions to concrete probabilities, which introduces correlation between particles (Joos, 2009). With these concepts—wave-particle duality and quantum entanglement—fresh in our mind, let us see how quantum computers can cleverly exploit these phenomena for computation.

A radically different model of computation

Like a transistor represents 1-bit of information in classical computers, the nuclear spin of a semiconducting material like silicone or phosphorus represents a qubit of information. These semiconducting silicon or phosphorus atoms are manipulated and read using electrical and magnetic fields (Vogel, n.d.) (Physics World, 2019).

As stated before, qubits are the basic unit of a quantum computer. Since qubits can exist in more states than just the traditional 0 and 1 of a bit, we can encode more information using them. In practice, it is possible to encode a classical bit using a qubit, but the converse is not true. You cannot encode a qubit of information in a classical transistor. Using bits, an n-component system can be encoded using n transistors; only 8 stored bits are required to encapsulate an 8-bit classical system. If the n-component system were instead to be quantum, 2ⁿ complex numbers would be required to encode it (Kopczyk, 2018). By extension, encoding an 8-qubit quantum computer requires 256 complex numbers. And to simulate 64 qubits one will need 2⁶⁴=18, 446, 744, 073, 709, 551, 616 complex numbers on a classical machine. Therefore, quantum computation provides a vastly larger space of potential states when compared to a classical computer; while the qubit is a much larger computational object, a smaller number of qubits are needed to represent difficult computational problems. And as it is clear, such representations would be exceedingly hard for a classical computer to emulate.

Like classical gates (e.g. AND, OR, XOR) are the bread and butter of any operation on bits, quantum gates modify the state of a qubit as well with corresponding quantum gates. However, quantum computers have an array of special gates that are specific to qubit operations. Among these are the Hadamard and CNOT gates (Djordjevic, 2012). The Hadamard gate can be used to create superpositions of states (Qiskit/IBM, n.d.) whereas the CNOT gate can be used to entangle qubits (Qiskit/IBM, n.d.).

Through the use of quantum gates, a quantum computation will start at some initial state where it receives an input. From there, it transitions towards a final state, which can then be measured to retrieve specific information.

By cleverly employing the principles of superposition and entanglement, a quantum computer can simultaneously compute results from multiple qubits (Kopczyk, 2018). For example, say our quantum computation includes applying a transformation or function on a set of inputs; we can apply that function on multiple inputs and obtain their results in tandem. On the other hand, the same operation on a classical computer would need to be executed sequentially for each input or done in separate classical circuits. To illustrate, since classical bits aren’t entangled or in a superposition, individual measurements and calculations are needed to extract information from them. In the case of quantum computers, entanglement and superposition allow us to simultaneously calculate information about multiple qubits in a single operation. In essence, this model of computation allows us to explore different pathways and execute mathematical operations in tandem.

This is a key advantage offered by quantum computers. The inherent parallelism is quite potent and leads to what we call ‘exponential computational power’. To double this power, we only need to add one more qubit to the circuit. This discovery led to the development of a new category of algorithms, called quantum algorithms that exploit the parallelism offered by quantum computers to gain exponential speedups over the optimal classical solutions for certain types of problems.

The first tangible demonstration of a quantum computer outperforming a classical computer debuted in 2019. Researchers at Google used Sycamore, a 53-qubit quantum computer, to solve a problem in under 200 seconds. Conversely, this same problem would have taken an existing classical supercomputer approximately 10,000 years to solve. The Sycamore result was officially dubbed as a show of ‘quantum supremacy’, a clear example of the quantum computing paradigm revealing itself to be significantly more powerful than its classical counterpart (Arute & Arya, 2019, 505-510).

While IBM quickly challenged Google’s claims with a follow-up paper of its own (Pednault et al., 2019), Google’s finding (“Quantum supremacy using a programmable superconducting processor”) is generally considered as the breakthrough moment in the development of quantum computers.

The grass isn’t exactly green on this side, either

So far, we have only discussed the positive aspects of quantum computers. But all is not smooth sailing in terms of their implementation and development. As it turns out, suspending qubits in a state of superposition is incredibly hard. To achieve stability, quantum computers need to be kept in refrigerators that cool down the qubits to temperatures just shy of absolute zero (0 K). This means that quantum computers are restricted to niche research domains and expensive laboratories, at least for now.

Additionally, qubits are susceptible to noise (a phenomenon known as decoherence), which means that they lose their probabilistic quantum behavior—and with it their stored information—to an environment of interacting particles. This happens because on a quantum level, no observation or interaction is gentle enough to simultaneously extract information from a system but also preserve its original unperturbed state. Such interactions effectively localize quanta, causing the favorable superposition of states to disappear (Bacciagaluppi, 2020), and are another reason why we have not been able to fully realize the potential of quantum computers (Kopczyk, 2018).

Considering their limitations, we are in what researchers call the Noisy Intermediate-Scale Quantum (NISQ) era of quantum computers. The current generation of quantum computers are not powerful enough to produce fault-tolerant results. Decoherence also threatens the effectiveness of quantum algorithms by disrupting their speedup advantages. It is the reason Shor’s algorithm, which has the potential to destabilize our existing standards of encryption by enabling the prime factorization of massive numbers in polynomial time, remains a theoretical advance.

Most importantly, quantum computers are not the superior choice for every type of computation. They will not be faster at doing elementary operations on two numbers, they will not effortlessly train neural networks, and they will certainly not be running everyday programs faster. Firms like IBM go as far as to claim that quantum computers “will never reign 'supreme’ over classical computers, but will rather work in concert with them, since each have their unique strengths,” (Pednault & Gunnels, 2019).

However, there are certain problems at which quantum computers excel, and it's worth discussing them.

Quantum Machine Learning

Research in recent years has shown that the true potency of quantum computing lies in setting up a pipeline composed of both classical and quantum segments. Consider a scientific application we have to calculate the ground state of a particle. This problem is often important in studying chemical reactions and equilibria. The ground state would be defined as a state where the particle is at its lowest energy level, and therefore, at its most stable state. Traditionally, obtaining the ground state requires calculating the smallest eigenvalue from the eigenvectors of the states of a particle, which are represented by a matrix known as the Hamiltonian. For small systems, classical computers will not break a sweat during the solution, but this simple task grows exponentially for larger systems that have numerous particles and soon overwhelms available computational resources.

However, this increase in the search space becomes tractable if we use a hybrid, quantum machine learning algorithm. The Variational-Quantum-Eigensolver (VQE) uses both classical and quantum algorithms to estimate the lowest eigenvalue of a Hamiltonian. Simply put, its quantum part, known as the ansatz, intelligently searches the space of all possible states of a particle. The classical part tunes the ansatz’ parameters with gradient descent to help it approach the optimal answer. This combination has shown that the quantum computer can be especially helpful in particle simulation tasks of this kind.

Various other algorithms under the umbrella of quantum machine learning have been formulated in the past few years as well. The best-known quantum algorithm for the traditional k-means clustering optimizes the Lloyd classical distance calculation subroutine (Rebollo-Monedero & Girod, 2009) between the vectors to reduce the classical O(NkM) computational complexity exponentially down to O(Mklog(N)), where k is the number of clusters, M is the count of the training examples, and N is the feature count (Biamonte & Wittek, 2017, 195-202).

Researchers have also investigated the power of quantum computers in running neural networks. While a robust formulation of a neural network is still a fair way away in the quantum realm (Schuld & Sinayskiy, 2014), academics have produced varying methods to represent classical neural networks with quantum circuits. One example comes from researchers hailing from ETH Zurich and IBM Q, who compared the dimensionality, optimizability, and trainability of classical neural networks and quantum neural networks (Abbas et al., 2020).

Abbas et.al used the dimensionality of a model to compare the power of different neural networks. Their results showed that a quantum neural network combined with a ‘good’ feature map (to encode the data) had higher effective dimensionality than a classical neural network. Moreover, unlike classical neural networks, which sometimes suffer from slow trainability due to highly degenerate Fisher information matrices, the quantum neural network above offered a more descriptive Fisher information matrix with more uniform, non-zero eigenvalues. This quantum neural network was able to train and converge faster than a classical neural network on the Iris dataset on IBM's 27-qubit machine.

These results demonstrate that a robust quantum neural network with three segments (feature mapping, variational, and measurement) offers advantages like high capacity and fast trainability.

NP-Hard Problems, Searching, and Monte Carlo Simulations

Quantum computers also excel at optimization problems. Optimization problems utilize a particular solution heuristic to find the best-possible solution out of a cohort of valid solutions. To understand how optimization might operate in a quantum computing context, researchers have devised quantum algorithms for some NP-hard problems. One example of this is a quantum algorithm for the Traveling-Salesman-Problem (TSP), which provides a quadratic speedup over the classical brute force method for a large number of cities (Srinivasan et al., 2018).

Other algorithms that exploit a quantum computer’s parallelism have highlighted promising results too. Grover’s algorithm is currently the fastest quantum algorithm to search through an unsorted database with N entries. On a classical computer, this task would require time proportional to N, but the quantum counterpart demonstrates a square-root speedup and gets the job done in O(sqrt(N)) instead. Similarly, quantum computers can perform Fourier transforms over N data points, invert sparse N*N matrices, and find their eigenvalues and eigenvectors in time proportional to a polynomial in log (N). For these tasks, optimal classical algorithms known would take time proportional to N log(N), that is, the quantum computer exhibits an exponential speed up in such cases as well (Biamonte & Wittek, 2017, 195-202).

The finance industry is also priming itself for potential use cases of quantum computers. The task of analyzing stock markets and their associated metrics can be turned into an optimization problem. Considering this, the immediate practical usage of quantum computers could potentially take root in the finance domain. A study by a Spanish Bank, BBVA, which came out in July this year, found that quantum computers could boost credit-scoring, spot arbitrage opportunities, and accelerate Monte Carlo simulations (The Economist, 2020). Likewise, the head of a research unit at JPMorgan Chase & Co., Marco Pistoia, hopes that quantum computers could potentially boost profits by speeding up asset pricing, digging up better-performing portfolios, and improving existing ML algorithms. Even the head of quantum research at Goldman Sachs, William Zeng, made a bold claim that quantum computers could “revolutionize” the banking and finance industries (The Economist, 2020).

Entangled Future

Quantum computers reveal a promising novel approach to computing and problem-solving. Exponential speedups and polynomial-time solutions to intractable problems are natural consequences of the quantum mechanical properties of qubits. This results in a model of computation that is closer to the one abstractly modeled by a quantum Turing machine.

Shifting gears back to our original discussion of Turing machines, a quantum Turing machine is the generalization or quantization of the classical Turing machine, where the head and tape are superposed. Formally, the states of a machine are quantum states in Hilbert space. The tape of a quantum Turing machine is an infinite ‘unilateral tape’ which represents the superposed bits. In this context, a quantum computation is a unitary transformation whose result is determined by quantum measurement, which will reduce the ‘unilateral tape’ in coherent superposition to a classical bilateral tape with separable orthogonal eigenstates (Moschovakis, 2003).

Coupling this model of computation with enabling hardware, the demonstration of quantum supremacy by Google is what many people in the research community believe to be a violation of the extended Church-Turing thesis, which asserts that such a model of computation should be efficiently modeled by a traditional Turing machine. In fact, (Bernstein & Vazirani, 1993) showed that quantum Turing machines are inherently different from traditional Turing machines and can solve certain problems that would require superpolynomial time on their classical counterparts.

Tangible applications in chemistry, finance and optimization problems provide avenues for utilizing quantum computers in real-world settings too. Furthermore, the impressive trainability and dimensionality of quantum neural networks provide exciting new avenues for research in the use of quantum computers for machine learning and deep learning.

Aware of their potency, tech firms like IBM, Intel, Zapata, Amazon, and Honeywell are all investing heavily in developing commercial applications for quantum computers. High-level languages, frameworks, and libraries for programming on quantum computers like Q#, Qiskit, TensorFlow Quantum, and Cirq are steadily gaining traction too. These frameworks and their tutorials have eased the barrier to entry for quantum development and if the growing popularity is anything to go by, we can expect to see a bunch of exciting new developments in quantum computing in this decade.

Despite these developments, we need to critically contemplate the current state of quantum computers. The concerns posed by a qubit’s penchant for decoherence coupled with its exorbitant cryogenic requirements present significant limitations for our existing hardware. Thus, whether quantum computers can truly reign supreme for practical applications might not be the correct question to ask at this point in time. The more pressing inquiry is whether we can overcome the impracticalities of the NISQ era.

For now, this is a tale of David versus the Goliath, except it's too early to call the battle.

Author Bio

Ather is a final-year undergraduate student at FAST University, Lahore. He’s majoring in Computer Science but takes a keen interest in Physics and Mathematics as well. His senior-project pertains to high-fidelity speech synthesis using Generative Adversarial Networks. Ather regularly writes about the latest developments in quantum computing, artificial intelligence, and space travel at Neowin. Outside of work, you’ll find him engrossed in the world of Formula 1 racing and papercraft. You can find him @atherfawaz on Twitter.

Credits

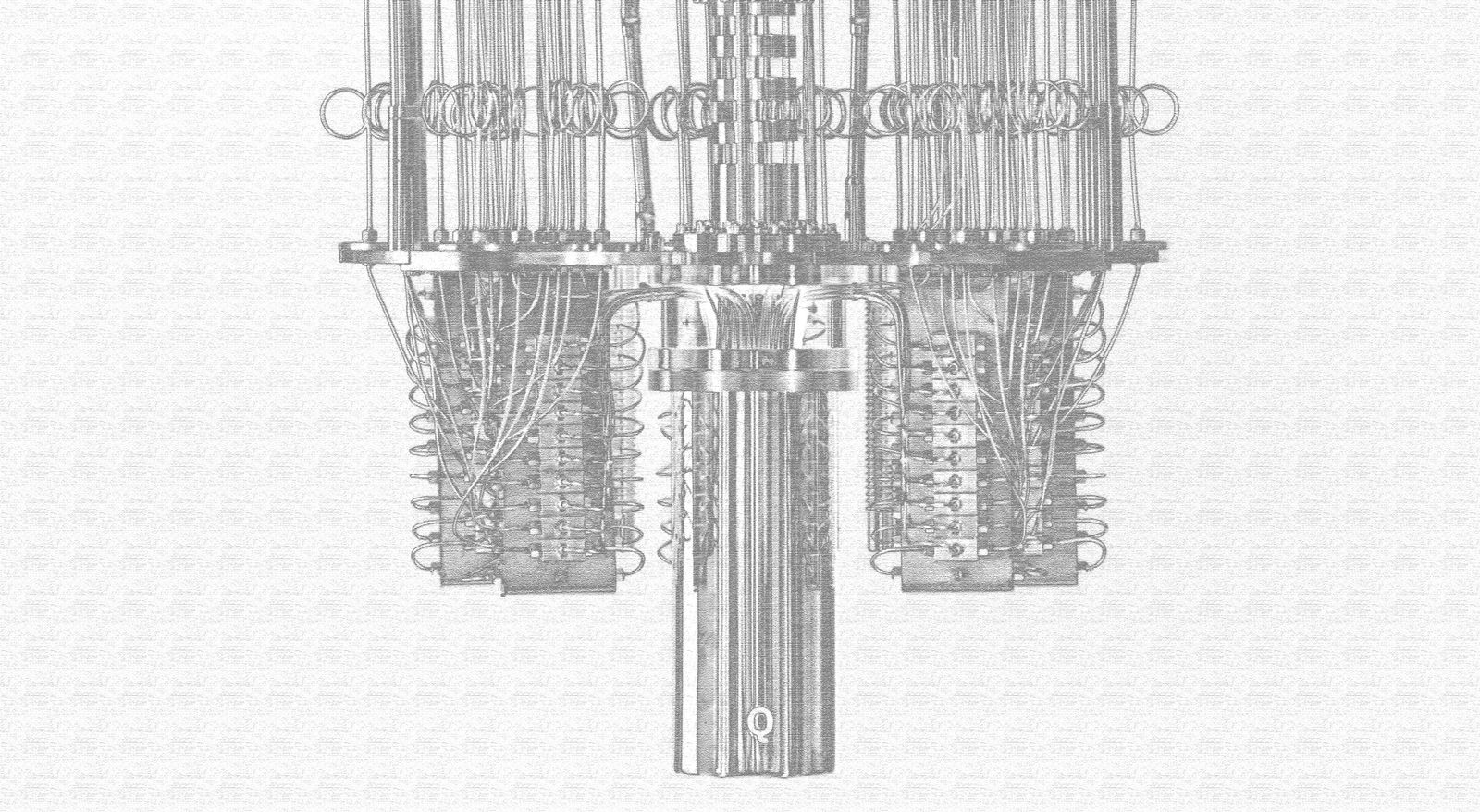

Source for feature image

Acknowledgements

Special thanks to Andrey Kurenkov, Malhar Patel, and Bradly Alicea for their vital insight, comments, and suggestions pre-publication.

Citation

For attribution in academic contexts or books, please cite this work as

Ather Fawaz, "Knocking on Turing’s door: Quantum Computing and Machine Learning", The Gradient, 2021.

BibTeX citation:

@article{fawaz2021quantumml,

author = {Fawaz, Ather},

title = {Knocking on Turing’s door: Quantum Computing and Machine Learning},

journal = {The Gradient},

year = {2021},

howpublished = {\url{https://thegradient.pub/knocking-on-turings-door-quantum-computing-and-machine-learning } },

}

If you enjoyed this piece and want to hear more, subscribe to the Gradient and follow us on Twitter.

Bibliography

Abbas, A., Sutter, D., Zoufal, C., & Lucchi, A. (2020, October 30). The power of quantum neural networks. arXiv. https://arxiv.org/abs/2011.00027

Arute, F., & Arya, K. (2019, October 23). Quantum supremacy using a programmable superconducting processor. Nature, 574, 505-510. https://www.nature.com/articles/s41586-019-1666-5

Bacciagaluppi, G. (2020). The Role of Decoherence in Quantum Mechanics. Stanford Encyclopedia of Philosophy. https://plato.stanford.edu/entries/qm-decoherence/

Bernstein, E., & Vazirani, U. (1993, June). Quantum complexity theory. ACM Digital Library. https://dl.acm.org/doi/10.1145/167088.167097

Biamonte, J., & Wittek, P. (2017, September 14). Quantum machine learning. Nature, 549(2017), 195–202. https://www.nature.com/articles/nature23474

Brandl, M.F. (2017). A Quantum von Neumann Architecture for Large-Scale Quantum Computing. arXiv, 1702.02583v3.

de Mol, L., Bullynck, M., & Daylight, E.G. (2018). Less is more in the Fifties. Encounters between Logical Minimalism and Computer Design during the 1950s. IEEE Annals of the History of Computing, 40(1).

Djordjevic, I. (2012). Quantum Gate. Science Direct. https://www.sciencedirect.com/topics/engineering/quantum-gate

Faye, J. (2002, May 3). Copenhagen Interpretation of Quantum Mechanics. Stanford Encyclopedia of Philosophy. https://plato.stanford.edu/entries/qm-copenhagen/

Feynman, R. P. (1986). Quantum mechanical computers. Foundations of physics, 16(6), 507-531.

Joos, E. (2009). Compendium of Quantum Physics. SpringerLink. https://link.springer.com/chapter/10.1007/978-3-540-70626-7_48

Kopczyk, D. (2018, April 25). Quantum machine learning for data scientists. https://arxiv.org/abs/1804.10068

Mol, L. D. (2018, September 24). Turing Machines. Stanford Encyclopedia of Philosophy. https://plato.stanford.edu/entries/turing-machine/

Moschovakis, Y. N. (2003). Turing Machines. Science Direct. https://www.sciencedirect.com/topics/physics-and-astronomy/turing-machines

Ogban, F.U., Arikpo, I.I., & Eteng, I.E. (2007). Von Neumann Architecture and Modern Computers. Global Journal of Mathematical Sciences, 6(2), 97-99. doi:10.4314/gjmas.v6i2.21415.

Pednault, E., & Gunnels, J. (2019, October 21). On “Quantum Supremacy”. IBM. https://www.ibm.com/blogs/research/2019/10/on-quantum-supremacy/

Pednault, E., Gunnels, J. A., & Nannicini, G. (2019, October 21). Leveraging Secondary Storage to Simulate Deep 54-qubit Sycamore Circuits. arXiv. https://arxiv.org/abs/1910.09534

Physics World. (2019, November 12). Manufacturing silicon qubits at scale. Physics World. https://physicsworld.com/a/manufacturing-silicon-qubits-at-scale/

Qiskit/IBM. (n.d.). Multiple Qubits and Entangled States. Qiskit Documentation. https://qiskit.org/textbook/ch-gates/multiple-qubits-entangled-states.html#cnot

Qiskit/IBM. (n.d.). Single Qubit Gates. Qiskit Documentation. https://qiskit.org/textbook/ch-states/single-qubit-gates.html#hgate

Rabin, M. O. (2012, July 10). Turing, Church, Gödel, Computability, Complexity and Randomization: A Personal View. Web Archive. https://web.archive.org/web/20190605192515/http:/videolectures.net/turing100_rabin_turing_church_goedel/

Rebollo-Monedero, D., & Girod, B. (2009). Lloyd Algorithm. Science Direct. https://www.sciencedirect.com/topics/computer-science/lloyd-algorithm

Schuld, M., & Sinayskiy, I. (2014, October 15). An introduction to quantum machine learning. Taylor Francis Online. https://www.tandfonline.com/doi/abs/10.1080/00107514.2014.964942

Soare, R. I. (2009, January 3). Turing Oracle Machines, Online Computing, and Three Displacements in Computability Theory. UChicago. http://www.people.cs.uchicago.edu/~soare/History/

Srinivasan, K., Satyajit, S., & Behera, B. K. (2018, May 28). Efficient quantum algorithm for solving travelling salesman problem: An IBM quantum experience. arXiv. https://arxiv.org/abs/1805.10928

The Economist. (2020, December 19). Wall Street’s latest shiny new thing: quantum computing. The Economist. https://www.economist.com/finance-and-economics/2020/12/19/wall-streets-latest-shiny-new-thing-quantum-computing

Vogel, B. (n.d.). Qubits – the building blocks of the quantum computer. University of Basel. https://www.unibas.ch/en/Research/Uni-Nova/Uni-Nova-130/Uni-Nova-130-Qubits-the-building-blocks-of-the-quantum-computer.html

Vos, J. (n.d.). All About Hadamard Gates. Manning. https://freecontent.manning.com/all-about-hadamard-gates/

Wilczek, F. (2016, January 5). Your Simple (Yes, Simple) Guide to Quantum Entanglement. Wired. https://www.wired.com/2016/05/simple-yes-simple-guide-quantum-entanglement/