How Stable Diffusion hides behind hype, non-profits, and creative accounting to not pay artists

AI art has got the internet buzzing. The ability to create breathtaking works of art through a prompt is a great way for anyone to express their creativity. However, there is another side to the AI art process, one that is not talked about enough. The artists whose work enables these generators are not compensated in any way. In this article, I will cover why this is the case, the debate around artist compensation in AI art, and some possible solutions to the problem. Through this article, I hope to raise more awareness about this issue, so that you have a better understanding of it. Towards the end, I will leave you with a question: should visual artists be compensated more for their contributions to these diffusion-based models?

Want to learn more about this? Read on. Whether you’re an artist whose work might potentially fuel these works, a developer who might (often unknowingly) use people’s work without permission, or a business bro trying to understand this industry to build the next thing, this discussion is one that you should know about. Hopefully, this can generate or add to the discussion around this issue.

Commercializing non-commercial datasets

Before we broach the larger questions related to artists being compensated for AI art, let’s walk through an often ignored issue: the way companies such as Stability AI obtain the data their products require may be seen as an instance of data laundering.

Data Laundering involves transforming stolen data so that it can be used for legitimate purposes. This can involve many steps and is going to become a bigger problem as the use of data in society increases.

As with other forms of data theft, data harvested from hacked databases is sold on darknet sites. However, instead of selling to identity thieves and fraudsters, data is sold into legitimate competitive intelligence and market research channels. -ZDnet, Cyber-criminals boost sales through ‘data laundering’

In the case of Stability AI and AI art, the process plays out like this:

- Create or fund a non-profit entity to create the datasets for you. The non-profit, research-oriented nature of these entities allows them to use copyrighted material more easily.

- Then use this dataset to create commercial products, without offering any compensation for the use of copyrighted material.

Think I’m making things up? Think back to Stable Diffusion, Stability’s AI text-to-image generator. Who created it? Many people think it was Stability AI, but that is not the case. It was created by the Ludwig Maximilian University of Munich, with a donation from Stability. Look at the GitHub page of Stable Diffusion to see for yourself:

Stable Diffusion is a latent text-to-image diffusion model. Thanks to a generous compute donation from Stability AI and support from LAION, we were able to train a Latent Diffusion Model on 512x512 images from a subset of the LAION-5B database.

So the non-profit created the dataset/model, and the company then worked to monetize it. As noted in AI Data Laundering: How Academic and Nonprofit Researchers Shield Tech Companies from Accountability:

“A federal court could find that the data collection and model training was infringing copyright, but because it was conducted by a university and a nonprofit, falls under fair use.

Meanwhile, a company like Stability AI would be free to commercialize that research in their own DreamStudio product, or however else they choose, taking credit for its success to raise a rumored $100M funding round at a valuation upwards of $1 billion, while shifting any questions around privacy or copyright onto the academic/nonprofit entities they funded.”

To their credit, Stability has started trying to use more licensed datasets since the release of Stable Diffusion v1. Still, this situation merits examination.

To a certain degree, this is normal. Lots of companies fund research in universities and then use those insights for better commercialization. The debate isn’t around what is going on. It’s about where you should draw the line. How far is too far? When should we step in and involve the interests of people who have contributed indirectly? It’s important to at least think about these issues. If you don’t engage in the conversation, someone else will decide the rules for you.

With that out of the way, let’s move on to the overarching debate about AI art and whether it copies artists. This is a sentiment that is thrown around a lot. Is this true, and what are the aspects that we should know about this? Is AI art stealing inspiration from artists?

So, if the datasets of copyrighted materials were instead collected by the company itself for the purpose of making a commercial product, would they then need to compensate the creators of those images or videos? On the one hand, the use of copyrighted materials was essential to building the product. On the other hand, these generative models don’t directly use or store any of those copyrighted materials after they are trained.

If I decided to create a Goku-like character after looking at DBZ, do I owe money to Akira Toriyama? Do all the anime creators pay royalties to their inspirations? No, to both. Should this be any different for large models, which are essentially just sampling a data pool of inspirations to create their outputs?

This question becomes murkier given that art has historically rewarded people that ‘steal’ from others. To quote this BBC article on the subject,

Pablo Picasso (“good artists copy; great artists steal”) could never have painted his breakthrough works of the 1900s without recourse to African sculpture.

Based on how these models work, and the fact that the visual arts have always had a strong proclivity for borrowing inspiration from other works, it would be quite arbitrary to claim that the AI artist is stealing while human artists are not.

That being said, this claim is not entirely false. While the generated artworks themselves might be fine, the commercial use of datasets created for academic purposes is still at the very least questionable. Such laundering of these datasets allows companies such as Stability to monetize their models, without the artists seeing any benefits for their contributions to the datasets these companies rely on to build their product. And monetary compensation aside, these artists are also not given something typically expected of human artists: attribution.

Why AI generated art is not like human art

Let’s go back to our DBZ example. Let’s say I started a business drawing people like DBZ characters. I become moderately successful. I can sustain a good standard of living based on my art. In this case, I’m not paying Akira Toriyama. He doesn’t gain any benefit from my business. So far so good. So why is it a problem if my human-drawn art was replaced by an AI agent?

Simply put, when I use a tool like Stable Diffusion, the artists whose work inspired the output are never credited. This is fundamentally different to what happens with human-created art. Strong inspirations are generally credited, and if not, they can be traced back easily. If you’re a manga reader like me, how many times have you found the work of another author because an author you read mentions or credits them? How many times have you discovered a new comic because of cover art, a meme, or some other transformation applied to an image? I’ve discovered some amazing series just because I saw some cool-looking cover art online, and decided to ask for the source.

Thus when human artists use an underlying piece as inspiration, the creator of the original piece generally benefits from increased exposure. This is a feature missing from Stable Diffusion and other AI generators. They are unable to credit the sources of inspiration that they use to create their amazing pieces. And this hurts the artists whose work allows for these generators in the first place.

This is a problem – what can we do about it?

Possible Solutions

So what can be done to make Stable Diffusion and AI generated art more ethical? We address the underlying issues. The problem is that artists don’t see any benefit from their work being used in these pieces. Here are two ways this can be rectified:

- Credit the artists: The text-image pairs used to train these models have a corresponding embedding generated by the model, and this is also true of new outputs the model is prompted to create after training. So, for every output generated, it is possible to find the training inputs with the most similar embeddings, which can be credited as ‘significant inspirations’. Credit these pieces alongside the output, so that people have the opportunity to look at them (and thus the original artists will see more exposure). Both Clip Front and StableAttribution implement a version of this idea, so this is already possible!

- Pay the artists: This is a much simpler solution – pay every artist whose work is used in the dataset. This way you can use their work without worrying. This would lead to the need for smaller, more curated datasets, which would open up the market for different AI art generators for different niches. But most importantly, this would ensure that artists are more directly compensated for their contributions to the generator. The downside of this solution would be that there would be higher upfront costs of developing these generators, but that is an acceptable tradeoff if the alternative is to not compensate artists in any way.
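The "credit the artists" idea above can be sketched as a nearest-neighbour lookup over embeddings. What follows is a minimal illustration, not how Clip Front or StableAttribution actually work: the function names, the work IDs, and the three-dimensional toy vectors are all hypothetical, and a real system would use embeddings produced by the model (or a model such as CLIP) together with an approximate nearest-neighbour index rather than a full scan. Note also that a high similarity score only suggests a resemblance; it does not prove that a given work influenced the output.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # a zero vector has no direction; treat it as dissimilar
    return dot / (norm_a * norm_b)


def top_inspirations(
    output_embedding: Sequence[float],
    training_embeddings: Dict[str, Sequence[float]],
    k: int = 3,
) -> List[Tuple[str, float]]:
    """Return the k training works most similar to the generated output."""
    scored = [
        (work_id, cosine_similarity(output_embedding, embedding))
        for work_id, embedding in training_embeddings.items()
    ]
    # Highest similarity first.
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical toy data: work IDs mapped to made-up 3-dimensional embeddings.
TRAINING = {
    "artist_a/landscape": [1.0, 0.0, 0.0],
    "artist_b/portrait": [0.0, 1.0, 0.0],
    "artist_c/seascape": [0.9, 0.1, 0.0],
}

# Made-up embedding of a newly generated image.
credits = top_inspirations([0.8, 0.2, 0.0], TRAINING, k=2)
for work_id, score in credits:
    print(f"Significant inspiration: {work_id} (similarity {score:.3f})")
```

The returned list could be shown next to each generated image, giving viewers a path back to the original artists.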

There will be a lot of trial and error needed to implement these solutions in an effective manner. However, that process needs to start somewhere, because the status quo harms the very people who make these generators possible.

What the artists think

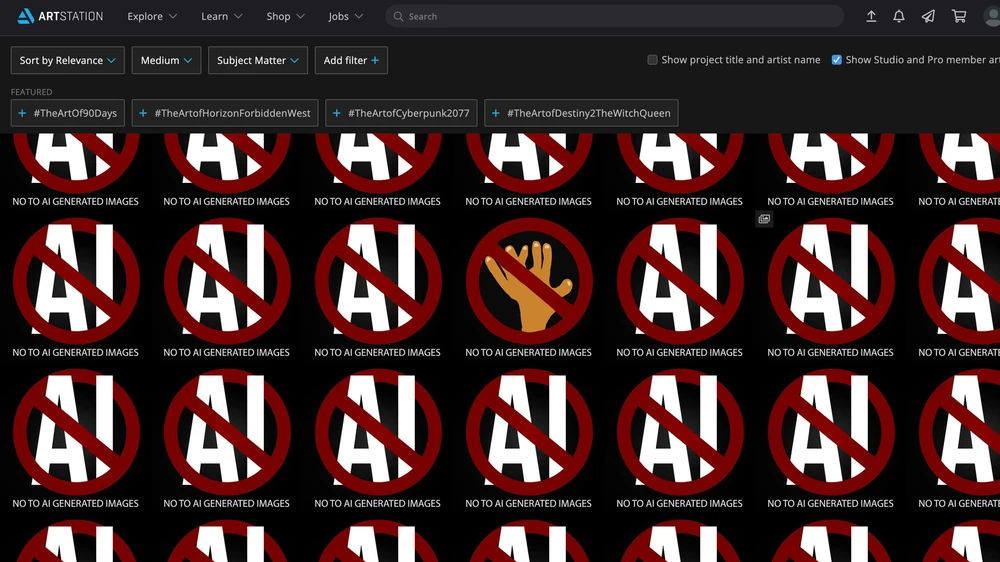

Any discussion around AI Art would be incomplete without mentioning the artist perspective.

Artists have had a mixed response to AI art. There is some fear around AI replacing artists, which has been fed by a lot of the marketing around these tools.

Artist and designer Sebastian Errazuriz has been exploring the potentials of AI in the creative field for years. In a recent video on instagram, he gives us all his insights into which artists he predicts to be the first to be ‘replaced’ by artificial intelligence. Replying to comments, he writes: ‘When I see something clearly that seems could be a threat to many I feel compelled to sound alarms and help others who might not be aware.’ -illustrators are the first to go: sebastian errazuriz warns artificial intelligence to replace artists

Several artists have raised alarm bells about the practices that have led to the creation of these massive art projects (the ones we discussed earlier). One of the most common sentiments is resentment, since many artists have pointed to the double standard between how datasets were created for visual art and how they were created for music. This video is one such example:

Finally, a lot of artists are confident that AI art will not fundamentally take away their work. They point to the importance of prompts in creating AI art images. These artists believe that their superior knowledge allows them to write more detailed prompts and ultimately create higher-quality images. This video goes over this argument in more detail:

This variance in emotion makes sense. AI art is a new technology, and one that caught everyone by surprise. There are many factors to it, all of which complicate the issue. Only time will tell which of these fears was most valid, and which concerns everyone overlooked.

Hopefully, this will give you more perspective on this issue. Such conversations are important in helping us create more responsible solutions for the future. Having such conversations with a diverse crowd is key to ensuring that the solutions work to help all parties, instead of pulling one down to help another.

Citation

For attribution of this in academic contexts or books, please cite this work as:

Devansh Lnu, "Artists enable AI art - shouldn't they be compensated?", The Gradient, 2023.

BibTeX citation:

@article{Lnu2023aiart,
  author = {Lnu, Devansh},
  title = {Artists enable AI art - shouldn't they be compensated?},
  journal = {The Gradient},
  year = {2023},
  howpublished = {\url{https://thegradient.pub/should-stability-ai-pay-artists}},
}