We need strong artificial intelligence (AI) so it can help us understand the nature of the universe to satiate our curiosity, devise cures for diseases to ease our suffering, and expand to other star systems to ensure our survival. To do all this, AI must be able to learn representations of the environment in the form of models that enable it to recognize entities, infer missing information, and predict events. And because the universe is of almost infinite complexity, AI must be able to compose these models dynamically to generate combinatorial representations to match that complexity.

The models that AI builds must encode the fundamental patterns of experience, and they must be rich and deep so that the AI can access and reason over the causes of its inputs. This means that AI needs to understand the world at the level of a human child before it can progress higher. To get this initial understanding, we need to train AI in simulated environments like our own, and the AI needs to be guided to pay attention to the aspects of those environments that are useful for learning. To measure learning progress, we need to access the internal processes of the AI models, because there are lots of ways for AI to appear intelligent without being able to generalize. Once AI has this foundation of models and the ability to dynamically combine them to make more models, it can make the necessary inferences and forward projections to help humanity.

Why models are needed for strong AI

Models are needed for strong AI because they enable intelligent behavior in novel situations. There’s a famous old story about the Sphex wasp [1]. The wasp stuns crickets and puts them into its nest to feed its young. Before bringing a cricket into its nest, it first leaves the cricket at the edge of the nest and goes inside to check the nest. Once the check is complete, it comes back out and brings the cricket inside. But if a meddling experimenter moves the cricket away from the edge while the wasp is inside checking the nest, the wasp blindly repeats the loop: it brings the cricket back to the edge and then goes inside to check the nest again. This loop has been observed to repeat up to 40 times. The wasp has a rote plan that appears intelligent but is surprisingly brittle. A model of the situation would look something like “if the nest is not blocked, the cricket can be brought in,” and this model would allow the wasp to infer that the nest doesn’t need to be checked again.

Models are more than recipes for what to do; they are encoded pieces of the world, such as “a roof blocks the path of rain to keep it dry underneath.” Models provide scaffolding over which to optimize behavior. Current AI is like the Sphex wasp: it lacks higher-level models and is therefore brittle and unable to adapt to novel situations [2]. We researchers have been building low-level models, like models that predict the next word in a news story given the previous words, but we need to focus on building higher-level models based on experience in the physical world.

Beyond enabling intelligent action, higher-level models are required for language because efficient communication requires inferences. Inferences are necessary because talking is slow, so for efficiency we say only the minimum needed to be understood. Each speaker has a model of the listener that includes models of the listener’s models of the speaker. We can then speak the words that we estimate will trigger the correct inferences in the head of the listener [3]. Imagine you turn to someone and say, “That tree made it.” Without models to interpret context, your statement could mean anything: “Made what? A sandwich?” But with models that let the listener infer that the recent, historically cold weather could have killed the tree, the listener easily understands that you mean the tree survived. Models make it possible to guess omitted information because they provide a measure of how well a set of facts fits together.
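
Goodman and Frank [3] describe this kind of recursive reasoning as probabilistic inference in the Rational Speech Acts framework. The Python sketch below is a minimal, hypothetical illustration of that idea, not their implementation. The three objects, the one-word utterances, the uniform prior, and the absence of utterance costs are all assumptions chosen for brevity. A literal listener interprets words by their meaning alone, a speaker picks words that would lead that literal listener to the intended object, and a pragmatic listener reasons about which object such a speaker most likely had in mind.

```python
from fractions import Fraction

# Toy reference game (hypothetical): three objects, four one-word utterances.
OBJECTS = ["blue square", "blue circle", "green square"]
UTTERANCES = ["blue", "green", "square", "circle"]


def is_true(utterance, obj):
    """Literal meaning: a word is true of an object if it appears in the object's description."""
    return utterance in obj.split()


def normalize(weights):
    """Rescale non-negative weights so they sum to 1."""
    total = sum(weights.values())
    return {key: value / total for key, value in weights.items()}


def literal_listener(utterance):
    """L0: uniform over the objects the utterance is literally true of."""
    return normalize({o: Fraction(int(is_true(utterance, o))) for o in OBJECTS})


def speaker(obj):
    """S1: prefers utterances that would lead L0 to obj (rationality 1, no utterance costs)."""
    return normalize({u: literal_listener(u)[obj] for u in UTTERANCES})


def pragmatic_listener(utterance):
    """L1: reasons about which object S1 most likely meant, under a uniform prior."""
    return normalize({o: speaker(o)[utterance] for o in OBJECTS})


for obj, prob in pragmatic_listener("blue").items():
    print(f"{obj}: {prob}")
```

Hearing “blue,” the pragmatic listener favors the blue square (3/5 versus 2/5 for the blue circle), because a speaker who meant the blue circle could have said the more informative “circle.” The listener recovers information the speaker never stated by modeling the speaker.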

Models are also needed for efficient learning because they tell the learner what to focus on. The world is more complex than it seems, and our brain feeds the conscious mind only the parts of the world that it deems relevant for the task at hand. An AI can achieve this focus of attention with models. Each model can focus on a subset of the world, allowing the learner to ignore irrelevant variables. This focus makes the state space smaller, which improves learning [4]. Attending only to the relevant variables also reduces the amount of experience a learner needs, because it can rely on causal explanations rather than statistical reasoning [5, 6].
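
As a rough, hypothetical illustration of how much focus can shrink the problem, suppose the world is described by n binary variables and a model attends only to the k of them that matter for the task. The values n = 30 and k = 5 are assumptions chosen for the arithmetic:

```latex
|S_{\text{world}}| = 2^{n} = 2^{30} = 1{,}073{,}741{,}824
\qquad\text{vs.}\qquad
|S_{\text{model}}| = 2^{k} = 2^{5} = 32
```

Under these assumptions the focused model's state space is 2^{25}, or about 33.5 million times, smaller, so a learner that needs experience in each state needs far less of it.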

Models allow us to learn from the distant past. To learn from the past, we have to store it and retrieve it at the right time, and models allow us to condense and tag experience. As humans, our episodic memories (the memories of the moments in our lives) only contain the important events. If we need other details not stored, our brains make up the details based on our internal models [7]. Of course, this makes us terrible eyewitnesses, but by storing only the information that can’t be regenerated with models, we can remember back over decades. In addition, by combining models with language, knowledge that one learner gains can be shared with many others across centuries. And if an external storage medium is used, knowledge can be shared between learners even when there is no chain of interpersonal connections.

Finally, models allow us to simulate forward. If we know that plants need water, and we know it hasn’t rained in a while, we can infer that if we bring water to the plant, it will grow. Models can be wrong (one could believe that plants crave sports drinks), but models are what allow for imagination, and experimentation guided by imagination is what enables invention.

Why models must be learned and dynamically composed

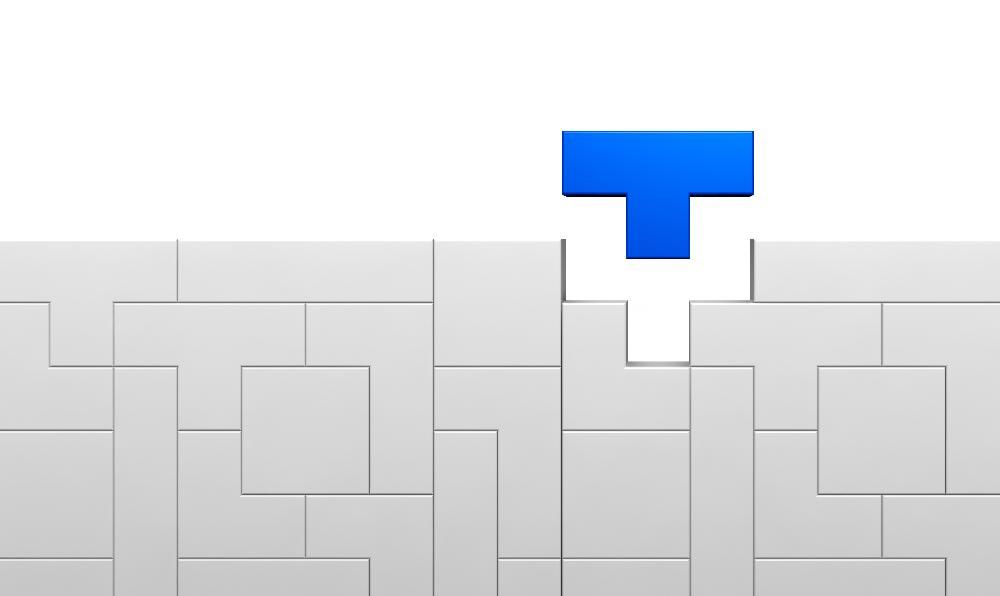

The models that AI will use to reason, act, and communicate must be learned. Decades of trying have demonstrated that intelligent behavior requires more models than we can program in. Even if we could program that many models, we wouldn’t know which ones to build because we can’t anticipate all of the situations an AI will need to address. The set of possible situations is effectively infinite because situations are composed of combinations of an almost infinite set of possible pieces. The only way to match that complexity is to be able to dynamically compose pieces to fit the situation—we need the ability to combinatorially choose model pieces to match the combinatorial complexity of the environment.

We humans use this combinatorial power to generate new ideas to solve problems. We blend existing models into new ones to dynamically understand situations and create new solutions. One can imagine a distant grandmother of ours, with some 4,000 “greats” in front of her title, thinking, “I need to hit that thing hard. What if I combined a rock with a stick by attaching the rock to the end of the stick?”

In addition to generating new ideas, we humans use combinations to learn from others. In school, teachers can’t put new ideas directly into kids’ heads. The best they can do is hint at them sufficiently so the students can construct the new ideas themselves [8]. The children must take the final step because each new idea has to fit within their own internal representations, which makes learning a process of generation rather than recognition. Likewise, an AI can see a combination of models and save that combination as a useful solution for a particular task, such as attaching a rock to the end of a stick. Without the ability to encode what it is seeing, inputs would just be noise. The attachment of the rock to the end of the stick is what is relevant, where the model of the stick and the model of the rock combine to create a new model. The color of the stick doesn’t matter, nor does the weather.

This need for dynamic blending is particularly salient in communication [9]. A football game announcer might say, “That running back is a truck.” The viewers then at some level put that truck into a simulated football game in which they are the defense and recognize that it would be hard to tackle [10]. This dynamic blending is also discussed as analogy and metaphor [11-13].

The current inability of AI to create dynamic models hampers communication between AI and humans because we can’t coordinate inner worlds and we can’t coordinate meaning in conversation [14]. Imagine you are trying to set up a serverless API using a cloud provider. The authors of the instructions don’t know what you know, so they have to guess what your level of expertise might be. The first instruction might be to ensure that the “chickenhominid” is properly configured. If you don’t know what a “chickenhominid” is, you are stuck and forced to hunt around on the web.

Coordination of inner worlds is required when two speakers have different words for concepts they both share. If the cloud instruction AI could coordinate inner worlds with you, you could both mutually discover that what it calls a “chickenhominid” you call a “turkeysapien.” Coordination of meaning is required when one participant in a conversation is missing a concept needed for understanding. If you weren’t familiar with “chickenhominids” or “turkeysapiens,” the AI could first determine whether you were familiar with birds and humans, and if so it could explain what a “chickenhominid” is in those terms. Not only would this ability enable you to set up your API, it would be the ultimate tutor. One of the hardest parts of learning is finding a resource that begins with your level of knowledge so that it explains new concepts in terms of concepts you already know. And of course being able to transfer new knowledge to the AI with coordination between inner worlds and meaning would allow AI assistants to better customize to their users.

Related to the need for coordinating inner worlds and meaning, the combinatorial complexity of the environment means there can be no single ontology with which we endow our AI. An ontology is a catalog and organization of everything that we consider to exist in the world. For example, gasoline is a fuel, which is a substance, which is a self-connected object, which is an object, which is something physical, which is an entity [15]. But gasoline is also a resource, a cause of climate change, a taxable item, and on and on. Each purpose we have will require gasoline to be viewed in a different way, and we can’t anticipate all of these needs ahead of time. An ontology is a useful way to organize knowledge, but our AIs will need to be able to create custom ontologies for each emerging need.

You probably recall Searle’s Chinese room [16]. To review, imagine you are in a room and you don’t know any Chinese. You receive a piece of paper with Chinese characters written on it, and you consult a big set of books that tell you what strokes to write in response to the strokes on the paper. You have no idea what you are doing, but when you pass the paper back with your strokes, it looks like a reasonable response to a Chinese speaker. This is analogous to what computers and the Sphex wasp do, and Searle argued that it means they understand nothing. Just as there can be no single ontology to match all of the possible combinations of things that may exist in every future situation, all of the possible things an AI must know and do can’t be written down in some large set of programs. AI needs to combine models to match the current situation: it must dynamically write the books in the Chinese room.

The current state of AI research on learning and composing models

Humans are hypothesized to use a language of thought (LOT) to compose concepts to understand the environment, creating complex thoughts by combining simpler concepts that eventually ground out in primitives [17]. Analogously, strong AI requires a system that can autonomously build and compose models. Models do three things. First, models enable one to identify entities in the environment because they represent the entities’ nature. A model may represent the features of entities with probability distributions over feature values, such as apples usually being red or green. Another example is the distribution of items found in a kitchen. Second, models enable one to guess missing information because they provide a measure of how well a set of facts fits together. A model tells you that if your friend says he saw a mouse under the refrigerator, the mouse was in the kitchen. And third, models enable one to imagine and predict the future because they encode forward transitions. For example, a model may specify the probability that a dog will chase a cat or that a tree will lose its leaves in the fall.
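
To make these three roles concrete, here is a deliberately tiny Python sketch. The entities, rooms, situations, and every probability in it are illustrative assumptions, not data, and the dictionary layout is hypothetical; a real model would learn such distributions from experience rather than have them typed in.

```python
# Hypothetical toy model; every number below is illustrative, not measured.
COLOR_GIVEN_ENTITY = {                      # feature distributions, for identification
    "apple": {"red": 0.5, "green": 0.4, "yellow": 0.1},
    "banana": {"green": 0.2, "yellow": 0.8},
}
ROOM_GIVEN_SPOT = {                         # co-occurrence knowledge, for filling in gaps
    "under the refrigerator": {"kitchen": 0.95, "garage": 0.05},
}
NEXT_EVENT = {                              # forward transitions, for prediction
    "tree in autumn": {"loses leaves": 0.9, "keeps leaves": 0.1},
    "dog sees cat": {"chases cat": 0.7, "ignores cat": 0.3},
}


def identify(color):
    """Recognize: posterior over entities given an observed color (Bayes' rule, uniform prior)."""
    joint = {e: dist.get(color, 0.0) for e, dist in COLOR_GIVEN_ENTITY.items()}
    total = sum(joint.values())
    if total == 0:
        raise ValueError(f"no known entity has color {color!r}")
    return {e: p / total for e, p in joint.items()}


def fill_in(spot):
    """Infer missing information: the most probable room for a reported spot."""
    rooms = ROOM_GIVEN_SPOT[spot]
    return max(rooms, key=rooms.get)


def predict(situation):
    """Project forward: the distribution over what happens next."""
    return NEXT_EVENT[situation]


print(identify("green"))                    # apple about 0.67, banana about 0.33
print(fill_in("under the refrigerator"))    # kitchen
print(predict("dog sees cat"))
```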

We can divide current models in AI into two classes: neural-network models and representation-based models. Researchers often divide models into subsymbolic and symbolic models, but this work focuses on model composability, so dividing models into neural-network models and representation-based models is helpful, as we will see.

Neural-network models are great because we can use the derivative of their error on training examples to directly improve the model. This is why we can make them so powerful, and it is also why neural-network models can process the world at the lowest level, such as pixels from a camera. The problem is that we are currently asking neural networks to do too much, especially in the area of natural language processing. Words are not pixels; they are the minimal hints that a listener needs for understanding in a particular context. Pixels come from dumb sensors, but as we have seen, words come from people with rich models of both the world and likely listeners. If you ask where the tamales are, the answer “the fridge” is all that is given and all you need, even though there are a lot of refrigerators that could conceivably contain them. Because they lack this context, giant neural networks processing large amounts of text can only get a flavor of what is said. Even when reading carefully written news articles that follow the generally established rules of syntax, computers don’t have the context of lived lives and can therefore only partially understand them. That is one reason why the text responses of large neural networks are plausible-sounding but not always exactly correct.

To handle natural language, neural networks will need to process the pieces of what is being said and recognize what they point to. They will need to make a sequence of semantic decisions before deciding how to respond to queries. Instead of running a chain of computations to produce each word in sequence, they will first need to decide what to say, and then decide how to say it, and then they can produce the words of the response. Such an approach would allow neural networks to be more exact because they would be making more modular decisions.

We are also asking neural networks to do too much when we train robots and agents with deep reinforcement learning. At its simplest, reinforcement learning is built on the idea of exploring randomly while hoping that something good happens. Modern deep reinforcement learning uses neural networks to process sensory input to decide how the learner should behave. Even in this case, the agent must still string together long sequences of low-level actions to accomplish anything useful. In general, reinforcement learning often requires a huge amount of experience and computation to train. Even then, the trained agent only knows how to do particular tasks under specific conditions [18]. To learn effectively, an AI agent needs to think like a scientist or a child [19]. That is, the AI agent needs to compose higher-level models to enable it to plan and execute experiments.
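
The cost of stringing together low-level actions by random exploration can be seen in a toy setting. The sketch below assumes a hypothetical one-dimensional chain with a single reward at the far end and an agent that simply moves left or right at random; it is an illustration of the exploration problem, not a reinforcement learning algorithm. For this particular walk the expected number of steps before the first reward is N(N + 1) for a chain of length N, so the experience required grows roughly quadratically as the goal gets further away.

```python
import random


def steps_until_reward(chain_length, rng):
    """Random exploration on a 1-D chain with a single reward at the far end.

    The agent starts at position 0 and picks left or right uniformly at random;
    moving left at 0 leaves it at 0. Returns how many low-level actions it took
    before first reaching position chain_length.
    """
    position, steps = 0, 0
    while position < chain_length:
        position = max(0, position + rng.choice((-1, 1)))
        steps += 1
    return steps


def average_steps(chain_length, trials=500, seed=0):
    """Average the episode length over many independent random explorers."""
    rng = random.Random(seed)
    return sum(steps_until_reward(chain_length, rng) for _ in range(trials)) / trials


for n in (5, 10, 20):
    print(f"chain length {n:2d}: about {average_steps(n):.0f} steps (theory: {n * (n + 1)})")
```

A learner with a higher-level model (for example, "the reward is to the right") would need only N steps, which is the gap the paragraph above points to.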

In contrast to neural networks, which can operate on low-level inputs, representation-based models require higher-level inputs that already have some meaning. The simplest representation-based model is a linear equation, such as 3x + 4 = y. Linear equations are powerful and simple. If you know the value of x, you can infer the value of y. But where do x and y come from? That’s the representation. They stand for things, and a lot of cognitive work, usually by a human, has already been done to identify and distill them down into the variables x and y.

The field of logic extends linear models from numerical values to the domain of true and false. You can infer propositions given other statements, such as inferring that leaves will fall from a tree if it is autumn or that the oven is hot given that you turned the knob. Graphical models, such as Bayesian networks, add probabilities to these statements, which allow you to model unknown causes that might keep leaves from falling or the oven from getting hot using probability distributions. Probabilistic programming expands on graphical models by not requiring a fixed set of variables [20]. Probabilistic programming also encompasses linear models by computing probability distributions over numerical values.
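
A minimal Bayesian network makes the role of the probabilities concrete. The sketch below is a hypothetical two-node network, Autumn → LeavesFall, with illustrative numbers chosen as assumptions: the 1/10 chance that leaves stay on in autumn stands in for unmodeled causes, and the 1/20 chance that they fall in other seasons stands in for storms or drought. Exact inference by enumeration then runs the model backward, from seeing leaves fall to the probability that it is autumn.

```python
from fractions import Fraction

# Hypothetical two-node Bayesian network: Autumn -> LeavesFall.
# The numbers are illustrative assumptions, not measured values.
P_AUTUMN = Fraction(1, 4)
P_FALL_GIVEN = {
    True: Fraction(9, 10),   # autumn, but unmodeled causes may keep leaves on
    False: Fraction(1, 20),  # not autumn, but storms or drought may knock leaves off
}


def joint(autumn, leaves_fall):
    """P(Autumn = autumn, LeavesFall = leaves_fall) from the network's factors."""
    p_a = P_AUTUMN if autumn else 1 - P_AUTUMN
    p_f = P_FALL_GIVEN[autumn] if leaves_fall else 1 - P_FALL_GIVEN[autumn]
    return p_a * p_f


def p_autumn_given(leaves_fall):
    """Exact inference by enumerating the hidden variable."""
    numerator = joint(True, leaves_fall)
    return numerator / (numerator + joint(False, leaves_fall))


print(p_autumn_given(True))   # 6/7
print(p_autumn_given(False))  # 2/59
```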

Representation-based models naturally compose if the representations align. So if you have a model for x = 15 + z, it is easy to compose that model with 3x + 4 = y. Our representation-based models are becoming more flexible, probabilistic programming being one example, but we need to make our models richer and deeper. Richer models scale out horizontally to include more context. When the leaves fall, what happens to them? Do they accrue on the ground? Do they stay there forever? Why would a human rake them off the grass? By contrast, deeper models contain long chains of causal structure. Why do leaves fall in autumn? Why does it get cold? If it doesn’t get cold one year, what will the leaves do? Why is cold related to leaves? Like a child, Why? Why? Why?
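
Written out, the composition substitutes the first model into the second through their shared variable x:

```latex
x = 15 + z,\qquad y = 3x + 4
\;\Longrightarrow\;
y = 3(15 + z) + 4 = 3z + 49
```

For example, z = 2 gives x = 17 and y = 55, and the composed model can also be run backward as z = (y - 49)/3. The composition is easy only because both models use the same x; if one model's x meant something else, the substitution would be meaningless.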

To build these representation-based models, we could directly try to encode patterns of experience, sometimes called schemas [21], scripts [22], or frames [23]. For example, children’s birthday parties follow a pattern where there are presents, entertainment, and a source of sugar to serve as fuel. The difficulty is ensuring that these patterns are sufficiently fundamental and composable. The piñata may fall to the ground, but lots of things fall. Falling means an object keeps going until it hits something. If it stays on that something, we say the something supports the once-falling object: the support keeps it in place and blocks it from going further. So a roof blocks rain, which is why it doesn’t rain inside, but does the roof support the rain? Not really; the rain slides off the side. Or consider when one object contains another. If you move the piñata with candy in it, the candy also moves. You move the piñata by applying force to it, and of course it is the force of gravity that causes the piñata to fall when its attachment to the rope breaks.
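
The containment and support patterns can be sketched as small, reusable rules that apply to any object rather than to piñatas in particular. The Python below is a hypothetical illustration: the `Thing` class, the integer coordinates, and the convention that support heights list the tops of surfaces directly beneath an object (with the ground at 0) are all assumptions made to keep the example short.

```python
from dataclasses import dataclass, field


@dataclass
class Thing:
    name: str
    x: int
    height: int
    contents: list = field(default_factory=list)   # things inside this one


def move(thing, dx=0, dy=0):
    """Containment: moving a container moves everything it contains."""
    thing.x += dx
    thing.height += dy
    for inner in thing.contents:
        move(inner, dx, dy)


def fall(thing, support_heights):
    """Falling and support: drop until the highest support at or below the thing.

    support_heights lists the tops of surfaces directly beneath the thing
    (the ground is assumed to be at height 0).
    """
    landing = max([h for h in support_heights if h <= thing.height], default=0)
    move(thing, dy=landing - thing.height)


candy = Thing("candy", x=0, height=3)
pinata = Thing("piñata", x=0, height=3, contents=[candy])

move(pinata, dx=2)            # carry the piñata; the candy comes along
fall(pinata, [0, 1])          # the rope breaks; a table top at height 1 supports it
print(candy.x, candy.height)  # 2 1
```

Because `fall` is built on `move`, the candy lands on the table too without any rule about candy: the containment pattern composes with the support pattern.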

These more fundamental patterns are sometimes called image schemas [24]. The developmental psychologist Jean Mandler says that image schemas make up the foundation of human thought [25]. She also claims that they are not symbolic, which may be why AI researchers have had such a hard time coding them in. And image schemas aren’t just for concrete things: we understand abstract things by using metaphors to the concrete [26,27]. Your favorite sports team can fall in the rankings after a loss, or a broken air conditioner can force you to move your meeting.

Fundamental understanding begins with a learner acting in the world. You understand the concept of blocking when you yourself can’t get into your cave because of fallen rocks. Acting in the world is how image schemas derive meaning. The theory of embodiment describes meaning as coming from the linkage of the internal state of the organism to outside events that relate to its survival [28]. For simple organisms, these internal states and linkages can be relatively straightforward, such as a frog reacting to small dark spots indicating possible flies [29]. The meaning of the dark spots is grounded in the sensory apparatus of the frog and derived from those spots leading to the frog’s survival when it flings its tongue in their direction. This kind of grounding is necessary but not sufficient for strong AI. Grounding is necessary because a sophisticated learner needs to verify its models and refine incorrect ones [28]. If a model is wrong, the agent needs to be able to trace it back to the sensory states it was built on. Grounding is not sufficient, however, because the learner must also be able to construct models on top of those grounded sensory states for effective action and communication, as we saw previously.

The analog of image schemas learned by strong AI must be grounded by linking to meaningful sensory states; however, it is currently unclear whether these image schemas will come from neural networks or whether we researchers will figure out how to learn and compose them in something like probabilistic programming. There has been well-publicized progress [31] with neural networks, and some great work [32,33] on learning models, but a gap remains: neural networks can process perceptual input, and representation-based models can process meaningful input. This gap largely corresponds to the one pointed out by Harnad, known as the symbol grounding problem, where he argues that we must tie pixel inputs to symbol outputs for a machine to have meaning [34]. We saw previously that the theory of embodiment describes meaning as coming from the correlation of internal states to external stimuli, so meaning in itself doesn’t seem to be a difficult problem. The difficult problem is acquiring useful meaning in the form of many [35] deep and rich composable models, and image schemas seem to be at the core of this gap.

How to move AI research forward to better learn and compose models

We need an AI that can autonomously learn rich and deep models and can compose them to represent any given situation. The solution will almost certainly involve some form of neural network, because AI needs the ability to process the world at the “pixel level” [36] so that its models can trace each representation down to the sensor input. To make neural networks as flexible as representation-based models, and to let the two interface better, we will need to make neural networks more modular, with reusable representations.

To make neural networks more flexible, there is work on how they can learn to pay attention to relevant parts of the input space [37], and there has been work on learning reusable discrete representations of experience and on being able to encode that experience as combinations of those representations [38]. And there has also been work on using explicit memory in neural networks and accessing the relevant parts of the memory when needed [39]. This is in the right direction toward systems that can learn and manipulate a version of image schemas with neural networks. Image schemas presumably evolved as patterns of computation that were first helpful for one kind of problem and then could be reused by nature to solve other problems, building up in this way until they formed the foundation of our current complex and abstract thought. We can strive to learn composable neural networks with these same properties, with some parts of the networks specializing in different fundamental representations that are reused and combined for many situations. Neural networks process “pixels,” but we haven’t figured out how to make them sufficiently composable and exact, so we should expand representation-based models in parallel. Probabilistic programs are arguably our most flexible current modeling method, and we need to focus on learning [40] rich and deep models with them, ideally all the way down to image schemas. This learning will require algorithms that efficiently search through the space of possible models to find those that most concisely [41] explain the AI’s experience.
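
One common way to obtain reusable discrete representations is vector quantization, in which each continuous feature vector is replaced by the nearest entry in a shared codebook. The sketch below is a swapped-in illustration of that general technique and may differ from the method in [38]; the codebook entries and their names are hypothetical and fixed by hand, whereas a learned system would train them.

```python
import math

# Hypothetical codebook of reusable discrete parts. In a learned system these
# vectors would be trained; here they are fixed by hand for illustration.
CODEBOOK = {
    "edge": (1.0, 0.0),
    "corner": (0.7, 0.7),
    "blob": (0.0, 1.0),
}


def nearest_code(vector):
    """Vector quantization: snap a continuous feature vector to its closest code."""
    return min(CODEBOOK, key=lambda name: math.dist(vector, CODEBOOK[name]))


def encode(experience):
    """Describe an experience (a sequence of feature vectors) as a combination of codes."""
    return [nearest_code(v) for v in experience]


print(encode([(0.9, 0.1), (0.6, 0.8), (0.1, 0.95)]))  # ['edge', 'corner', 'blob']
```

The same small set of codes can describe many different experiences, which is the property of reuse the paragraph above asks for.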

Making progress on neural-network models will require that researchers perform a search over architectures, and making progress with representation-based models will require a search over learning methods. For both kinds of search, we will need effective training environments and evaluations. In recent years, researchers have focused on training large neural-network models on images, news articles, or Wikipedia [42]. These models are useful, but they are ultimately limited because the data doesn’t have enough context to enable the model to learn what the pixels or words mean [43]. It would be better to use children's stories and TV shows as training data, but even content created for children lacks sufficient context to teach an AI on its own. Such content assumes its audience has had a few years of experience in the world, and this experience in the world is where training needs to start to enable an AI to learn deep, rich, and composable models.

We have to catch up with millions of years of evolution, so we need to work in simulation because physical robots are expensive and slow to train. We could begin with a simulated E. coli whose only actions are to turn and to move forward, so that it can climb the food gradient [44]. We could then increase the complexity of the body so the intelligence of the learner could adapt and grow, eventually giving the AI the ability to walk on land, and finally enabling it to stand upright so it could manipulate objects with its hands.
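
A starting point like this can be very small. The sketch below is a hypothetical run-and-tumble learner with exactly two actions, moving forward and turning to a random heading: it keeps its heading while the sensed food concentration is rising and turns otherwise. The food field, step size, and starting point are assumptions, and real bacteria compare concentrations over time and tumble probabilistically, so this deterministic rule is a simplification.

```python
import math
import random


def food(x, y):
    """Hypothetical food concentration: higher the closer you are to a source at the origin."""
    return -math.hypot(x, y)


def run_and_tumble(steps=1000, step_size=0.5, start=(10.0, 0.0), seed=0):
    """A learner with two actions: move forward, or turn to a random heading.

    It keeps its heading while the sensed food concentration is rising and
    turns whenever it stops rising. Returns the distance to the source after each step.
    """
    rng = random.Random(seed)
    x, y = start
    heading = rng.uniform(0.0, 2.0 * math.pi)
    last = food(x, y)
    distances = [math.hypot(x, y)]
    for _ in range(steps):
        x += step_size * math.cos(heading)            # action 1: move forward
        y += step_size * math.sin(heading)
        now = food(x, y)
        if now <= last:                               # no improvement, so action 2: turn
            heading = rng.uniform(0.0, 2.0 * math.pi)
        last = now
        distances.append(math.hypot(x, y))
    return distances


d = run_and_tumble()
print(f"start {d[0]:.1f}, closest {min(d):.2f}, final {d[-1]:.2f}")
```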

Rewalking the path of evolution might be necessary, but hopefully it won’t be. To save on expenses, we can start by simulating a typical home and a typical neighborhood. There are some great beginnings [45] in that direction, such as AI2-THOR and ThreeDWorld. But we can’t just put a simulated kid in a simulated house and expect it to learn; we need to help it focus attention. If we did rewalk the path of evolution, we could partially rely on an evolved internal reward function to focus attention, but starting that far back gives us less control and understanding of the internal reward function and the associated attention [46] mechanism. Regardless of how far back we start, to teach it about the modern world, we will need to guide its attention and scaffold its learning in its zone of proximal development so that what the learner is experiencing is at the edge of its knowledge but not too far out. Parents guide their children this way through shared attention. The caregiver points at a cup and says “cup,” and the learner sees a cup, and the learner grabs the cup and gets a liquid reward.
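
An automated teacher needs some rule for staying in the zone of proximal development. One simple heuristic, assumed here for illustration rather than taken from the essay, is to track the learner's success rate on each task and choose the task whose rate is closest to 50 percent. The task names, starting estimates, target, and update step below are all hypothetical.

```python
def pick_next_task(success_rates, target=0.5):
    """Pick the task whose estimated success rate is closest to target.

    Tasks the learner nearly always solves teach little, and tasks it never
    solves give little signal; a rate near the target marks the edge of competence.
    """
    return min(success_rates, key=lambda task: abs(success_rates[task] - target))


def record_attempt(success_rates, task, succeeded, step=0.1):
    """Update a task's success estimate with an exponential moving average."""
    outcome = 1.0 if succeeded else 0.0
    success_rates[task] += step * (outcome - success_rates[task])


# Hypothetical tasks and current success estimates for the simulated learner.
rates = {"name the cup": 0.95, "fetch the cup": 0.6, "pour into the cup": 0.1}
print(pick_next_task(rates))      # fetch the cup
for _ in range(20):               # the learner masters fetching
    record_attempt(rates, "fetch the cup", succeeded=True)
print(pick_next_task(rates))      # pour into the cup
```

As the learner masters one task, the rule moves it on to the next one at the edge of its ability, which is the scaffolding a caregiver provides informally.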

Parents are expensive, so we will need automated mechanisms to guide the simulated AIs in their simulated living rooms. We can’t just have the AI watch TV, but we could use existing TV shows to guide the training in simulation. The camera provides a weak signal for attention, so we could simultaneously have the simulation focus on the part of the environment the TV show is focusing on. The TV show could guide the “script” of the simulation. You could imagine a cooking show where the character picks up a pot, and this event guides the simulated parent to show a simulated pot to the AI in the simulation. We could use a curriculum where we first focus on TV show scenes that discuss only objects in the room and then progress to scenes that discuss objects outside of the room and then on to increasingly complex and abstract situations.

To guide our search for AI learning algorithms, we need effective measures of AI capabilities. Multiple-choice tests are not a good measure of AI progress. When we humans take those tests, like the SAT, we can make the results less valid by studying to the test, learning surface-level patterns that don’t reflect the deep reality of the subject domain. With an AI, this problem is far worse because computers have infinite patience and algorithms well suited to learning such patterns. Also, while the multiple-choice format is unnatural for humans because life rarely enumerates choices, it closely aligns with how computers are trained and make decisions (e.g., the softmax operator). This alignment makes it even easier for AI to study to the test.
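
The alignment is easy to see in code. A softmax turns a model's raw scores into a probability distribution over a fixed, enumerated list of options, and the prediction is the option with the highest probability, which is exactly the shape of a multiple-choice question. The scores below are hypothetical.

```python
import math


def softmax(scores):
    """Convert raw scores into a probability distribution over a fixed list of options."""
    top = max(scores)                              # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores a trained model might assign to four answer options.
options = ["A", "B", "C", "D"]
probs = softmax([1.2, 3.0, 0.5, 2.1])
answer = options[probs.index(max(probs))]
print(answer, [round(p, 2) for p in probs])
```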

If we can’t use multiple-choice tests to evaluate AI like we do for humans, what can we do? Fortunately, unlike with live human brains, we have access to the internal workings and structure of AI systems. We can measure how many models an AI has, and for each model we can measure how fundamental it is by noting how often it is used and the diversity of the situations to which it is applied. We can also measure how well the models are organized [47] to take advantage of composition, for example whether models that frequently go together have a structure in place (such as a prior probability) to reflect that coupling. At a gross level, we can measure how modular neural networks are. We can measure how much they look like a system of neural networks instead of a single, large neural network, and we can also measure how many kinds of data they consider. And for our representational models, we can directly measure how rich and deep they are. We can also measure whether their inferences match those of children [48]. A lot of research in developmental psychology is based on the fact that babies look longer at unexpected events [49], and we can similarly test whether our AI agents focus attention on the right things, such as events that should be surprising because they break the laws of physics.
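
Two of these measures are straightforward to sketch, assuming we can log which model the agent invoked in which kind of situation and what probability its model assigned to an observed event. The log format and the use of Shannon entropy as the diversity measure are assumptions made for illustration. Fundamentality is scored as a pair of use count and entropy over situation types, and surprise is scored as surprisal in bits, which should be high for events that break the laws of physics.

```python
import math
from collections import Counter, defaultdict


def fundamentality(usage_log):
    """Score models by how often they are used and how varied their situations are.

    usage_log is a hypothetical trace of (model_name, situation_type) pairs.
    Returns {model: (use_count, entropy in bits over situation types)}.
    """
    by_model = defaultdict(Counter)
    for model, situation in usage_log:
        by_model[model][situation] += 1
    scores = {}
    for model, counts in by_model.items():
        n = sum(counts.values())
        entropy = sum(-p * math.log2(p) for p in (c / n for c in counts.values()))
        scores[model] = (n, entropy)
    return scores


def surprisal(probability):
    """Bits of surprise for an event the agent's model assigned this probability."""
    return -math.log2(probability)


log = [("containment", "kitchen"), ("containment", "toy box"),
       ("containment", "car"), ("containment", "pocket"),
       ("birthday party", "party"), ("birthday party", "party")]
print(fundamentality(log))   # {'containment': (4, 2.0), 'birthday party': (2, 0.0)}
print(surprisal(0.9), surprisal(0.001))  # about 0.15 bits vs. about 9.97 bits
```

A looking-time style test could then check whether the agent spends more attention on high-surprisal events than on ordinary ones.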

We can also measure understanding by having the AI show us directly how it represents what we have told it. This means that in addition to creating simulations to train AI, we can have the AI create simulations to show us how well it understands. We could have the AI read children’s stories and depict what it sees by creating a simulation. If a story says, “The cat went out the open door into a sunny afternoon,” the AI can generate that scene using a video game engine like Unity. We can then judge how well that simulation matches the story. Was there a sun in the sky? What was on the ground outside the door? How big was the cat relative to the door?
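
Some of these judging questions can be turned into automatic checks. The sketch below uses a hypothetical scene format (a plain dictionary of objects with positions in meters and sizes); it is not Unity's or any other engine's format, and the thresholds, such as the plausible cat-to-door size ratio, are assumptions.

```python
# Hypothetical scene an AI might emit for "The cat went out the open door into a
# sunny afternoon." Units are meters; +y is up and +z points out through the door.
scene = {
    "sun": {"position": (0.0, 80.0, 60.0), "size": 5.0},
    "door": {"position": (0.0, 1.0, 0.0), "size": 2.0, "open": True},
    "cat": {"position": (0.0, 0.15, 1.5), "size": 0.3},
    "grass": {"position": (0.0, 0.0, 5.0), "size": 10.0},
}


def check_scene(scene):
    """Hand-written versions of the judging questions; each maps to True or False."""
    door, cat = scene["door"], scene["cat"]
    return {
        "sun in the sky": "sun" in scene and scene["sun"]["position"][1] > door["size"],
        "door is open": door.get("open", False),
        "cat went outside": cat["position"][2] > door["position"][2],
        "something on the ground outside": any(
            obj["position"][1] == 0.0 and obj["position"][2] > door["position"][2]
            for name, obj in scene.items() if name != "cat"),
        "cat much smaller than door": 0.05 < cat["size"] / door["size"] < 0.5,
    }


for question, passed in check_scene(scene).items():
    print(f"{question}: {'yes' if passed else 'no'}")
```

Hand-written checks like these only cover questions we anticipate, so they would complement rather than replace human judgment of the generated scenes.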

We have much of the infrastructure for this already. Pixar has open-sourced a language for describing computer-animated scenes, Universal Scene Description (USD), and NVIDIA has built mature tooling around it. We can vary the difficulty depending on the kinds of assets (primitives) we give the AI. Does it have to build a cat from scratch, or can it just grab an asset called “cat”? Once AI shows us that it can understand simple things in the physical world, we can evaluate whether it understands more complicated things using more fundamental concepts. For example, for the human body, the AI could understand cells as containers in the shape of spheres and veins as rivers of these flowing spheres.

We can also measure how often composable models add value in conversation. With a personal assistant like Siri or Alexa, responses may come from rote patterns or other methods, and when these methods aren’t able to answer a query, they sometimes resort to a web search. We could measure how often models can be used instead of a web search to successfully answer a query or perform the right observation.

Once the AI has learned rich and deep models down to image schemas and is able to compose them to understand the world as well as a child, it can start reading textbooks and watching videos to learn more. But the AI will still need to learn some things by doing, and we will need to build simulations for high school and college science labs. With this ability to gain experience and represent it with deep and rich models, the AI can begin to read scientific papers, and it can begin running simulated experiments as well as consulting open-source scientific datasets to test hypotheses. Ultimately, it can suggest experiments to humans or run them in the physical world with a robotic laboratory. With this ability to gain knowledge, AI can help push humanity forward.

References

[1] Hofstadter, D. R. (1985). Metamagical themas: Questing for the essence of mind and pattern. Basic Books.

[2] Marcus, G. (2020). The next decade in AI: Four steps towards robust artificial intelligence. ArXiv Preprint ArXiv:2002.06177.

[3] Goodman, N. D., & Frank, M. C. (2016). Pragmatic language interpretation as probabilistic inference. Trends in Cognitive Sciences, 20(11), 818–829.

[4] Mugan, J., & Kuipers, B. (2012). Autonomous learning of high-level states and actions in continuous environments. IEEE Transactions on Autonomous Mental Development, 4(1), 70–86.

[5] Pearl, J. (2000). Causality: Models, reasoning, and inference. Cambridge University Press.

[6] Mitchell, T. (1997). Machine Learning. McGraw-Hill Education.

[7] Gilbert, D. (2006). Stumbling on happiness. Knopf.

[8] Piaget, J. (1952). The Origins of Intelligence in Children. Norton.

[9] Fauconnier, G., & Turner, M. (2008). The way we think: Conceptual blending and the mind’s hidden complexities. Basic Books.

[10] Bergen, B. K. (2012). Louder than words: The new science of how the mind makes meaning. Basic Books.

[11] Mitchell, M. (2021). Abstraction and analogy-making in artificial intelligence. ArXiv Preprint ArXiv:2102.10717.

[12] Hofstadter, D. R., & Sander, E. (2013). Surfaces and essences: Analogy as the fuel and fire of thinking. Basic Books.

[13] Lakoff, G., & Johnson, M. (1980). Metaphors We Live By. University of Chicago Press.

[14] Gärdenfors, P. (2014). The geometry of meaning: Semantics based on conceptual spaces. MIT Press.

[15] Pease, A. (2011). Ontology: A practical guide. Articulate Software Press.

[16] Searle, J. R. (1980). Minds, brains, and programs. Behavioral and Brain Sciences, 3(3), 417–424.

[17] Piantadosi, S., & Aslin, R. (2016). Compositional reasoning in early childhood. PLoS ONE, 11(9), e0147734.

[18] Irpan, A. (2018). Deep Reinforcement Learning Doesn’t Work Yet. https://www.alexirpan.com/2018/02/14/rl-hard.html

[19] Gopnik, A. (2009). The Philosophical Baby: What Children’s Minds Tell Us About Truth, Love, and the Meaning of Life. Farrar, Straus and Giroux.

[20] Goodman, N. D., Tenenbaum, J. B., & The ProbMods Contributors (2016). Probabilistic Models of Cognition (2nd ed.). http://probmods.org/v2

[21] Bartlett, F. C. (1932). Remembering: A Study in Experimental and Social Psychology. Cambridge University Press.

[22] Schank, R. C., & Abelson, R. P. (1977). Scripts, plans, goals and understanding: An inquiry into human knowledge structures (Vol. 2). Lawrence Erlbaum Associates Hillsdale, NJ.

[23] Haugeland, J. (Ed.). (1997). Mind design II: Philosophy, psychology, artificial intelligence. MIT Press.

[24] Johnson, M. (1987). The Body in the Mind: The Bodily Basis of Meaning, Imagination, and Reason. University of Chicago Press.

[25] Mandler, J. (2004). The Foundations of Mind, Origins of Conceptual Thought. Oxford University Press.

[26] Feldman, J. (2006). From molecule to metaphor: A neural theory of language. MIT Press.

[27] Lakoff, G., & Johnson, M. (1980). Metaphors We Live By. University of Chicago Press.

[28] Thompson, E. (2010). Mind in life. Harvard University Press.

[29] Winograd, T., & Flores, F. (1986). Understanding computers and cognition: A new foundation for design. Intellect Books.

[30] Stoytchev, A. (2009). Some basic principles of developmental robotics. IEEE Transactions on Autonomous Mental Development, 1(2), 122–130.

[31] Silver, D., Schrittwieser, J., Simonyan, K., Antonoglou, I., Huang, A., Guez, A., Hubert, T., Baker, L., Lai, M., Bolton, A., & others. (2017). Mastering the game of Go without human knowledge. Nature, 550(7676), 354–359.

[32] Ellis, K., Wong, C., Nye, M., Sable-Meyer, M., Cary, L., Morales, L., Hewitt, L., Solar-Lezama, A., & Tenenbaum, J. B. (2020). DreamCoder: Growing generalizable, interpretable knowledge with wake-sleep Bayesian program learning. ArXiv Preprint ArXiv:2006.08381.

[33] Evans, R., Bošnjak, M., Buesing, L., Ellis, K., Pfau, D., Kohli, P., & Sergot, M. (2021). Making sense of raw input. Artificial Intelligence, 299, 103521.

[34] Harnad, S. (1990). The Symbol Grounding Problem. Physica D: Nonlinear Phenomena, 42, 335–346.

[35] Hawkins, J. (2021). A thousand brains: A new theory of intelligence. Basic Books.

[36] Kuipers, B. (2005). Consciousness: Drinking from the Firehose of Experience. AAAI.

[37] Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., Kaiser, Ł., & Polosukhin, I. (2017). Attention is all you need. Advances in Neural Information Processing Systems, 5998–6008.

[38] van den Oord, A., Vinyals, O., & Kavukcuoglu, K. (2017). Neural discrete representation learning. Advances in Neural Information Processing Systems, 6306–6315.

[39] Karunaratne, G., Schmuck, M., Le Gallo, M., Cherubini, G., Benini, L., Sebastian, A., & Rahimi, A. (2021). Robust high-dimensional memory-augmented neural networks. Nature Communications, 12(1), 1–12.

[40] Lake, B. M., Salakhutdinov, R., & Tenenbaum, J. B. (2015). Human-level concept learning through probabilistic program induction. Science, 350(6266), 1332–1338.

[41] Baum, E. B. (2004). What is thought? MIT Press.

[42] Bommasani, R., Hudson, D. A., Adeli, E., Altman, R., Arora, S., von Arx, S., Bernstein, M. S., Bohg, J., Bosselut, A., Brunskill, E., & others. (2021). On the opportunities and risks of foundation models. ArXiv Preprint ArXiv:2108.07258.

[43] Jackendoff, R. S. (2012). A user’s guide to thought and meaning. Oxford University Press.

[44] Bray, D. (2009). Wetware: A computer in every living cell. Yale University Press.

[45] Zellers, R., Holtzman, A., Peters, M., Mottaghi, R., Kembhavi, A., Farhadi, A., & Choi, Y. (2021). PIGLeT: Language Grounding Through Neuro-Symbolic Interaction in a 3D World. ArXiv Preprint ArXiv:2106.00188.

[46] Graziano, M. S. (2019). Rethinking consciousness: A scientific theory of subjective experience. W. W. Norton & Company.

[47] Alicea, B., & Parent, J. (2021). Meta-brain Models: Biologically-inspired cognitive agents. ArXiv Preprint ArXiv:2109.11938.

[48] Griffiths, T. L., Sobel, D. M., Tenenbaum, J. B., & Gopnik, A. (2011). Bayes and blickets: Effects of knowledge on causal induction in children and adults. Cognitive Science, 35(8), 1407–1455.

[49] Spelke, E. S., Breinlinger, K., Macomber, J., & Jacobson, K. (1992). Origins of knowledge. Psychological Review, 99(4), 605.

Citation

For attribution in academic contexts or books, please cite this work as

Jonathan Mugan, "Strong AI Requires Autonomous Building of Composable Models", The Gradient, 2021.

BibTeX citation:

@article{mugan2021strong,
  author = {Mugan, Jonathan},
  title = {Strong AI Requires Autonomous Building of Composable Models},
  journal = {The Gradient},
  year = {2021},
  howpublished = {\url{https://thegradient.pub/strong-ai-requires-autonomous-building-of-composable-models}},
}